Pdf Extract Text Python: A Guide for RAG Developers

A concise guide to extracting text from PDFs in Python with PyMuPDF and friends, for clean data in high-precision RAG workflows.

You can pull text from a PDF with a few lines of Python using libraries like PyMuPDF (fitz) or PyPDF2. A basic script opens the file, loops through the pages, and uses a function like page.get_text() to dump everything into a string. Easy enough.
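Here is a minimal sketch of that basic script, assuming PyMuPDF is installed and importable as fitz. The helper names `join_page_text` and `extract_pdf_text` are made up for illustration; only `fitz.open()` and `page.get_text()` come from the library.

```python
from typing import Iterable, Protocol


class PageLike(Protocol):
    """Anything with a get_text() method, such as a PyMuPDF page."""

    def get_text(self) -> str: ...


def join_page_text(pages: Iterable[PageLike]) -> str:
    """Concatenate the plain text of every page, separated by newlines."""
    return "\n".join(page.get_text() for page in pages)


def extract_pdf_text(path: str) -> str:
    """Open a PDF with PyMuPDF and return all of its text as one string."""
    import fitz  # PyMuPDF, imported lazily so join_page_text has no dependency

    with fitz.open(path) as doc:
        # Iterating over the document yields one page object at a time.
        return join_page_text(doc)
```

Calling `extract_pdf_text("report.pdf")` (a placeholder path) returns the whole document as a single string, with no attention paid to columns, tables, or headers.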

But for a Retrieval-Augmented Generation (RAG) system, that's just the first, most dangerous step.

Why Clean Text Extraction Is the Bedrock of RAG

Before you write a single line of code, let’s get one thing straight: your RAG system is only as good as the data you feed it. Getting text out of a PDF isn't just a box to check—it’s the foundation for high-quality retrieval. If you mess this up, nothing else matters.

Think of your RAG pipeline as a brilliant researcher. If you hand them a stack of papers where sentences are chopped in half, paragraphs are jumbled, and tables are a mess of garbled characters, you’re not going to get a coherent report back. The same exact logic applies to your AI.

The Downstream Impact on Retrieval Accuracy

Garbage in, garbage out. Sloppy text extraction injects noise that pollutes your entire pipeline, directly sabotaging retrieval performance.

- Fragmented Context: Imagine a two-column PDF. A naive script reads straight across, mixing the end of a sentence from column one with the start of an unrelated sentence from column two. This mangled text creates completely useless embeddings, making it impossible for your vector database to retrieve relevant context.

- Nonsensical Chunks: Bad extraction leads to bad chunks. If a sentence starts halfway through a paragraph and ends abruptly, the LLM has no hope of generating a sensible answer from that distorted mess. Your retrieval system will fetch these broken fragments, leading to poor-quality generation.

- Misleading Answers: This is the most dangerous part. Your system might confidently hallucinate an incorrect answer because its "knowledge" is built on a faulty foundation. When the original document's meaning and structure are lost, the RAG system can't retrieve the right facts.

The real goal isn't just pulling raw characters out of a file. It's about preserving the document's semantic integrity to enable precise retrieval. You need to give your RAG pipeline a clean, coherent version of the original source material. Only then can it find the right information.

For any developer serious about building a RAG application people can actually trust, moving beyond a basic extraction script is non-negotiable. By obsessing over clean, structured data from the very start, you set the stage for a retrieval system that delivers reliable, accurate information every time.

Choosing Your Python PDF Extraction Toolkit

When you're building a system to pull text from PDFs in Python, the number of libraries can feel a bit overwhelming. Each one has its own philosophy, its own strengths, and picking the wrong one can lead to a world of frustration, especially when you're feeding that text into a RAG pipeline.

The trick is to match the library's capabilities to what your project actually needs. For anyone building RAG systems, the decision really comes down to a few key things: raw speed, accuracy on digital PDFs, and whether it can preserve the document's original structure—a critical factor for retrieval quality.

Let's break down the most popular toolkits and see how they stack up for real-world development.

Python PDF Extraction Library Comparison

When you're evaluating libraries for a RAG pipeline, not all features are created equal. You're looking for the best combination of performance and output quality to ensure your retrieval step is as accurate as possible. This table gives a quick, head-to-head look at the main players.

| Library | Speed | Accuracy (Digital PDF) | Layout Preservation | Best For |
|---|---|---|---|---|
| PyMuPDF (fitz) | Excellent | High | Excellent (with coordinates) | High-performance, large-scale RAG pipelines where retrieval context is critical. |
| PyPDF2 | Moderate | Good | Basic | Simple, single-column documents where layout is not a concern. |
| pdfminer.six | Slow | Good | Very Good | Complex, multi-column layouts needing careful reconstruction for accurate context. |
| Tika | Moderate | Good | Basic | RAG systems ingesting many different file types, not just PDFs. |

As you can see, the "best" library is situational. But for most modern RAG applications where performance and structural integrity are non-negotiable, one library consistently comes out on top.

The Heavyweights: PyMuPDF and PyPDF2

PyMuPDF (fitz) is the undisputed speed demon. If you're processing thousands of documents and every millisecond matters, this is where you should start and probably finish. It’s built on MuPDF, a high-performance C library, which gives it a massive speed advantage over pure-Python options. For RAG, its ability to extract text with coordinate data is its most valuable feature for preserving context.

In the AI engineering space, Python has cemented its role for PDF text extraction, powering over 70% of data pipelines in RAG workflows, according to 2025 surveys from Stack Overflow and PyPI analytics. Libraries like PyMuPDF are at the forefront, capable of ripping through a 100-page digital PDF in under 0.5 seconds on standard hardware. That’s a 10x improvement over older tools, a finding backed by benchmarks we ran here at ChunkForge in early 2026.

Then you have PyPDF2, which is now deprecated in favor of its successor, pypdf. It’s often recommended for its simplicity and gentle learning curve. If your task is basic, like pulling all the text from simple, single-column reports, PyPDF2 gets the job done. The catch? It tends to struggle with complex layouts and is noticeably slower than PyMuPDF, making it a poor fit for high-throughput RAG systems where preserving structural context for retrieval is key.

Specialized Tools: pdfminer.six and Tika

While PyMuPDF handles most high-performance jobs, a couple of other libraries fill important niches.

pdfminer.six is a community-maintained fork of the original pdfminer, and its superpower is layout analysis. It’s brilliant at figuring out the spatial relationships between text blocks, which is critical for correctly reconstructing the reading order in documents with multiple columns. For academic papers or complex financial reports, this focus on structure can be a lifesaver for the quality of your retrieved text chunks.

And then there's Apache Tika. Think of Tika less as a PDF library and more as a universal content analysis toolkit. Its main advantage is versatility; it can chew through hundreds of file types, not just PDFs. If your RAG system needs to ingest a mix of documents (like DOCX, PPTX, and HTML files), Tika’s Python wrapper gives you a single, consistent way to handle all of them.

Key Takeaway: For most modern RAG applications, PyMuPDF (fitz) delivers the best combination of speed, accuracy, and detailed output. Its ability to provide coordinate data for every text block is invaluable for preserving a document's original structure, which is the first step toward high-quality retrieval.

So, here's a quick cheat sheet to guide your decision:

- For Maximum Speed & Retrieval Context: Go with PyMuPDF (fitz). It’s built for performance-critical jobs and huge document sets.

- For Simple Prototypes: PyPDF2 is a decent starting point for basic extraction, but be prepared to outgrow it quickly.

- For Complex Layouts: Look at pdfminer.six when you need to meticulously reconstruct the reading order to ensure contextual integrity.

- For Diverse File Types: Use Tika when your pipeline needs to be a universal content processor, not just a PDF reader.

Ultimately, the best tool is the one that solves your specific problem. Understanding these trade-offs from the start will help you build a more robust and effective retrieval system. To see how these tools work in practice, check out our in-depth guide to building a Python PDF reader.

Handling Complex PDFs: Tables, Layouts, and Scanned Documents

Sooner or later, every developer building a text extraction pipeline hits a wall. The simple scripts that breeze through clean, single-column documents just fall apart when they meet the messy reality of multi-column layouts, dense tables, and scanned pages.

This is where your extraction strategy needs to get smarter, especially if you're feeding this text into a RAG system.

When a basic text dump scrambles the reading order of a two-column research paper, the text chunks you create are complete nonsense. This garbage data poisons your vector embeddings, making accurate retrieval impossible. To fix this, you have to move beyond just grabbing text and start understanding the document's structure.

Reconstructing Reading Order with Coordinate Data

The secret to taming complex layouts for better retrieval lies in coordinate data, often called bounding boxes. Libraries like PyMuPDF (fitz) don't just hand you a wall of text; they can give you the exact (x0, y0, x1, y1) coordinates for every single word or block of text on the page. For a RAG pipeline, this is a total game-changer.

With these coordinates, you can programmatically piece together the intended reading flow. Instead of blindly reading left-to-right across the entire page, your script can sort text blocks first by their horizontal position (to identify the columns) and then by their vertical position (to read down each column).

This process ensures that sentences and paragraphs stay intact, preserving the original context your RAG system needs to retrieve accurate information. Getting this initial extraction right is critical for any downstream task. For instance, if you're trying to figure out how to translate a PDF and perfectly preserve its formatting, it all starts with a clean, well-ordered text foundation.
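The column-then-row sort described above can be sketched in a few lines. This example assumes a clean two-column page and works on plain `(x0, y0, x1, y1, text)` tuples, which are the leading fields of what PyMuPDF's `page.get_text("blocks")` returns. The function name and the midline rule are illustrative choices, not a library feature.

```python
from typing import List, Tuple

# (x0, y0, x1, y1, text); in PyMuPDF, y grows downward from the top of the page.
Block = Tuple[float, float, float, float, str]


def order_two_column(blocks: List[Block], page_width: float) -> List[str]:
    """Return block texts in reading order for a simple two-column page.

    Assumption: a block belongs to the left column when its horizontal
    centre lies left of the page midline. Full-width blocks (titles,
    wide tables) are not treated specially in this sketch.
    """
    midline = page_width / 2

    def sort_key(block: Block):
        x0, y0, x1, _y1, _text = block
        column = 0 if (x0 + x1) / 2 < midline else 1
        # Column first, then top-to-bottom, then left-to-right for ties.
        return (column, y0, x0)

    return [block[4].strip() for block in sorted(blocks, key=sort_key)]
```

With a real page you would feed it something like `[b[:5] for b in page.get_text("blocks")]` and `page.rect.width`. Pages that mix full-width headings with columns need a smarter rule, so treat the midline test as a starting point.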

Taming Tabular Data for Precise Retrieval

Tables are another massive headache. A standard text extraction will flatten a table into a long, jumbled string of text, completely destroying the relationships between rows and columns. This makes it impossible for a RAG system to answer a query like "What were the Q3 revenues in 2024?" because the necessary context is lost.

This is where you bring in specialists. Tools built for table extraction convert them into a structured format like a pandas DataFrame or even Markdown. This preserves the data's integrity, making it possible to create highly specific, context-rich chunks for your RAG pipeline that enable precise, factual retrieval. For a deeper dive, check out our guide on extracting tables from PDF documents.
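As a rough illustration of turning a table into Markdown, the sketch below renders rows of cells as a Markdown table. `rows_to_markdown` is a made-up helper; `page_tables_as_markdown` assumes a PyMuPDF version recent enough to provide `page.find_tables()`, so check your installed version before relying on it.

```python
from typing import List, Optional, Sequence


def rows_to_markdown(rows: Sequence[Sequence[Optional[str]]]) -> str:
    """Render table rows (first row is the header) as a Markdown table."""
    if not rows:
        return ""

    def clean(cell: Optional[str]) -> str:
        # Empty cells arrive as None; newlines and pipes would break a row.
        return (cell or "").replace("\n", " ").replace("|", "\\|").strip()

    header, *body = [[clean(cell) for cell in row] for row in rows]
    lines = [
        "| " + " | ".join(header) + " |",
        "| " + " | ".join("---" for _ in header) + " |",
    ]
    lines += ["| " + " | ".join(row) + " |" for row in body]
    return "\n".join(lines)


def page_tables_as_markdown(page) -> List[str]:
    """Assumes a PyMuPDF page object that supports find_tables()."""
    return [rows_to_markdown(table.extract()) for table in page.find_tables().tables]
```

Each Markdown table can then become its own chunk, so a query about one row retrieves the header and the values together.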

Building an OCR Fallback for Scanned Documents

Finally, you'll inevitably run into scanned PDFs—documents that are basically just images of text. Your standard text extraction tools will come up empty. The solution is to build a hybrid workflow that pulls in Optical Character Recognition (OCR).

A robust extraction pipeline should treat every page as a potential image. The goal is to attempt digital extraction first for speed and accuracy, but have an OCR engine like pytesseract ready to process any page that fails the initial check.

A smart workflow for comprehensive data retrieval looks something like this:

- First, try extracting text from a page using PyMuPDF.

- Check the result. Is the returned text empty or just a few garbage characters?

- If so, use PyMuPDF again, but this time to render the page as a high-resolution image.

- Finally, pass that image over to pytesseract to perform OCR and get the text.

This two-step process creates a resilient system that can handle both digitally native and scanned documents, ensuring no information gets left behind. By tackling layouts, tables, and scanned images head-on, you build a foundation of clean, reliable data that will empower your RAG system to deliver truly accurate results.
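The steps above can be sketched as a single function. This is one possible shape, assuming PyMuPDF, Pillow, and pytesseract (plus the Tesseract binary) are installed. The `needs_ocr` heuristic and its 20-character threshold are illustrative assumptions you should tune on your own documents.

```python
import io


def needs_ocr(text: str, min_chars: int = 20) -> bool:
    """Heuristic: treat a page as scanned when it yields almost no letters or digits."""
    return sum(ch.isalnum() for ch in text) < min_chars


def extract_page_text(page, dpi: int = 300) -> str:
    """Try the embedded text layer first, then fall back to OCR."""
    text = page.get_text()
    if not needs_ocr(text):
        return text

    # Lazy imports: these are only needed on the OCR path.
    from PIL import Image
    import pytesseract

    pix = page.get_pixmap(dpi=dpi)  # render the page as a raster image
    image = Image.open(io.BytesIO(pix.tobytes("png")))
    return pytesseract.image_to_string(image)
```

Running OCR only on pages that fail the check keeps the fast path fast, since rendering and recognition are far slower than reading an existing text layer.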

Advanced Extraction Techniques for Better RAG Retrieval

To build a RAG system that actually works, you need to get beyond just dumping raw text into a vector database. High-quality retrieval comes from data that’s rich with context, and that’s where advanced extraction comes in. This is what separates a quick demo from a production-grade system that gives people accurate, verifiable answers.

Think of it as intelligently processing your PDFs before you even think about chunking. By treating the document as a structured source of information instead of a flat text file, you can surgically cut out the noise and inject valuable context. This directly boosts retrieval performance.

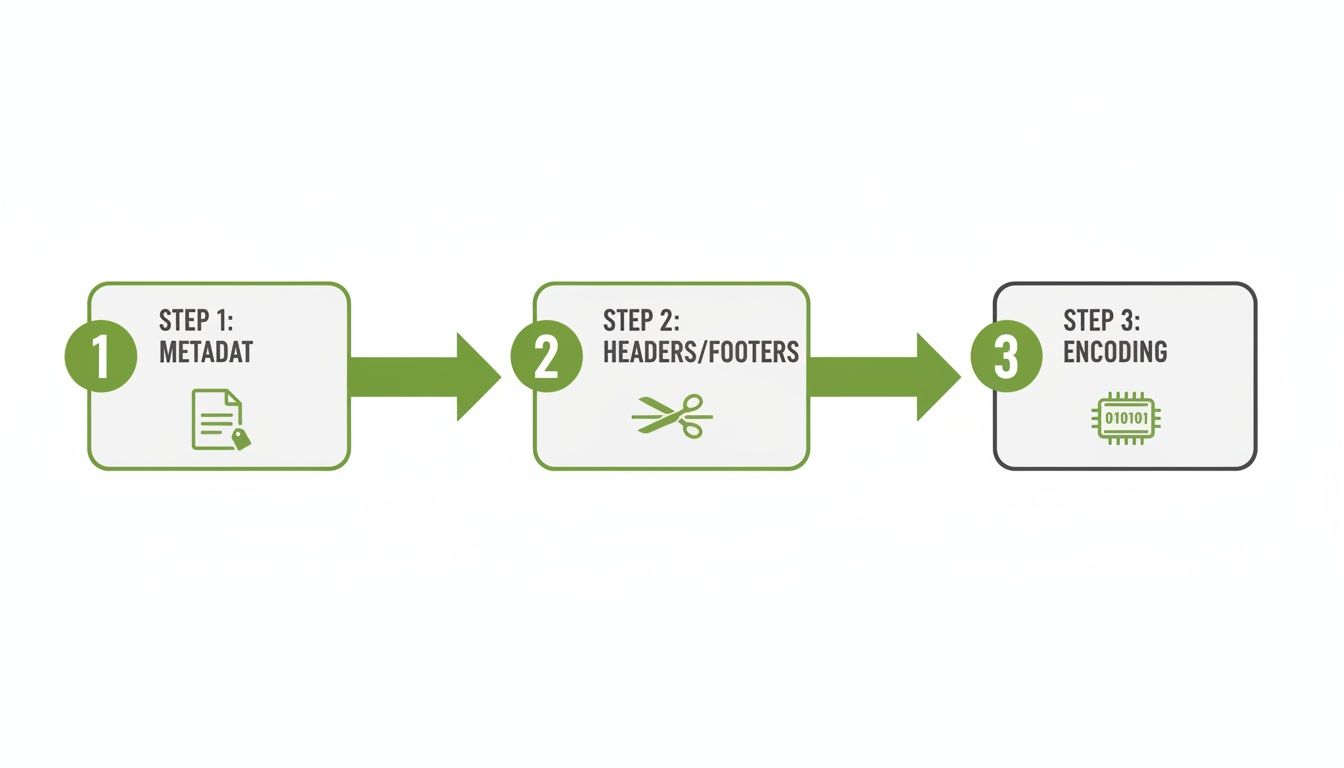

Going Beyond the Text Body

The text itself is just one piece of the puzzle. Most PDFs are full of metadata that can be a goldmine for improving RAG performance, especially for filtering results and tracking down sources.

- Extracting Document Properties: Most libraries can easily peek into the document's info dictionary. You can pull out the Author, Title, Subject, and CreationDate with just a few lines of code. Attaching this metadata to your chunks enables powerful filtered queries in your vector database, allowing users to narrow searches by source or date, which is a huge win for retrieval accuracy.

- Identifying Headers and Footers: Recurring headers and footers are pure noise. They add zero semantic value but create a ton of confusion for your embeddings. Page numbers, copyright notices, and document titles repeated on every single page will only dilute the meaning of your text chunks and lead to irrelevant retrievals.

You can—and should—programmatically find and discard this stuff. A common technique is to analyze the text blocks on the first and last few pages to identify patterns in content and position. Once you've identified a recurring header or footer, you can create a rule to ignore any text blocks appearing in that specific bounding box on all subsequent pages.
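Here is a simplified, text-only take on that idea. Instead of comparing bounding boxes, it looks at the first and last few lines of each page and drops any line that recurs on most pages. The function name, the `edge` window, and the 60% threshold are all assumptions made for illustration.

```python
import re
from collections import Counter
from typing import List


def _normalise(line: str) -> str:
    # Replace digits so "Page 3" and "Page 4" count as the same line.
    return re.sub(r"\d+", "#", line.strip().lower())


def _is_edge(index: int, total: int, edge: int) -> bool:
    return index < edge or index >= total - edge


def strip_headers_footers(
    pages: List[List[str]], edge: int = 2, min_share: float = 0.6
) -> List[List[str]]:
    """Drop top/bottom lines that repeat on at least min_share of the pages."""
    counts: Counter = Counter()
    for lines in pages:
        seen = {
            _normalise(line)
            for i, line in enumerate(lines)
            if line.strip() and _is_edge(i, len(lines), edge)
        }
        counts.update(seen)

    threshold = max(2, min_share * len(pages))
    noise = {text for text, n in counts.items() if n >= threshold}

    return [
        [
            line
            for i, line in enumerate(lines)
            if not (_is_edge(i, len(lines), edge) and _normalise(line) in noise)
        ]
        for lines in pages
    ]
```

Because only edge lines are candidates, a sentence that happens to repeat in the middle of several pages is left alone. A coordinate-based version would apply the same counting to block positions instead of line indexes.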

Handling Textual Artifacts and Encoding

Even when the structure is clean, the text itself can be a minefield of subtle issues that will poison your embeddings and harm retrieval. Two of the most common culprits are ligatures and encoding errors.

A ligature is when two or more letters get squished into a single character, like 'fi' or 'fl'. A naive text extraction might misread these, turning a word like 'file' into 'le'. It seems like a tiny error, but it's enough to make that word completely invisible to semantic search.

Encoding problems are just as bad, sneaking in weird characters (â€", ®) that break sentences and mangle meaning. Modern libraries have gotten much better at handling this, but running your text through a final cleaning step with a library like ftfy to fix Unicode mistakes is a non-negotiable best practice for any serious pipeline.
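A small cleaning step might look like the sketch below. It uses ftfy's `fix_text()` when the package is installed and always applies Unicode NFKC normalization from the standard library, which expands ligature characters into plain letters. The function name is hypothetical.

```python
import unicodedata


def clean_extracted_text(text: str) -> str:
    """Expand ligatures and, when ftfy is installed, repair common mojibake."""
    try:
        import ftfy  # optional third-party dependency

        text = ftfy.fix_text(text)
    except ImportError:
        pass  # fall back to standard-library normalization only

    # NFKC maps compatibility characters such as U+FB01 to the letters "fi".
    return unicodedata.normalize("NFKC", text)
```

One caveat: NFKC also rewrites other compatibility characters (superscript digits become ordinary digits, for example), so check that this is acceptable for documents where such symbols carry meaning.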

The core idea here is to treat text extraction as a data refinement process. By stripping out irrelevant junk like headers and fixing textual artifacts like ligatures, you create a much cleaner signal for your embedding model. This leads directly to more precise retrieval.

The pypdf library, which has come a long way and now boasts over 2 million monthly PyPI downloads, has solid features for this. Its visitor hooks, such as the visitor_text callback you can pass to extract_text(), allow you to inspect text elements as they're extracted, analyzing position and font information to programmatically identify and remove headers or footers. This makes it a reliable workhorse for cleaning complex documents where getting the text right is everything. I'd recommend checking out the official documentation to explore its more advanced features.
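To make the visitor idea concrete, here is a sketch that keeps only text between two vertical positions. It follows the callback signature pypdf uses for `visitor_text`, which is `(text, cm, tm, font_dict, font_size)`. The margins of 50 and 720 points are placeholder values, and reading the y position from `cm[5]` is an assumption: depending on how a PDF was produced, the offset may sit in the text matrix `tm` instead, so inspect both on your own files.

```python
from typing import Callable, List


def make_body_visitor(parts: List[str], y_min: float, y_max: float) -> Callable:
    """Build a visitor_text callback that keeps text between two y positions."""

    def visitor(text, cm, tm, font_dict, font_size):
        y = cm[5]  # vertical offset; assumed to live in the transformation matrix
        if y_min < y < y_max:
            parts.append(text)

    return visitor


def extract_body_text(path: str, y_min: float = 50, y_max: float = 720) -> str:
    """Extract text from every page, skipping the header and footer bands."""
    from pypdf import PdfReader  # lazy import; requires pypdf

    parts: List[str] = []
    visitor = make_body_visitor(parts, y_min, y_max)
    for page in PdfReader(path).pages:
        page.extract_text(visitor_text=visitor)
    return "".join(parts)
```

The same callback also receives `font_size`, so you could extend the filter to flag large text as likely headings.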

From Extracted Text to RAG-Ready Chunks

Getting the text out of a PDF is really only half the battle. Now comes the critical part: turning that raw dump of text into clean, optimized chunks that your Retrieval-Augmented Generation (RAG) system can actually use. This is where your hard work on extraction truly pays off.

The chunking strategy you land on will directly and significantly impact your retrieval accuracy. It's a make-or-break step. A naive fixed-size approach can easily slice a sentence right down the middle, destroying its meaning and making the resulting chunk useless for retrieval. The goal is to create chunks that are both semantically complete and small enough for an LLM to digest.

Optimizing Chunks for Contextual Retrieval

Look, the best RAG systems don't just split text arbitrarily. They rely on intelligent chunking that respects the document's structure, which you've worked so hard to preserve.

- Recursive Chunking: This is a solid starting point. It tries to keep related text together by splitting content hierarchically, first by double newlines (paragraphs), then single newlines, and so on. This respects the natural paragraph breaks in your clean, extracted text.

- Semantic Chunking: A much more advanced technique. It uses embedding models to group sentences by their similarity, creating chunks that are genuinely coherent and on-topic, which is ideal for precise retrieval.

- Heading-Based Chunking: If your PDFs have a clean structure (H1, H2, etc.), you can use those headings as natural boundaries. This ensures all the text under a specific header stays together, creating highly contextual chunks.
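To show what recursive chunking looks like in practice, here is a small character-based sketch. It splits on paragraph breaks first, packs neighbouring pieces together while they fit, and only recurses into finer separators for pieces that are still too long. The 500-character limit and the separator list are illustrative defaults, and production splitters usually count tokens and add overlap.

```python
from typing import List, Sequence


def recursive_split(
    text: str,
    max_chars: int = 500,
    separators: Sequence[str] = ("\n\n", "\n", " "),
) -> List[str]:
    """Split on the coarsest separator first, recursing only into oversized pieces."""
    text = text.strip()
    if len(text) <= max_chars:
        return [text] if text else []
    if not separators:
        # Nothing left to split on: fall back to a hard cut.
        return [text[i:i + max_chars] for i in range(0, len(text), max_chars)]

    sep, finer = separators[0], separators[1:]
    chunks: List[str] = []
    current = ""
    for piece in text.split(sep):
        candidate = f"{current}{sep}{piece}" if current else piece
        if len(candidate) <= max_chars:
            current = candidate  # keep packing neighbours together
            continue
        if current:
            chunks.append(current)
        if len(piece) <= max_chars:
            current = piece
        else:
            chunks.extend(recursive_split(piece, max_chars, finer))
            current = ""
    if current:
        chunks.append(current)
    return chunks
```

Short paragraphs survive intact, and only a paragraph that exceeds the limit gets broken at line or word boundaries.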

Before you even get to chunking, though, there are a few foundational data processing steps you need to nail down.

Enriching your data with metadata, stripping out noisy headers and footers, and fixing any encoding problems gives you a much cleaner text stream to work with. Get this right, and your retrieval accuracy will improve dramatically.

This is where a tool like ChunkForge can save you a ton of time. It’s built to streamline this whole workflow, letting you visually test different chunking strategies, tweak the overlap, and immediately see how your chunks map back to the original PDF. That instant feedback is invaluable for quality control.

Here's a pro tip: Enrich your chunks with metadata about their location in the document (e.g., page number, section heading). This creates a much more context-aware dataset for your RAG system, allowing for precise filtering that leads to way more accurate retrieval. You can dive deeper into the nuances by exploring different chunking strategies for RAG.
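A sketch of that enrichment, using plain dictionaries so the records can be handed to most vector stores. The field names (`page`, `section`, `title`) and both helper functions are illustrative. With PyMuPDF, document-level values such as the title and author could come from `doc.metadata`.

```python
from typing import Dict, Iterable, List, Tuple


def build_chunks(
    paragraphs: Iterable[Tuple[int, str, str]], doc_meta: Dict[str, str]
) -> List[dict]:
    """Turn (page, section, text) triples into chunk records with metadata."""
    return [
        {"text": text, "metadata": {**doc_meta, "page": page, "section": section}}
        for page, section, text in paragraphs
    ]


def filter_chunks(chunks: List[dict], **wanted) -> List[dict]:
    """Keep chunks whose metadata matches every key=value pair given."""
    return [
        chunk
        for chunk in chunks
        if all(chunk["metadata"].get(key) == value for key, value in wanted.items())
    ]
```

For example, `filter_chunks(chunks, section="Results")` narrows the candidate set before any similarity search runs, which is the same idea a vector database applies with metadata filters.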

Once your text is perfectly extracted and chunked, the next skill to master is prompt engineering to make sure you’re getting the most effective results from your AI.

Common Questions About Python PDF Extraction for RAG

When you're wrestling with PDFs, especially for a RAG pipeline, you start to see the same problems crop up again and again. Getting past these hurdles quickly is the key to building something that actually works well.

Let's walk through the most common questions and how to solve them.

Which Python Library Is Best for Scanned PDFs?

There's no single silver bullet for scanned, image-based PDFs. You're going to need a hybrid approach to ensure complete data capture for your RAG system.

Your best bet is to start with a library like PyMuPDF (fitz) and loop through the document page by page. It's incredibly fast at pulling out any digitally native text.

But what about the scanned pages? When page.get_text() comes back empty or with just a few garbled characters, you've found an image. From there, you can use PyMuPDF to render that specific page as an image and pass it directly to an OCR library like pytesseract. This two-step dance ensures you retrieve text from both native and scanned sources, creating a comprehensive knowledge base.

How Do I Preserve the Original Layout and Reading Order?

Getting the layout wrong can completely poison your RAG system's context. If your chunks are out of order, the retrieved context will be fragmented and confusing. To get this right, you have to work with a library that gives you coordinate data—often called bounding boxes—for every piece of text.

PyMuPDF's get_text('dict') method is a lifesaver here. It returns a nested dictionary describing every text block on the page, complete with its coordinates. You can then write a bit of Python to sort these blocks, first by their horizontal position (to handle columns) and then vertically. This reconstructs the natural reading order before you even think about chunking, ensuring the context you retrieve is coherent.
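For reference, the dictionary nests blocks, then lines, then spans. A minimal sketch for flattening it into sortable tuples could look like this. The function name is made up, and the code assumes the usual layout of the result: a `blocks` list whose text blocks have `type` 0, a `bbox`, and `lines` containing `spans`.

```python
from typing import Any, Dict, List, Tuple


def text_blocks(page_dict: Dict[str, Any]) -> List[Tuple[float, float, float, float, str]]:
    """Flatten get_text("dict") output into (x0, y0, x1, y1, text) tuples."""
    flattened = []
    for block in page_dict.get("blocks", []):
        if block.get("type") != 0:  # 0 = text block; image blocks have no lines
            continue
        x0, y0, x1, y1 = block["bbox"]
        # Join the spans of each line, then the lines of the block.
        text = "\n".join(
            "".join(span["text"] for span in line["spans"])
            for line in block["lines"]
        )
        flattened.append((x0, y0, x1, y1, text))
    return flattened
```

From here, sorting can be a one-liner such as `sorted(blocks, key=lambda b: (b[0] >= page_dict["width"] / 2, b[1]))` for a clean two-column page. Real layouts often need a more careful column test.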

Why Is My Extracted Text Full of Strange Characters?

If your output looks like gibberish—weird symbols, missing spaces, or garbled words—it's almost always one of two things: a character encoding mismatch or PDF artifacts like ligatures (where "fi" or "fl" gets turned into a single symbol). Both issues can prevent your retrieval system from matching queries to the text.

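First, the easy check: Python 3 libraries hand you Unicode strings, so the usual culprit is a later step. Make sure every place you write or read the extracted text (files, databases, HTTP payloads) uses UTF-8 explicitly rather than a platform default. That solves a surprising number of issues right off the bat.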

Second, modern libraries like PyMuPDF have gotten much better at handling ligatures automatically. If you still see problems, run the extracted text through a cleanup library like ftfy to fix common Unicode errors. This simple post-processing step ensures that the text you embed is clean and searchable, maximizing your chances of successful retrieval.
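To see where those strange characters come from, the sketch below reproduces the classic failure with the standard library alone: UTF-8 bytes read back as Windows-1252. All four helper names are hypothetical, and the manual reversal only works for this one specific mix-up, which is why a general-purpose fixer is the better tool in a real pipeline.

```python
from pathlib import Path


def save_text(path: str, text: str) -> None:
    """Write text with an explicit encoding instead of the platform default."""
    Path(path).write_text(text, encoding="utf-8")


def load_text(path: str) -> str:
    """Read text back with the same explicit encoding."""
    return Path(path).read_text(encoding="utf-8")


def simulate_mojibake(text: str) -> str:
    """Show what happens when UTF-8 bytes are misread as Windows-1252."""
    return text.encode("utf-8").decode("cp1252")


def undo_mojibake(garbled: str) -> str:
    """Reverse that specific mix-up; raises if the text was not garbled this way."""
    return garbled.encode("cp1252").decode("utf-8")
```

Passing "café" through `simulate_mojibake` gives "cafÃ©", the same kind of debris that shows up when a file written as UTF-8 is opened with the wrong codec.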

Ready to skip the tedious setup and get straight to creating perfectly optimized, RAG-ready chunks? ChunkForge provides a visual studio to experiment with multiple chunking strategies, enrich data with deep metadata, and instantly verify your results. Start your free trial today and transform your raw documents into high-quality AI assets. Find out more at https://chunkforge.com.