A Practical Guide to Retrieval-Augmented Generation

Discover how retrieval-augmented generation (RAG) builds smarter, more reliable AI. This guide provides actionable strategies to improve your RAG systems.

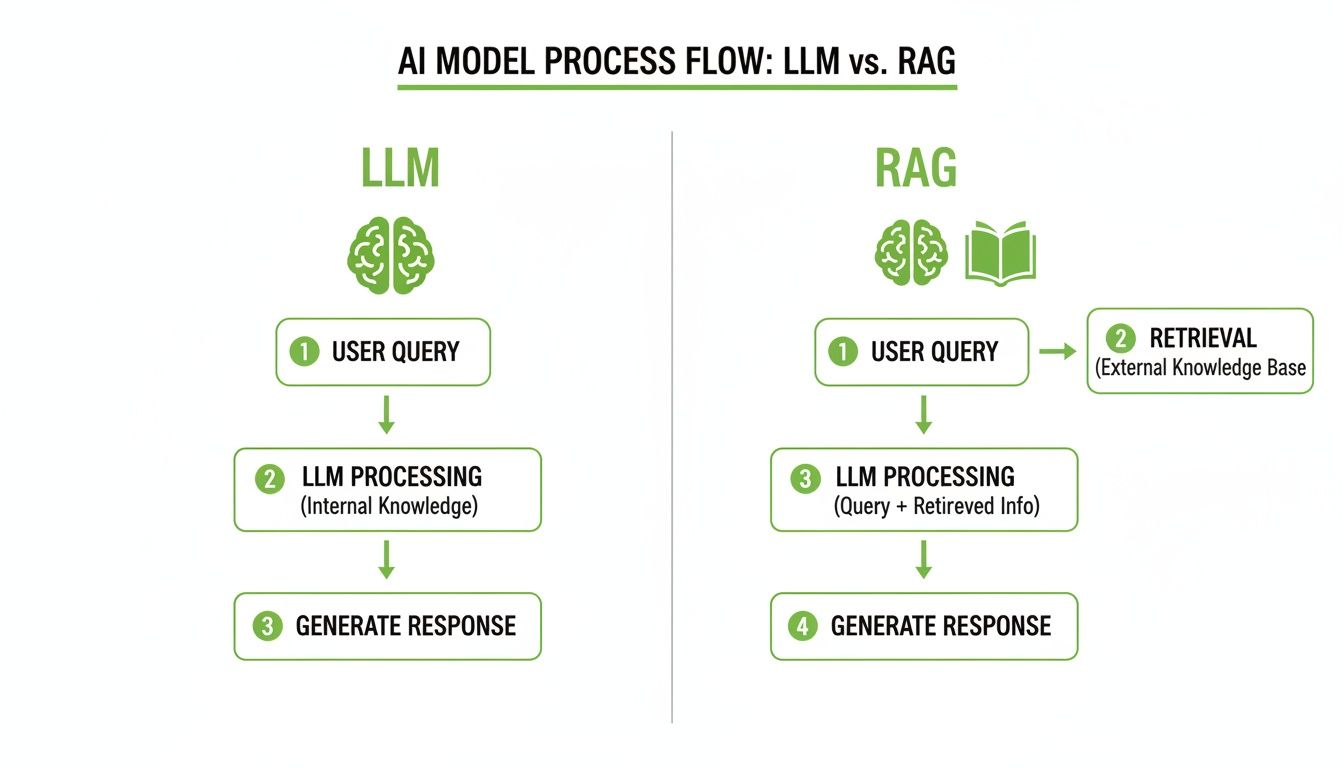

Retrieval-augmented generation is a technique that gives your generative AI models access to external, real-time information. This simple move transforms a standard Large Language Model (LLM) from a closed-book test-taker into an open-book expert. Instead of just recalling what it was trained on, the model can now ground its answers in verifiable facts.

Why Retrieval-Augmented Generation Matters

Picture a standard LLM as a brilliant student who memorized an entire library but stopped reading new books a year ago. Their knowledge is massive, but it’s also stale and missing the specific, proprietary context you might need. This is a major roadblock for engineers building real-world applications: when an LLM lacks current or domain-specific information, it tends to "hallucinate," producing plausible-sounding but incorrect facts.

Retrieval-augmented generation (RAG) is the direct solution to this problem. Rather than retraining a colossal model—which is incredibly expensive and slow—RAG gives it a curated, real-time library to check before it generates a response. It’s a simple shift in process, but the implications are profound.

Addressing Core LLM Weaknesses

RAG isn't just a minor tweak; it fundamentally overhauls how AI systems find and use information. It brings some serious benefits to the table.

- Combating Hallucinations: By grounding responses in retrieved documents, RAG substantially reduces the risk of the model inventing answers. The model is instructed to base its output on the provided text, which keeps it closer to the facts.

- Accessing Real-Time Data: LLMs are basically frozen in time, with a hard knowledge cutoff date. RAG hooks them up to live data sources, letting your application answer questions about recent events, updated company policies, or brand-new product specs.

- Providing Verifiable Sources: One of the best features of a solid RAG system is its ability to cite sources. This builds user trust by letting people check the facts for themselves—an absolute must-have for any serious enterprise or customer-facing tool.

Before we dive deeper, let's summarize the key problems with standalone LLMs and how RAG directly tackles them.

Core Problems Solved by RAG

| LLM Challenge | How RAG Provides a Solution |
|---|---|
| Knowledge is Static | Connects the LLM to live, up-to-date data sources, bypassing knowledge cutoffs. |
| Prone to "Hallucinations" | Grounds responses in retrieved factual content, forcing the model to rely on evidence. |
| Lacks Domain-Specific Context | Injects proprietary, private data (like internal docs) into the prompt at runtime. |
| Responses are a "Black Box" | Enables source citations, allowing users to verify where the information came from. |

Ultimately, RAG gives developers a practical way to build reliable, trustworthy AI without the immense cost of continuous model retraining.

A RAG system transforms an AI's task from pure recall to informed synthesis. It’s the difference between asking someone a question off the top of their head versus letting them quickly consult an encyclopedia before they answer.

Market projections reflect this impact. Valued at USD 1.3 billion in 2024, the RAG market is projected to reach USD 74.5 billion by 2034, a compound annual growth rate (CAGR) of 49.9%. Growth on that scale shows how essential RAG has become for building production-grade AI systems. You can get more details on these market projections and what they mean.

For engineers, this technology unlocks a whole new class of intelligent apps. Imagine a customer support bot that knows your company's latest policy updates the second they're published, or an internal knowledge base that gives employees verifiable answers from your team’s documentation. Getting good at RAG is no longer optional—it's essential for building AI that is not just powerful, but also trustworthy and grounded in reality.

How a RAG System Actually Works

So, how does this all come together? The "open-book exam" analogy is a great starting point, but to really get it, you have to look under the hood. A RAG system isn't just one thing; it's a carefully orchestrated pipeline that turns raw documents into smart, accurate answers.

Think of it like a research assistant's workflow.

This diagram shows the core difference between a standard LLM just making things up and a RAG system that actually looks things up first.

That retrieval step is everything. It's what keeps the LLM's response tethered to reality—your reality, based on your data.

Step 1: Data Ingestion and Chunking

It all starts with your knowledge base. This could be anything: a folder of PDFs, a database of customer support tickets, or transcripts from your last 100 sales calls.

You can't just feed a 300-page manual to an LLM. Its "context window"—think of it as short-term memory—is finite. This is where chunking comes in.

Chunking is the art and science of breaking down massive documents into smaller, digestible pieces. The goal is to create chunks that are big enough to hold a complete thought but small enough for the model to work with. If you get this wrong, you end up with fragmented ideas and useless search results. It's a critical first step.
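As a baseline for this step, here is a minimal sketch of fixed-size chunking in Python. It assumes whitespace-separated words are an acceptable rough stand-in for tokens, and the function name `chunk_words` and its defaults are illustrative rather than taken from any library. Neighboring chunks share `overlap` words, so text cut at a boundary keeps some of its surrounding context.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 20) -> list[str]:
    """Split text into chunks of at most `chunk_size` words; consecutive chunks share `overlap` words."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be at least 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks


text = " ".join(f"w{i}" for i in range(10))
print(chunk_words(text, chunk_size=4, overlap=1))
# ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```

Fixed-size splitting ignores sentence and section boundaries entirely, which is exactly why the more structure-aware strategies discussed later tend to retrieve better.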

Step 2: Creating Embeddings

Okay, your documents are now in neat little chunks. But how does a machine understand what they mean? That's where embeddings come into play.

An embedding model, which is a specific kind of neural network, reads each text chunk and converts it into a long list of numbers called a vector. This isn't random; this vector is a mathematical representation of the chunk's semantic meaning.

Chunks of text that talk about similar concepts will have vectors that are numerically "close" to each other in a massive, multi-dimensional space.

This is the secret sauce behind semantic search. It's what allows the system to find content that is conceptually related to a user's question, even if the exact keywords don't match.
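To make "numerically close" concrete: the most common closeness measure for embeddings is cosine similarity, the cosine of the angle between two vectors. The sketch below uses hypothetical three-dimensional vectors in place of real embeddings, which typically have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = orthogonal (unrelated)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction to compare
    return dot / (norm_a * norm_b)


# Hypothetical toy "embeddings" for three chunks.
refund_policy = [0.9, 0.1, 0.0]
returns_faq = [0.8, 0.2, 0.1]
office_hours = [0.0, 0.1, 0.9]

print(round(cosine_similarity(refund_policy, returns_faq), 3))   # 0.984
print(round(cosine_similarity(refund_policy, office_hours), 3))  # 0.012
```

The two refund-related chunks point in almost the same direction, while the office-hours chunk is nearly orthogonal to them, even though no keywords were compared.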

Step 3: Indexing in a Vector Database

You now have a mountain of text chunks, each with its own numerical vector. You need a place to store them where they can be searched in milliseconds. That's the job of a vector database.

A vector database is a purpose-built system designed to store, index, and query millions or even billions of these high-dimensional vectors at incredible speeds. When you load your vectors into it, the database organizes them so finding the "closest neighbors" to a new query vector is brutally efficient.

It’s like a library organized by ideas instead of the alphabet.
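Conceptually, a vector database answers one question: which stored vectors are nearest to this query vector? The hypothetical `InMemoryVectorIndex` below answers it with an exact, brute-force scan, which is fine for a few thousand vectors. Production vector databases instead build approximate nearest neighbor (ANN) indexes, such as HNSW graphs, so they stay fast at millions or billions of vectors.

```python
import heapq
import math
from dataclasses import dataclass, field


@dataclass
class InMemoryVectorIndex:
    """Hypothetical toy index: exact (brute-force) cosine search over stored vectors."""
    ids: list[str] = field(default_factory=list)
    vectors: list[list[float]] = field(default_factory=list)

    def add(self, doc_id: str, vector: list[float]) -> None:
        norm = math.sqrt(sum(x * x for x in vector))
        if norm == 0:
            raise ValueError("cannot index a zero vector")
        # Store unit-length vectors so a plain dot product equals cosine similarity.
        self.ids.append(doc_id)
        self.vectors.append([x / norm for x in vector])

    def search(self, query: list[float], k: int = 3) -> list[tuple[str, float]]:
        norm = math.sqrt(sum(x * x for x in query))
        if norm == 0:
            return []
        q = [x / norm for x in query]
        scored = (
            (doc_id, sum(a * b for a, b in zip(q, vec)))
            for doc_id, vec in zip(self.ids, self.vectors)
        )
        # Keep only the k highest-scoring (closest) documents.
        return heapq.nlargest(k, scored, key=lambda pair: pair[1])


index = InMemoryVectorIndex()
index.add("refund_policy", [0.9, 0.1, 0.0])
index.add("returns_faq", [0.8, 0.2, 0.1])
index.add("office_hours", [0.0, 0.1, 0.9])
results = index.search([1.0, 0.1, 0.0], k=2)
print([doc_id for doc_id, _ in results])  # ['refund_policy', 'returns_faq']
```

Normalizing at insert time is a common design choice: it moves the division out of the query path, so each comparison is a single dot product.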

Step 4: The Retrieval and Generation Flow

With all the prep work done, your RAG system is ready for action. When a user asks a question, here’s what happens in a split second:

- Query Embedding: First, the user's question (e.g., "What were our Q3 revenue goals?") is converted into a vector by the same embedding model used for the documents. Now the question and the documents speak the same mathematical language.

- Vector Search: The system sends this query vector to the vector database, which searches its index for the document vectors most similar to it and returns the top `k` matches.

- Context Augmentation: These top-matching chunks of text are pulled out and stitched together with the user's original question. This creates a new, far more detailed prompt for the LLM.

- Final Generation: Finally, this enriched prompt is handed to the LLM. With all the relevant context right there, the model doesn't have to guess. It synthesizes a precise, fact-based answer grounded in the information you provided.
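The augmentation step is mostly careful string assembly. The sketch below is one way to do it, assuming the retrieved chunks arrive best-first and using a character budget as a crude proxy for the model's token limit; the prompt wording and the `build_augmented_prompt` name are illustrative. The final generation call is left out because it depends on whichever LLM client you use.

```python
def build_augmented_prompt(question: str, retrieved_chunks: list[str], max_context_chars: int = 4000) -> str:
    """Combine best-first retrieved chunks with the user's question into one grounded prompt."""
    context_parts = []
    used = 0
    for i, chunk in enumerate(retrieved_chunks, start=1):
        entry = f"[{i}] {chunk.strip()}"  # numbered so the model can cite its sources
        if used + len(entry) > max_context_chars:
            break  # stop before overflowing the (approximate) context budget
        context_parts.append(entry)
        used += len(entry)
    context = "\n\n".join(context_parts)
    return (
        "Answer the question using only the context below. "
        "Cite sources by their [number]. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Hypothetical retrieved chunks, already ordered by similarity.
prompt = build_augmented_prompt(
    "What were our Q3 revenue goals?",
    ["Q3 goals: grow revenue in the EMEA region.", "Q4 goals: launch the new pricing tier."],
)
print(prompt)
```

Telling the model to admit when the context lacks the answer, and numbering chunks for citation, are two cheap ways to reinforce the grounding this step is meant to provide.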

Actionable Strategies for High-Fidelity Retrieval

A RAG system is only as good as the information it retrieves. Simple as that.

If your retriever pulls irrelevant, noisy, or fragmented context, your LLM will spit out an equally flawed answer—no matter how powerful the model is. Moving from a basic prototype to a production-grade system means getting obsessive about optimizing this retrieval step for maximum fidelity.

This isn't about tweaking default settings. It's about implementing deliberate, battle-tested strategies to ensure every piece of context passed to the generator is dense with relevant information and directly addresses the user's query.

Fortunately, there are several powerful techniques that can dramatically improve the precision of your retrieval pipeline.

Think of it like this: high-fidelity retrieval is about using the right tools to zero in on the exact piece of information you need from a vast library of knowledge.

Fine-Tuning Your Chunking Strategy

How you split your documents—your chunking strategy—is the absolute foundation of effective retrieval. A one-size-fits-all approach just doesn't cut it. You have to match your method to your content's structure and the kinds of questions you expect users to ask.

This is so critical that we’ve dedicated an entire article to exploring different chunking strategies for RAG.

Here are a few advanced methods to get you started:

- Semantic Chunking: Instead of splitting text by a fixed number of characters, this method uses an embedding model to find natural semantic boundaries. It groups sentences that are conceptually related, creating chunks that represent complete ideas. This often yields a substantial improvement in retrieval relevance.

- Agentic Chunking: This is a more dynamic, emerging approach where an LLM acts as a reasoning agent to decide how to break down a document. The agent might create overlapping chunks of different sizes—a short summary chunk, a medium paragraph chunk, and a larger multi-paragraph chunk—all referencing the same core information to provide context at multiple resolutions.

- Optimizing Chunk Size and Overlap: Finding the ideal chunk size is a balancing act. Small chunks offer precision but can miss broader context. Large chunks capture more context but might introduce noise. Experiment with different sizes and use a small overlap between chunks (say, 1-2 sentences) to ensure related ideas aren't severed by an arbitrary split.
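Here is a minimal sketch of the semantic chunking idea: split text into sentences, embed each one, and start a new chunk wherever similarity between consecutive sentences drops below a threshold. To keep it self-contained, `toy_embed` is a hypothetical bag-of-words stand-in for a real embedding model, and the 0.2 default threshold is an arbitrary starting point you would tune on your own data.

```python
import math
import re
from collections import Counter


def toy_embed(text: str) -> Counter:
    """Hypothetical stand-in for a real embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def semantic_chunks(text: str, threshold: float = 0.2) -> list[str]:
    """Group consecutive sentences; start a new chunk when similarity to the previous sentence drops."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    if not sentences:
        return []
    chunks, current = [], [sentences[0]]
    prev_vec = toy_embed(sentences[0])
    for sentence in sentences[1:]:
        vec = toy_embed(sentence)
        if cosine(prev_vec, vec) < threshold:
            chunks.append(" ".join(current))  # topic shift: close the current chunk
            current = []
        current.append(sentence)
        prev_vec = vec
    chunks.append(" ".join(current))
    return chunks


doc = ("Refunds are issued within 30 days. Refunds require a receipt. "
       "Our office opens at 9am. The office closes at 5pm.")
for chunk in semantic_chunks(doc, threshold=0.1):
    print(chunk)
```

Comparing each sentence only to its predecessor keeps the logic simple; some implementations compare against the running average of the current chunk instead, which is less sensitive to a single off-topic sentence.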

Enriching Your Data with Metadata

Vectors are great for capturing what text means, but they don't tell the whole story. Attaching rich metadata to each chunk before embedding it unlocks a new dimension of filtering and precision that pure vector search can't touch.

Think of metadata as descriptive tags on your data. You could tag each chunk with things like:

- Source Document: `{"source": "Q3_Financial_Report_2024.pdf"}`
- Date Created: `{"created_date": "2024-10-26"}`
- Chapter or Section: `{"section_title": "Risk Factors"}`
- Keywords: `{"keywords": ["revenue", "forecast", "growth"]}`
- Generated Summary: A short, LLM-generated summary of the chunk itself.

This lets you combine structured filtering with semantic search. You can first filter your documents based on metadata (e.g., "only search documents from Q3 2024") and then run the semantic vector search on that much smaller, more relevant subset. This is typically faster and cheaper, and it keeps out-of-scope documents out of the results entirely.

By combining metadata filtering with semantic search, you are essentially giving your RAG system two powerful ways to find information: one based on what the text is (its metadata) and another based on what it means (its vector).
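Here is a small sketch of that filter-then-search pattern, assuming each chunk is a dict holding its text, its vector, and a `metadata` dict like the examples above. The filter supports exact matches only; vector databases generally offer richer filter expressions (ranges, membership in a list), with syntax that varies by product.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


def filtered_search(chunks: list[dict], query_vector: list[float], filters: dict, k: int = 3) -> list[dict]:
    """Keep chunks whose metadata matches every filter exactly, then rank survivors by similarity."""
    candidates = [
        chunk for chunk in chunks
        if all(chunk["metadata"].get(key) == value for key, value in filters.items())
    ]
    candidates.sort(key=lambda chunk: cosine(chunk["vector"], query_vector), reverse=True)
    return candidates[:k]


# Hypothetical chunks with toy 2-D vectors.
chunks = [
    {"text": "Q3 revenue summary", "vector": [0.9, 0.1],
     "metadata": {"source": "Q3_Financial_Report_2024.pdf", "section_title": "Revenue"}},
    {"text": "Q3 risk factors", "vector": [0.2, 0.9],
     "metadata": {"source": "Q3_Financial_Report_2024.pdf", "section_title": "Risk Factors"}},
    {"text": "Q2 revenue summary", "vector": [0.95, 0.05],
     "metadata": {"source": "Q2_Financial_Report_2024.pdf", "section_title": "Revenue"}},
]
results = filtered_search(chunks, [1.0, 0.0], {"source": "Q3_Financial_Report_2024.pdf"}, k=2)
print([c["text"] for c in results])  # ['Q3 revenue summary', 'Q3 risk factors']
```

Note that the Q2 chunk is the closest vector overall, but the filter removes it before similarity is ever computed, which is exactly the behavior you want for a question scoped to Q3.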

Implementing Advanced Retrieval Architectures

Once your data is properly chunked and enriched, you can deploy more sophisticated architectural patterns to take retrieval quality to the next level. These methods go beyond a simple vector search to create a more robust and discerning system.

Hybrid Search

Hybrid search combines the strengths of old-school keyword search (like BM25) with modern semantic search. Keyword search is fantastic for finding specific terms, acronyms, or product codes that semantic search might gloss over. On the other hand, semantic search is brilliant at understanding user intent and finding conceptually related information.

By running both searches in parallel and intelligently fusing the results, you get a system that’s more resilient and comprehensive. It's truly the best of both worlds.
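One common way to fuse the two result lists is Reciprocal Rank Fusion (RRF). It works on ranks alone, so you never have to reconcile BM25 scores with cosine similarities. This is a sketch of standard RRF over document IDs; the `rrf_k=60` default is the constant commonly used in the literature, and the document IDs in the example are hypothetical.

```python
from collections import defaultdict


def reciprocal_rank_fusion(ranked_lists: list[list[str]], rrf_k: int = 60) -> list[str]:
    """Fuse several best-first rankings with Reciprocal Rank Fusion.

    Each document scores the sum of 1 / (rrf_k + rank) over every list it appears in
    (ranks start at 1). A larger rrf_k flattens the advantage of the very top ranks.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in ranked_lists:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (rrf_k + rank)
    return sorted(scores, key=scores.__getitem__, reverse=True)


keyword_results = ["sku-123", "doc_a", "doc_b"]   # e.g. from BM25
semantic_results = ["doc_a", "doc_c", "sku-123"]  # e.g. from vector search
print(reciprocal_rank_fusion([keyword_results, semantic_results]))
# ['doc_a', 'sku-123', 'doc_c', 'doc_b']
```

Documents that appear in both lists rise to the top, while an exact product-code match that only keyword search found still survives the fusion.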

Using Reranking Models

A standard retriever might return the top 20 potentially relevant chunks, but they aren't all equally useful. A reranking model, often a cross-encoder, is a secondary model whose only job is to take that initial list of candidates and reorder them by a more careful estimate of their relevance to the query.

This extra step is often very effective. Because the reranker only scores a short candidate list, it can afford to spend more computation on each query-chunk pair, pushing the best matches to the top. The context fed to the LLM improves, and the final answer usually improves with it.
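The two-stage pattern looks like this in outline. In practice `score_fn` would call a reranking model (for example, a cross-encoder that reads the query and chunk together); here `term_overlap_score` is a hypothetical, deliberately simple stand-in so the example runs without any model.

```python
import re
from typing import Callable


def rerank(query: str, candidates: list[str], score_fn: Callable[[str, str], float], top_n: int = 5) -> list[str]:
    """Re-score each (query, candidate) pair and keep the top_n highest-scoring candidates."""
    scored = [(score_fn(query, candidate), candidate) for candidate in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep retriever order
    return [candidate for _, candidate in scored[:top_n]]


def term_overlap_score(query: str, passage: str) -> float:
    """Hypothetical stand-in for a reranking model: fraction of query terms found in the passage."""
    q_terms = set(re.findall(r"[a-z0-9]+", query.lower()))
    p_terms = set(re.findall(r"[a-z0-9]+", passage.lower()))
    return len(q_terms & p_terms) / len(q_terms) if q_terms else 0.0


# Hypothetical first-stage results, in the order the retriever returned them.
candidates = [
    "Office hours are 9 to 5.",
    "Refunds are issued within 30 days of purchase.",
    "Refund requests need a receipt.",
]
print(rerank("How many days until refunds are issued?", candidates, term_overlap_score, top_n=2))
```

Keeping the scorer pluggable lets you swap the toy function for a real reranking model without touching the surrounding pipeline.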

This level of precision is especially critical in high-stakes fields. One RAG market forecast projects that the healthcare and life sciences sector will hold a 37.65% share of the market through 2035, driven by demand for AI that can analyze complex medical data accurately while protecting privacy. In demanding areas like these, tools that enable precise chunking and seamless data integration are essential.

Choosing the Right Tools for Your RAG Tech Stack

Building a production-ready RAG system is a bit like assembling a high-performance engine. Every single component has to be chosen carefully to work in perfect sync. Your RAG tech stack isn’t one big piece of software; it’s a collection of specialized tools, each playing a critical role in turning raw data into accurate, context-aware answers.

Making the right calls here will directly impact your system’s performance, how well it scales, and what it costs to run. Let’s break down the essential layers of a modern RAG stack and look at the top contenders in each category, so you can build a decision-making framework that fits your project.

Data Preparation and Chunking Tools

It all starts with your data. Before any embedding or retrieval can happen, your documents need to be cleaned up, parsed, and chunked intelligently. You could write custom scripts, of course, but dedicated tools can seriously speed up this foundational step and offer more sophisticated strategies right out of the box.

Tools like ChunkForge, for example, give you a visual studio for this exact job. You can experiment with different chunking methods—from simple fixed-size splits to complex semantic chunking—and see the results instantly. That level of control is vital for optimizing the quality of the "context snippets" your system will eventually find.

Selecting Your Embedding Model

The embedding model is the heart of your retrieval system. Think of it as the component responsible for converting text into a numerical format that captures its meaning. The quality of these embeddings has a massive downstream effect on the relevance of your search results.

You’ve got a few great options, each with its own pros and cons:

- Proprietary Models (OpenAI, Cohere): These services deliver state-of-the-art performance and are incredibly easy to plug into your system via an API. OpenAI's `text-embedding-3` series is a popular go-to, known for its strong benchmark performance. The trade-off? Cost can add up at scale, and you have to be comfortable sending your data to a third-party service.

- Open-Source Models (Sentence-Transformers, BGE): Models hosted on platforms like Hugging Face give you total control. You run them on your own infrastructure, which is great for data privacy and can lower costs. This route requires more technical lift to set up but gives you maximum flexibility.

Choosing a Vector Database

Your vector database is the specialized library that stores and indexes all those embeddings for lightning-fast retrieval. This is a critical infrastructure decision that dictates how well your system will scale and how much work it takes to manage.

A vector database is more than just storage; it’s a high-speed search engine for meaning. The choice you make here will define how quickly and efficiently your RAG system can find relevant context, especially as your knowledge base grows from thousands to billions of documents.

With that in mind, let’s compare some of the most popular options available today.

Comparing Popular Vector Database Options

Each of the leading vector databases is built with a different user or use case in mind. Whether you need a fully managed serverless option or a highly scalable open-source solution, there's a good fit for your project.

| Database | Best For | Key Features | Hosting Model |
|---|---|---|---|
| Pinecone | Teams needing a fully managed, high-performance solution with minimal setup. | Easy-to-use API, low-latency queries, serverless architecture. | Managed Cloud Service |
| Weaviate | Projects requiring flexible deployment and built-in hybrid search capabilities. | Open-source, keyword/vector hybrid search, GraphQL API. | Self-Hosted or Managed |
| Chroma | Developers and researchers building local prototypes or smaller-scale applications. | Open-source, runs in-memory or on-disk, simple Python integration. | Self-Hosted (Primarily) |
| Milvus | Enterprises needing a highly scalable, open-source solution for massive datasets. | Distributed architecture, high availability, advanced indexing options. | Self-Hosted or Managed |

This is just a starting point, but it highlights how different platforms like Pinecone, Weaviate, Chroma, and Milvus cater to very different needs, from quick prototypes to massive, enterprise-grade deployments.

Orchestration Frameworks That Tie It All Together

Finally, you need a framework to connect all these pieces into a working pipeline. Orchestration libraries like LangChain and LlamaIndex provide the "plumbing" for your RAG system. They offer pre-built components for data loading, embedding, retrieval, and generation, saving you from writing tons of boilerplate code.

LangChain is a powerful, general-purpose framework for building all kinds of LLM applications, giving you immense flexibility. LlamaIndex, on the other hand, is laser-focused on RAG, offering deep features for indexing and advanced retrieval strategies. Some developers also turn to other powerful tools; you can explore our guide on building with the Haystack search engine to see how different frameworks approach these challenges.

At the end of the day, the "best" tech stack is the one that aligns with your team's skills, budget, and performance goals. By carefully evaluating your options at each layer, you can assemble a robust and efficient pipeline capable of delivering truly intelligent and reliable results.

How to Evaluate and Monitor Your RAG System

Launching your RAG pipeline is a huge step, but it’s just the beginning. A system that works perfectly today can start to drift tomorrow as your data changes or users find new ways to interact with it. To build a RAG application that lasts, you need a rock-solid plan for evaluation and constant monitoring.

Without it, you’re flying blind. You have no real way of knowing if your retriever is pulling the right context, if the generator is hallucinating, or if subtle changes in your data are slowly degrading performance. Good evaluation is what separates a prototype from a production-ready RAG system.

Defining Your Key Evaluation Metrics

A great RAG system needs to nail two things: finding the right information and then using it to write a good answer. That means you need to track metrics for both the retriever and the generator.

Retrieval Metrics

These metrics tell you how good your system is at finding the right documents from your knowledge base.

- Hit Rate: This one is simple but powerful. It asks: did the retrieved documents actually contain the information needed to answer the question? If the answer appears anywhere in the top `k` chunks you retrieved, you have a "hit."

- Mean Reciprocal Rank (MRR): MRR goes a step further. It cares not just whether the right information was found, but where it ranked. For each query, take 1/rank of the first relevant chunk (0 if none was retrieved), then average across all queries. A high MRR means the most relevant documents consistently show up at the top of the list, which is exactly what you want.
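Both retrieval metrics are straightforward to compute once you know which chunks contain each answer. Here is a minimal sketch, assuming you have chunk IDs for both the retrieved results and the ground-truth labels; the `hit_rate_and_mrr` name and the example IDs are hypothetical.

```python
def hit_rate_and_mrr(retrieved: list[list[str]], relevant: list[set[str]], k: int = 5) -> tuple[float, float]:
    """Compute hit rate@k and MRR@k over a batch of queries.

    retrieved[i] is the best-first list of chunk IDs returned for query i;
    relevant[i] is the set of chunk IDs that contain the answer to query i.
    """
    if len(retrieved) != len(relevant) or not retrieved:
        raise ValueError("need one relevance set per query, and at least one query")
    hits, reciprocal_rank_sum = 0, 0.0
    for results, gold in zip(retrieved, relevant):
        for rank, chunk_id in enumerate(results[:k], start=1):
            if chunk_id in gold:
                hits += 1
                reciprocal_rank_sum += 1.0 / rank  # only the first relevant chunk counts
                break
    n = len(retrieved)
    return hits / n, reciprocal_rank_sum / n


retrieved = [["c1", "c2", "c3"], ["c4", "c5", "c6"], ["c7", "c8", "c9"]]
relevant = [{"c1"}, {"c6"}, {"c0"}]  # the third query's answer was never retrieved
hit_rate, mrr = hit_rate_and_mrr(retrieved, relevant, k=3)
print(f"hit rate = {hit_rate:.3f}, MRR = {mrr:.3f}")  # hit rate = 0.667, MRR = 0.444
```

The second query counts as a hit, but finding its answer at rank 3 contributes only 1/3 to MRR, which is how MRR rewards putting the right chunk first.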

Generation Metrics

Once you’ve got the context, you need to measure the quality of the final answer the LLM produces.

- Faithfulness: Does the answer actually stick to the facts in the provided context? This is your main defense against hallucinations. A high faithfulness score means your model isn't just making things up or contradicting the source material.

- Answer Relevancy: How well does the final output address the user’s original question? An answer can be factually correct based on the context but still completely miss the point of what the user was asking. This metric keeps your system on track.

Think of it this way: retrieval metrics ensure you've found the right ingredients, while generation metrics confirm you've baked a good cake. You absolutely need both for a successful outcome.

Establishing an Evaluation Framework

To actually track these metrics, you need a structured process. This usually starts with building a "golden dataset"—a hand-curated list of questions and ideal answers that represent the kinds of things you expect users to ask.

Your evaluation pipeline runs these questions through your RAG system, then automatically scores the outputs against your golden dataset using the metrics we just covered. This gives you a repeatable, objective benchmark. Now, when you experiment with a new chunking strategy or a different embedding model, you can rerun the evaluation and get hard data on whether your changes made things better or worse.

Tools like Ragas, ARES, and TruLens were built for this. They provide the scaffolding to score faithfulness, answer relevancy, and retrieval quality, turning what could be a messy evaluation process into a streamlined workflow. This lets you graduate from subjective "it feels better" assessments to data-driven optimization.

The final, non-negotiable step is ongoing monitoring in production. By logging user queries, the context you retrieve, and the answers you generate, you can keep a constant pulse on performance. This helps you spot problems like data drift or new failure patterns before they affect too many users. It creates a feedback loop that transforms your RAG system from a static tool into one that learns and improves over time.
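Here is a minimal sketch of that logging, assuming a local JSON Lines file is an acceptable sink (in production you would more likely ship these records to your observability stack). `empty_retrieval_rate` is one hypothetical example of a cheap health signal you can compute from the log; a rising value suggests users are asking about things your knowledge base no longer covers.

```python
import json
import time
import uuid
from pathlib import Path


def log_interaction(log_path: Path, query: str, retrieved_ids: list[str], answer: str) -> dict:
    """Append one RAG interaction as a JSON line so it can be replayed or scored offline later."""
    record = {
        "id": str(uuid.uuid4()),
        "timestamp": time.time(),
        "query": query,
        "retrieved_ids": retrieved_ids,
        "answer": answer,
    }
    with log_path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record, ensure_ascii=False) + "\n")
    return record


def empty_retrieval_rate(log_path: Path) -> float:
    """Share of logged queries that retrieved no chunks at all."""
    lines = log_path.read_text(encoding="utf-8").splitlines()
    records = [json.loads(line) for line in lines if line.strip()]
    if not records:
        return 0.0
    return sum(1 for r in records if not r["retrieved_ids"]) / len(records)
```

Because each record keeps the query, the retrieved IDs, and the answer together, the same log can later be fed back through your evaluation pipeline to score faithfulness and relevancy on real traffic.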

Security and Privacy in RAG Applications

When your RAG system starts handling sensitive or proprietary data, security stops being a feature—it becomes the very foundation of trust. Every time you send data to a third-party API for embedding or generation, you're opening a potential door for data leaks. Your confidential information is literally traveling outside your own secure walls.

This means you have to think about architecting a secure pipeline from end to end. It's about safeguarding data both when it's moving and when it's sitting still. Every single step, from the moment a document is ingested to the final answer the LLM produces, needs to happen in a controlled environment.

The Strategic Value of Self-Hosting

For organizations that need maximum control over their data, self-hosting the core components of a RAG system is the gold standard. By deploying your own embedding models, vector database, and even the LLM on your own servers or private cloud, you ensure that sensitive documents never leave infrastructure you control.

Self-hosting transforms your data privacy model from relying on third-party promises to enforcing your own security policies. It removes third-party services from the path your data travels, which eliminates a major source of external data exposure.

This approach isn't just a good idea; it's often a requirement. For businesses in tightly regulated fields like healthcare, finance, or legal services, compliance mandates strict data residency and privacy controls that make self-hosting a necessity.

Sure, managed services offer convenience, but they almost always come with a security trade-off. Deciding where you stand on that balance is a critical architectural choice. Tools designed for self-hosting, like many open-source models and frameworks, give you the power to build a robust RAG system without ever compromising your security posture. To see how central data control is to our own philosophy, you can review our commitment to protecting user data and privacy.

Ultimately, making an informed choice between convenience and control lets you build a system that aligns perfectly with your organization's security and compliance needs.

Common Questions About RAG

Even with a solid plan, you're bound to hit a few questions when you start building a RAG system. Let's tackle some of the most common ones we hear from engineers and product teams.

RAG vs. Fine-Tuning: Which One Do I Need?

This is a classic. The short answer is: they’re not replacements for each other. They’re different tools for different jobs, and they often work best together.

Fine-tuning is about changing the model's behavior. You’re teaching it a new skill, a specific tone of voice, or how to understand niche language, like legal or medical terminology.

RAG, on the other hand, is about giving the model fresh information at the moment it needs it. It injects real-time, external facts into the prompt without changing the model itself.

Think of it this way: you might fine-tune a model to think like a lawyer, then use RAG to hand it the specific details of a new case file. Combine them, and you get a model that not only understands legal jargon but can also reason about up-to-the-minute information.

The Bottom Line: Use fine-tuning to teach a model how to think and RAG to give it the right information to think about.

How Do I Deal With Complex Documents like PDFs and Tables?

Ah, the messy reality of real-world data. If you’ve ever tried to chunk a PDF full of tables, charts, and weird layouts, you know that standard methods fall apart fast. You end up with scrambled, useless context.

This isn’t a dead end—you just need a smarter approach to parsing your documents before they ever get chunked:

- Intelligent Parsers: Don't just extract raw text. Use tools that understand a document's structure. You need something that can correctly pull text from tables, identify headers, and follow column layouts.

- Chunking by Section: Instead of splitting a document every 500 characters, break it apart based on its logical structure—chapters, headings, or sections. This keeps related ideas together.

- Multi-Modal Models: If your documents contain important images or diagrams, a text-only approach won't cut it. Look into multi-modal models that can create embeddings from both text and visuals to capture the complete picture.
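Chunking by section is easy once a parser has turned the document into text with recognizable headings. This sketch assumes Markdown-style `#` headings, a common output format for document parsers, and attaches each section's title as `section_title` metadata, matching the metadata example earlier. The function name is hypothetical, and it would misread a `#` line inside a code block, so treat it as a starting point.

```python
import re

HEADING = re.compile(r"^(#{1,6})\s+(.*)$")  # Markdown ATX headings: "# Title" through "###### Title"


def chunk_by_section(markdown_text: str) -> list[dict]:
    """Split a Markdown-style document at headings, keeping each heading with the text beneath it."""
    sections = []
    title, lines = "Preamble", []  # text before the first heading gets a placeholder title
    for line in markdown_text.splitlines():
        match = HEADING.match(line)
        if match:
            if any(ln.strip() for ln in lines):  # skip sections with no body text
                sections.append({"section_title": title, "text": "\n".join(lines).strip()})
            title, lines = match.group(2).strip(), []
        else:
            lines.append(line)
    if any(ln.strip() for ln in lines):
        sections.append({"section_title": title, "text": "\n".join(lines).strip()})
    return sections


doc = "Intro text.\n# Revenue\nQ3 revenue details.\n## Risk Factors\nChurn risk.\n# Empty\n"
for section in chunk_by_section(doc):
    print(section)
```

Sections that are still too long for your embedding model can then be passed through a size-based splitter, so every chunk inherits its section's title as metadata.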

Is Running a RAG System Expensive?

It depends entirely on the path you choose.

Using managed APIs for your embedding model and generator is definitely the quickest way to get started. But as your query volume scales, those per-token costs can add up surprisingly fast. For high-throughput applications, this route can become a significant operational expense.

The alternative is a self-hosted, open-source stack. This approach requires more upfront work in setup and ongoing maintenance, but your costs become much more predictable and are often dramatically lower in the long run. You're trading convenience for control and cost-effectiveness.

Ready to build a RAG pipeline that actually performs? ChunkForge gives you a powerful visual studio to prepare your documents with the precision they deserve. Experiment with advanced chunking strategies, enrich your data with deep metadata, and export production-ready assets in minutes. Start your free trial today at https://chunkforge.com and see the difference for yourself.