What Is Data Parsing And How It Enables Better RAG Systems

Learn what data parsing is and how it transforms raw data into a structured format, enabling AI and RAG systems to deliver more accurate and reliable results.

At its core, data parsing is the art of taking messy, unstructured information and transforming it into a clean, organized format. This conversion is what makes data actually usable for software, databases, and most importantly, for enabling superior retrieval in today's Retrieval-Augmented Generation (RAG) systems.

Translating Raw Data Into Retrieval-Ready Knowledge

Think of a data parser as a skilled translator for your data. A tangled PDF report, a sprawling website, or a dense legal contract speaks a language machines just don't understand natively. The parser steps in, breaking down that chaotic information and reassembling it into a logical structure that a RAG system can use to find precise information.

This translation is the critical first step in building a high-performance RAG pipeline. For these systems, the quality of parsing isn't just a nice-to-have; it's a non-negotiable requirement. A RAG model's ability to retrieve the right information and generate a smart, context-aware answer depends heavily on how well its knowledge base was parsed and structured.

Data parsing is the bridge between raw, human-readable documents and structured, machine-readable knowledge. Without it, your RAG system is effectively trying to find a needle in a haystack of glued-together pages.

To see just how dramatic this change is, let's look at a simple before-and-after.

Data Before and After Parsing

| Data State | Example | Machine Readability |
|---|---|---|
| Raw (Before) | Invoice #1234, Due: 2024-12-01, Total: $599.99 | Very Low |
| Parsed (After) | {"invoice_id": 1234, "due_date": "2024-12-01", "total": 599.99} | High |

The raw string is just a jumble of characters to a computer. After parsing, each piece of information is clearly labeled and structured, making it instantly queryable and useful for targeted retrieval.
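To make the before-and-after concrete, here is a minimal sketch that parses the raw invoice string from the table into the structured record. It assumes the exact "Invoice #..., Due: ..., Total: $..." layout shown; the pattern and the `parse_invoice` name are illustrative, and real invoices would need far more flexible rules.

```python
import json
import re

# Hypothetical pattern for the exact layout in the example row; named groups
# become the field labels in the parsed record.
INVOICE_PATTERN = re.compile(
    r"Invoice #(?P<invoice_id>\d+),\s*"
    r"Due:\s*(?P<due_date>\d{4}-\d{2}-\d{2}),\s*"
    r"Total:\s*\$(?P<total>[\d,]+\.\d{2})"
)


def parse_invoice(raw: str) -> dict:
    """Turn the raw invoice string into a labeled, typed record."""
    match = INVOICE_PATTERN.search(raw)
    if match is None:
        raise ValueError(f"Unrecognized invoice format: {raw!r}")
    return {
        "invoice_id": int(match["invoice_id"]),
        "due_date": match["due_date"],  # kept as an ISO 8601 date string
        "total": float(match["total"].replace(",", "")),
    }


record = parse_invoice("Invoice #1234, Due: 2024-12-01, Total: $599.99")
print(json.dumps(record))
# {"invoice_id": 1234, "due_date": "2024-12-01", "total": 599.99}
```

Using `float` for money keeps the demo short; production systems usually prefer `decimal.Decimal` to avoid rounding surprises.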

The Bedrock of RAG Performance

This idea isn't new; it has deep roots in the history of computing. Herman Hollerith's tabulating machine, developed in the 1880s and first used for the 1890 US Census, "parsed" holes in punch cards to automate the count, dramatically shortening a tabulation that had previously taken years by hand.

Today, the goal is the same, but the applications are worlds apart. Great parsing directly boosts RAG performance by:

- Improving Retrieval Accuracy: Clean, well-defined data helps a RAG system pinpoint the exact chunk of information needed to answer a query.

- Reducing AI Hallucinations: When an AI gets precise, unambiguous context from its retrieval step, it's far less likely to make things up or "hallucinate" incorrect answers.

- Enabling Deeper Insights: Parsing can pull out not just text, but also tables, lists, and the hidden relationships between different concepts, creating a much richer knowledge source for retrieval.

This whole process is a cornerstone of what we now call intelligent document processing—a field dedicated to extracting truly meaningful information from complex documents. By getting data parsing right, you're building a reliable foundation for smarter, more accurate, and more powerful RAG applications.

Understanding Structure Versus Meaning In Data

Great data parsing isn't a single, brute-force action. It's a two-step dance, much like how our own brains make sense of the world. First, we see the structure of something, and then we understand the meaning held within it.

For a Retrieval-Augmented Generation (RAG) system, nailing both parts of this process is the difference between a vague keyword match and a precise, context-rich retrieval that leads to a helpful answer.

The first layer is syntactic parsing. Think of it as creating an architectural blueprint for a document. This is all about identifying the grammar and layout—what’s a headline, what’s a paragraph, and what's a list item. It’s about recognizing the rules of the format, not the content itself. This ensures the chunks fed into a vector database are coherent and self-contained.

The Foundation of Syntactic Parsing

Syntactic parsing is what gives us clean, organized data to work with. It takes a raw mess of text or code and organizes it into a neat hierarchy, often called a parse tree. The ideas behind this aren't new; they have deep roots in computer science.

As far back as 1961, computer scientists were already distinguishing between simple state-driven parsers and more powerful stack-driven parsers, which use a stack to keep track of nested grammar rules. This was a fundamental leap that set the stage for how modern systems analyze everything from programming languages to PDF layouts.
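To see why a stack matters, here is a minimal sketch that nests markdown-style headings (`#`, `##`, ...) into a parse tree. The `#`-prefixed input format and the `Node` class are assumptions for illustration. A pure state-driven parser only knows its current state, so it cannot remember arbitrarily deep nesting; the stack below records every section that is still "open".

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    title: str
    level: int
    children: list["Node"] = field(default_factory=list)
    text: list[str] = field(default_factory=list)


def build_tree(lines: list[str]) -> Node:
    """Nest '#'-style headings into a tree using a stack of open sections."""
    root = Node(title="ROOT", level=0)
    stack = [root]  # top of the stack = the section we are currently inside
    for line in lines:
        stripped = line.strip()
        if not stripped:
            continue
        if stripped.startswith("#"):
            level = len(stripped) - len(stripped.lstrip("#"))
            node = Node(title=stripped[level:].strip(), level=level)
            # Pop until the top is a shallower section: that node is the parent.
            while stack[-1].level >= level:
                stack.pop()
            stack[-1].children.append(node)
            stack.append(node)
        else:
            # Body text belongs to the innermost open section.
            stack[-1].text.append(stripped)
    return root


doc = [
    "# Report",
    "Intro paragraph.",
    "## Revenue",
    "Revenue grew.",
    "## Costs",
    "Costs fell.",
    "# Appendix",
]
tree = build_tree(doc)
print([c.title for c in tree.children])              # ['Report', 'Appendix']
print([c.title for c in tree.children[0].children])  # ['Revenue', 'Costs']
```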

This structural map provides the well-formed chunks of information a RAG system needs to get started. But just knowing the layout isn't enough to enable truly intelligent retrieval.

Diving Deeper with Semantic Parsing

The second, more advanced layer is semantic parsing. If syntactic parsing built the blueprint, semantic parsing is what furnishes the rooms with meaning. It goes beyond just identifying a "paragraph" and starts to understand what the paragraph is actually about.

Semantic parsing is the bridge from structure to insight. It enriches data by identifying the 'who, what, and where'—recognizing named entities, concepts, and the relationships connecting them within the text.

For a RAG system, this enrichment is absolutely critical. It allows the retrieval model to find information based on its conceptual understanding, not just because two keywords happened to be near each other. This is the key to answering nuanced questions.

This is where the real magic happens for retrieval:

- Entity Recognition: It flags names of people, companies, locations, and dates, turning them into structured metadata for filtering.

- Relationship Extraction: It connects the dots, understanding that "Apple Inc., headquartered in Cupertino," links a specific company to a specific place.

- Intent Analysis: It can even start to figure out the purpose or sentiment of a sentence or an entire section.
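A production system would usually rely on a trained relation-extraction model, but a pattern-based sketch shows the shape of the output: (subject, relation, object) triples that can be stored as chunk metadata. The single "headquartered in" pattern and the `extract_hq_relations` name are hypothetical, and the pattern is deliberately brittle.

```python
import re

# Hypothetical pattern: "<Capitalized Name>, headquartered in <Capitalized Place>"
HQ_PATTERN = re.compile(
    r"(?P<org>[A-Z][\w.&]*(?:\s+[A-Z][\w.&]*)*),\s+headquartered in\s+"
    r"(?P<place>[A-Z]\w*(?:\s+[A-Z]\w*)*)"
)


def extract_hq_relations(text: str) -> list[tuple[str, str, str]]:
    """Return (subject, relation, object) triples for one hand-written pattern."""
    return [
        (m["org"], "headquartered_in", m["place"])
        for m in HQ_PATTERN.finditer(text)
    ]


sentence = "Apple Inc., headquartered in Cupertino, designs consumer electronics."
print(extract_hq_relations(sentence))
# [('Apple Inc.', 'headquartered_in', 'Cupertino')]
```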

By combining syntactic and semantic analysis, we turn a flat document into a dynamic source of knowledge. Semantic chunking, a key technique that leans heavily on this deeper comprehension, is a good example of this in action. This dual approach ensures the chunks we feed to a vector database are not just well-formed, but also packed with the rich context an LLM needs for intelligent retrieval and generation.

How Data Parsing Actually Works

So, how do we get from a messy, complex document to the clean, AI-ready data needed for a RAG system? The quality of this breakdown is everything—it directly controls how accurately your system can find information later. Let's pull back the curtain on the core techniques that turn raw files into a structured knowledge base.

The journey often starts with one of the most powerful tools in any developer's kit: Regular Expressions, or Regex.

Think of Regex as a super-powered "find and replace" tool. It uses a special, compact syntax to define a search pattern, letting you zero in on and pull out specific pieces of information with incredible precision.

For instance, maybe your RAG system needs to find every invoice number formatted like INV-2024-XXXX. A simple Regex pattern can rip through thousands of documents and snatch only those strings, leaving everything else behind. It's a game-changer for pulling structured bits of data from unstructured text.
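Here is a minimal sketch of that invoice-number search, assuming "XXXX" means exactly four digits (for example, INV-2024-0042). Compiling the pattern once and reusing it keeps the scan cheap across many documents; the sample documents are made up for illustration.

```python
import re

# Assumption: "XXXX" is exactly four digits. \b prevents partial matches
# inside longer tokens.
INVOICE_ID = re.compile(r"\bINV-2024-\d{4}\b")

documents = [
    "Please settle INV-2024-0042 by Friday. Ref: PO-7781.",
    "Duplicate of INV-2024-0042; see also INV-2024-1187.",
    "Draft INV-2024-12 is malformed and should be ignored.",
]

found = [match for doc in documents for match in INVOICE_ID.findall(doc)]
print(found)  # ['INV-2024-0042', 'INV-2024-0042', 'INV-2024-1187']
```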

Navigating With Document Object Models

While Regex is a wizard with specific text patterns, it gets lost when trying to understand the overall layout of a structured file like an HTML webpage or an XML document. That's where DOM parsing steps in.

The Document Object Model (DOM) treats a document not as a flat wall of text, but as a tree. Every element—a heading, a paragraph, a table—is a "node" on that tree, connected in a clear hierarchy.

Parsing the DOM is like navigating a family tree. You can tell your program to move from a parent node to a specific child node to grab exactly what you need. For example, you could instruct a parser to "find the second paragraph inside the section with the ID 'summary'." This is way more reliable than Regex for web data because it understands the document's structure, so it won't break if someone tweaks the wording.
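The "second paragraph inside the section with the ID 'summary'" instruction can be sketched with Python's built-in `xml.etree.ElementTree`, which supports a limited XPath syntax. This keeps the example dependency-free, but ElementTree is a strict XML parser and only accepts well-formed markup; for messy real-world HTML, a lenient parser such as Beautiful Soup is the usual choice. The sample page is made up.

```python
import xml.etree.ElementTree as ET

# Illustrative, well-formed (XHTML-style) page.
PAGE = """
<html>
  <body>
    <section id="intro"><p>Welcome.</p></section>
    <section id="summary">
      <h2>Summary</h2>
      <p>First paragraph of the summary.</p>
      <p>Second paragraph of the summary.</p>
    </section>
  </body>
</html>
"""

root = ET.fromstring(PAGE.strip())

# Navigate the tree: locate the <section> whose id is "summary", then take
# its second <p> child (index 1), regardless of the wording inside it.
summary = root.find(".//section[@id='summary']")
paragraphs = summary.findall("p")
print(paragraphs[1].text)  # Second paragraph of the summary.
```

Because the lookup is expressed in terms of the tree (section, id, child position), rewording a paragraph does not break it; restructuring the page would.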

DOM parsing gives a RAG system spatial awareness within a document. It doesn't just see a wall of text; it sees headlines, lists, and tables, allowing it to preserve the original context and hierarchy of the information.

This structural understanding is critical for creating clean, logical data chunks that make sense in a vector database and improve retrieval relevance.

Understanding Language With NLP

But what about truly unstructured text, like the dense paragraphs in a legal contract or a scientific paper? For that, we need to bring in the heavy hitters.

This is the world of Natural Language Processing (NLP), a field of AI dedicated to helping computers make sense of human language. NLP techniques are what give us the deep, semantic comprehension needed for top-tier retrieval.

Two foundational NLP techniques for parsing are:

- Tokenization: This is the first step, breaking down long sentences into smaller units called tokens (usually words or pieces of words). It’s like deconstructing a sentence into its individual Lego bricks before you can figure out what it builds.

- Named Entity Recognition (NER): Think of NER as a semantic highlighter. It scans the text and automatically identifies and categorizes key entities—names of people, organizations, locations, dates, and even money. This adds a rich layer of metadata, making the content far more searchable and filterable during retrieval.
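Libraries like spaCy and NLTK provide trained tokenizers and NER models; the sketch below uses only hand-written rules so it runs with the standard library. The tokenizer is a naive word-level splitter (LLM tokenizers instead split text into subword pieces), and `ENTITY_RULES` is a hypothetical stand-in for a trained model. It also ignores overlapping matches between rules.

```python
import re


def tokenize(text: str) -> list[str]:
    """Split text into word and punctuation tokens (naive, rule-based)."""
    return re.findall(r"\w+|[^\w\s]", text)


# Hypothetical hand-written rules standing in for a trained NER model.
ENTITY_RULES = [
    ("ORG", re.compile(r"\bAcme Corp\b")),             # tiny gazetteer entry
    ("MONEY", re.compile(r"\$\d[\d,]*(?:\.\d{2})?")),  # e.g. $1,200.50
    ("DATE", re.compile(r"\b\d{4}-\d{2}-\d{2}\b")),    # ISO dates like 2024-03-15
]


def tag_entities(text: str) -> list[dict]:
    """Return labeled entity spans with character offsets, ordered by position."""
    entities = [
        {"text": m.group(), "label": label, "start": m.start(), "end": m.end()}
        for label, pattern in ENTITY_RULES
        for m in pattern.finditer(text)
    ]
    return sorted(entities, key=lambda e: e["start"])


text = "Acme Corp paid $1,200.50 on 2024-03-15."
print(tokenize("Hello, world!"))  # ['Hello', ',', 'world', '!']
print([(e["text"], e["label"]) for e in tag_entities(text)])
# [('Acme Corp', 'ORG'), ('$1,200.50', 'MONEY'), ('2024-03-15', 'DATE')]
```

The character offsets are what make entities usable as metadata: they let you highlight the span in the source or filter chunks by label at retrieval time.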

By using these NLP methods, we go way beyond just cutting and pasting text. We start building a rich, interconnected map of the concepts inside a document. For a RAG system, this means it can retrieve information based on meaning and context, not just simple keyword matches. And that leads to dramatically better answers.

Building a High-Performance RAG Pipeline

Knowing the theory behind data parsing is one thing. Actually putting it to work to build a high-performance Retrieval-Augmented Generation (RAG) pipeline is a whole other beast. The real goal is to take something messy—like a dense, 100-page financial report—and turn it into a perfectly structured format that enables precise retrieval.

Frankly, this meticulous prep work is what separates a frustrating, inaccurate RAG system from one you can actually rely on.

Let's break the process down into three key stages. Each one builds on the last, creating rich, context-aware data that will make your RAG system's retrieval component shine.

Stage 1: Text Extraction

The journey always starts with the most fundamental step: text extraction. Raw documents, especially PDFs, are a jumble of text, images, tables, and complex layouts. The first challenge is just to get the raw text out of its container without losing information or ending up with a bunch of garbled characters.

You can't cut corners here. A mistake at this stage, like mangled text or a missed section, poisons the entire pipeline: it cascades all the way down and corrupts the final result. Using high-quality extraction tools is non-negotiable if you want to preserve the document's integrity from the get-go.

Stage 2: Intelligent Chunking

Once you have the raw text, you move on to intelligent chunking. This is a direct application of syntactic parsing, where we break the document down into smaller, more logical pieces. The aim isn't just to create random blocks of text, but to produce chunks that represent complete, contextually whole ideas.

The quality of your chunks directly determines the quality of your RAG system's retrieval. Poor chunking leads to fragmented context, forcing the LLM to guess. Intelligent chunking provides complete, coherent thoughts for the model to work with.

For instance, instead of clumsily splitting a paragraph in half, a smart chunking strategy would recognize section headings, lists, and tables as natural breaking points. This ensures each piece makes sense on its own, which is absolutely critical for creating meaningful vector embeddings later. This step often relies on a mix of techniques, from simple rule-based methods to more sophisticated NLP models.
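The structure-aware strategy described above can be sketched in a few lines. This assumes the extracted text marks headings with markdown-style `#` prefixes and separates paragraphs with blank lines; `max_chars` is a hypothetical size limit (real pipelines usually budget in tokens). Each chunk keeps its nearest heading as context.

```python
import re
from dataclasses import dataclass


@dataclass
class Chunk:
    heading: str  # nearest preceding heading, kept as context for embedding
    text: str


def chunk_by_structure(document: str, max_chars: int = 500) -> list[Chunk]:
    """Start a new chunk at every heading, then pack whole paragraphs
    (blank-line separated blocks) into chunks of up to max_chars.

    Paragraphs are never cut in half; a single paragraph longer than
    max_chars becomes its own oversized chunk.
    """
    chunks: list[Chunk] = []
    heading = ""
    buffer: list[str] = []

    def flush() -> None:
        # Emit buffered paragraphs under the heading currently in effect.
        if buffer:
            chunks.append(Chunk(heading=heading, text="\n\n".join(buffer)))
            buffer.clear()

    for block in re.split(r"\n\s*\n", document.strip()):
        first_line, _, rest = block.strip().partition("\n")
        if re.match(r"#{1,6}\s", first_line):
            flush()  # a heading is a natural boundary: close the previous chunk
            heading = first_line.lstrip("#").strip()
            block = rest
        block = block.strip()
        if not block:
            continue
        if buffer and len("\n\n".join(buffer + [block])) > max_chars:
            flush()  # adding this paragraph would overflow the chunk
        buffer.append(block)
    flush()
    return chunks


doc = """# Revenue

Revenue paragraph one.

Revenue paragraph two.

# Costs

Costs paragraph."""

for chunk in chunk_by_structure(doc):
    print(chunk.heading, "|", chunk.text.replace("\n\n", " / "))
# Revenue | Revenue paragraph one. / Revenue paragraph two.
# Costs | Costs paragraph.
```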

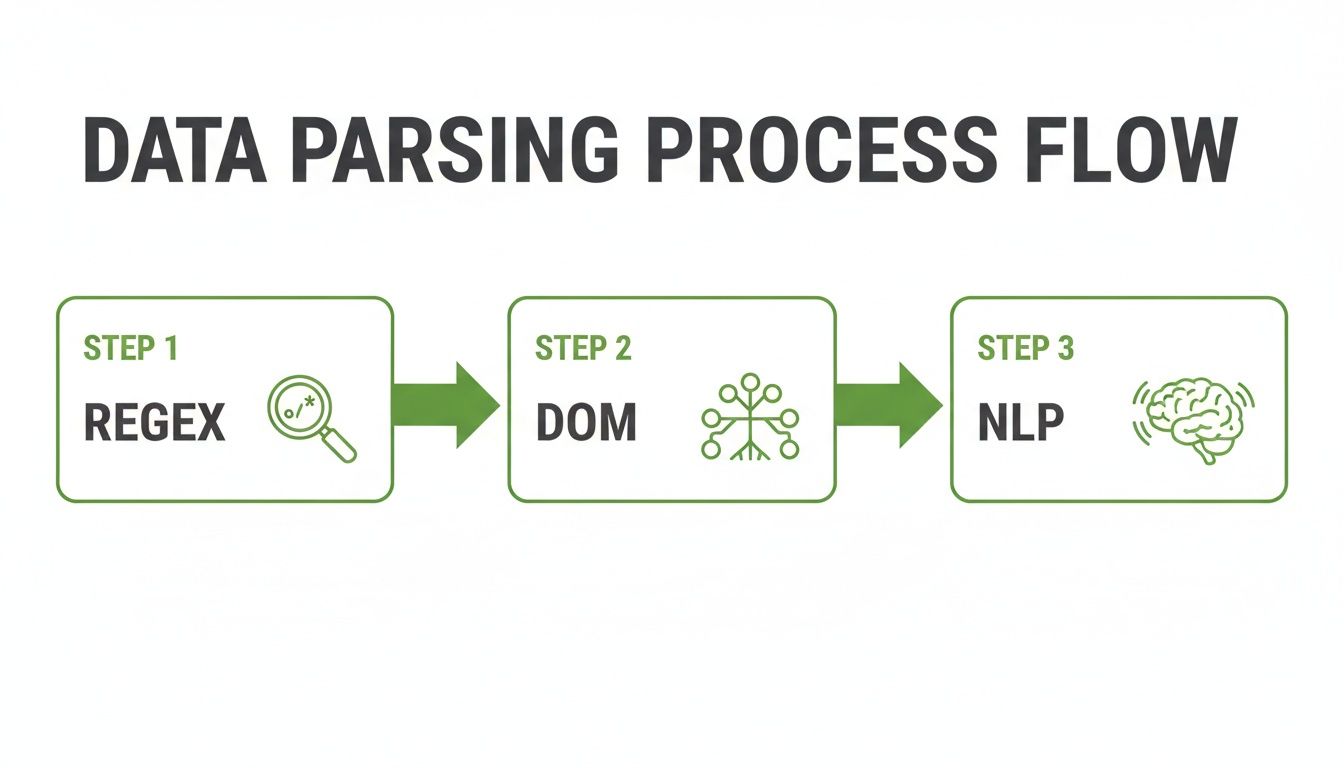

As the diagram above illustrates, different parsing methods are often layered together in a processing pipeline to achieve this.

This flow shows how you might start with simple pattern matching (Regex), move to structural analysis (DOM), and finish with deep comprehension (NLP).

Stage 3: Semantic Enrichment

The final, and most advanced, stage is semantic enrichment. This is where we go beyond just structure and start layering crucial metadata onto each chunk. We're essentially transforming a simple piece of text into a rich, queryable data object. This is where semantic parsing techniques come in to identify key entities and the relationships between them.

This stage is all about adding the "who, what, and where" to your data to supercharge retrieval:

- Entity Recognition: Automatically flagging names of companies, products, people, and locations, which can be used for metadata filtering.

- Keyword Extraction: Pinpointing the most important terms and concepts within each chunk.

- Summarization: Generating a quick, concise summary for each chunk to give a high-level overview or be embedded separately for improved search.
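Here is a minimal enrichment sketch that turns a chunk into a small data object. The keyword extractor is a naive term-frequency count with a tiny stopword list (a stand-in for TF-IDF or model-based extraction), and the "summary" is simply the first sentence (a stand-in for an LLM-generated summary). The `enrich_chunk` name and its output fields are hypothetical.

```python
import re
from collections import Counter

# Tiny illustrative stopword list; real pipelines use a fuller one (e.g. NLTK's).
STOPWORDS = {
    "a", "an", "and", "are", "as", "by", "for", "in", "is", "its", "of",
    "on", "that", "the", "this", "to", "was", "were", "with",
}


def enrich_chunk(text: str, top_k: int = 3) -> dict:
    """Attach naive keyword and summary metadata to a chunk of text."""
    words = re.findall(r"[a-z][a-z'-]+", text.lower())
    counts = Counter(w for w in words if w not in STOPWORDS)
    # most_common breaks ties by first occurrence
    keywords = [word for word, _ in counts.most_common(top_k)]
    # Extractive "summary": everything up to the first sentence break.
    summary = re.split(r"(?<=[.!?])\s+", text.strip(), maxsplit=1)[0]
    return {"text": text, "keywords": keywords, "summary": summary}


sample = (
    "Battery costs fell this quarter. Lower battery costs improved margins. "
    "Battery supply remained tight."
)
meta = enrich_chunk(sample, top_k=2)
print(meta["keywords"])  # ['battery', 'costs']
print(meta["summary"])   # Battery costs fell this quarter.
```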

By moving through extraction, chunking, and enrichment, you're not just chopping up a document—you're engineering superior data for your vector embeddings. This allows your RAG system to retrieve highly relevant context, which in turn dramatically improves the accuracy and reliability of its answers.

Choosing The Right Tools For The Job

A great data parsing strategy lives and dies by the tools you choose. Your toolkit has to match the complexity of your data and the specific demands of your AI application—especially for a high-stakes Retrieval-Augmented Generation (RAG) system where context is everything.

The Everyday Workhorses

For general-purpose tasks like scraping web pages or plucking simple patterns from text, you don't always need a cannon to kill a mosquito. Foundational Python libraries are often the perfect starting point. They're lightweight, flexible, and surprisingly powerful for that first-pass data cleanup.

- Beautiful Soup: The go-to for wrangling HTML and XML. It’s brilliant at navigating the Document Object Model (DOM) to pull out text, tables, and other goodies from web pages with surgical precision.

- Regex (re library): Your secret weapon for pattern matching. When you need to find and extract consistently formatted data (think email addresses, phone numbers, or specific IDs buried in a block of text), Regex is ruthlessly efficient.

NLP Libraries: Moving From Structure to Meaning

But what happens when your goal shifts from just extracting text to truly understanding it? That's when you need to bring in the specialists.

Natural Language Processing (NLP) libraries provide the heavy-duty algorithms for semantic analysis. This is absolutely critical for enriching your data chunks with metadata before they are loaded into a vector database for retrieval.

The choice of parsing tool directly impacts the quality of retrieval in a RAG system. A simple tool might extract text, but a sophisticated one extracts meaning, context, and relationships—the very ingredients an LLM needs for accurate answers.

spaCy and NLTK are the two titans in this space. They offer robust tools for tokenization, named entity recognition (NER), and part-of-speech tagging. This lets you wrap your raw text in a rich layer of semantic metadata, making your data far more discoverable for a RAG system.

Specialized Tools for Gnarly RAG Pipelines

Modern AI stacks, especially those built for RAG, have to deal with the messy reality of complex file formats. We're talking about PDFs, PowerPoint slides, and Word documents—files that are a chaotic mix of text, tables, headers, and images.

General-purpose libraries just weren't built for that.

This is where a new class of specialized tools comes in. Platforms like LlamaParse and Unstructured.io are purpose-built for this exact challenge. They’re designed to deconstruct these complex files, preserving the original layout and pulling out tables and other elements as clean, structured data.

Knowing how to use a Python PDF reader is a great start, but these tools take it to another level by interpreting the document's visual and logical flow.

For RAG, this is a game-changer. It means the chunks you create aren’t just raw text; they're complete, context-rich snippets that preserve the document's original intent, leading to far better retrieval outcomes.

Choosing the Right Data Parsing Tool

With so many options, picking the right one can feel overwhelming. This table breaks down some of the most popular libraries and platforms to help you decide.

| Tool/Library | Primary Use Case | Best For | Key Feature |
|---|---|---|---|
| Regex (re) | Simple pattern matching | Extracting structured strings like emails or IDs from unstructured text. | Lightweight and incredibly fast for well-defined patterns. |
| Beautiful Soup | Web scraping & HTML/XML parsing | Pulling specific content (text, tables, links) from web pages. | Excellent at navigating the DOM tree of a web page. |
| spaCy / NLTK | NLP and semantic analysis | Enriching text with metadata (entities, parts of speech) for better retrieval. | Pre-trained models for sophisticated linguistic analysis. |
| Unstructured.io | Complex document parsing | Handling messy, multi-modal files like PDFs, PPTX, and DOCX for RAG. | Reconstructs document elements (tables, titles) into clean formats. |
| LlamaParse | Advanced RAG document prep | Deeply parsing PDFs with complex layouts for high-fidelity RAG. | Preserves hierarchical structure and relationships between elements. |

Ultimately, the tool you choose will have one of the biggest impacts on your pipeline's success. Whether it's a quick Regex script or a full-blown document intelligence platform, making the right call upfront will save you countless headaches down the road.

Avoiding Common Data Parsing Pitfalls

Building a data parsing pipeline that works on a few clean test files is one thing. Building one that holds up in a production RAG system is a whole different ballgame. The real world is messy, and your parser needs to be ready for anything.

A parser that's too rigid will shatter the moment it encounters a corrupted PDF, a webpage with busted HTML, or a document with truly bizarre formatting. That single point of failure can bring your entire ingestion process to a grinding halt. The goal is to build for resilience—your system should be able to log the error, quarantine the problem file, and keep on chugging through the rest of the queue.
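The log-quarantine-continue pattern can be sketched with the standard library. `ingest_all` and the toy parser are hypothetical; `parse` stands in for whatever parser you actually use. Catching a broad `Exception` is a deliberate choice here: at the ingestion boundary you want any single bad file to be logged and set aside rather than halting the whole run.

```python
import logging
import shutil
import tempfile
from pathlib import Path
from typing import Callable

logger = logging.getLogger("ingestion")


def ingest_all(
    paths: list[Path],
    parse: Callable[[Path], list[str]],
    quarantine_dir: Path,
) -> dict[Path, list[str]]:
    """Parse every file, quarantining failures instead of aborting the run."""
    quarantine_dir.mkdir(parents=True, exist_ok=True)
    results: dict[Path, list[str]] = {}
    for path in paths:
        try:
            results[path] = parse(path)
        except Exception:
            # Log the full traceback, move the file aside, and keep going.
            logger.exception("Failed to parse %s; quarantining", path)
            shutil.move(str(path), str(quarantine_dir / path.name))
    return results


def toy_parse(path: Path) -> list[str]:
    """Hypothetical parser: splits on blank lines, rejects empty files."""
    text = path.read_text(encoding="utf-8")
    if not text.strip():
        raise ValueError("empty document")
    return [p for p in text.split("\n\n") if p.strip()]


with tempfile.TemporaryDirectory() as tmp:
    tmp_path = Path(tmp)
    good, bad = tmp_path / "good.txt", tmp_path / "bad.txt"
    good.write_text("First paragraph.\n\nSecond paragraph.", encoding="utf-8")
    bad.write_text("", encoding="utf-8")
    out = ingest_all([good, bad], toy_parse, tmp_path / "quarantine")
    quarantined = (tmp_path / "quarantine" / "bad.txt").exists()
    print(len(out), quarantined)  # 1 True
```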

Optimizing Performance and Throughput

Next up is the ever-present challenge of performance bottlenecks. Parsing millions of documents is a heavy lift, and inefficient code—especially slow Regex patterns or unoptimized NLP models—can slow your data ingestion to a painful crawl.

To keep things moving, you need to think about maximizing throughput from day one. A few strategies can make a massive difference:

- Batch Processing: Don't process documents one by one. Group them into batches and run them in parallel to put all that modern hardware to good use.

- Lazy Loading: Avoid loading everything into memory at once. A smarter approach is to load and parse data only when it's actually needed.

- Profile Your Code: You can't fix what you can't see. Use profiling tools to pinpoint the exact functions that are eating up the most time and memory, then optimize them.
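Batching and lazy loading can be sketched together with the standard library. The document source and parser below are hypothetical placeholders. This sketch uses `ThreadPoolExecutor`, which helps when parsing is I/O-bound or spends its time in code that releases the GIL; for CPU-heavy pure-Python parsing, `ProcessPoolExecutor` (with top-level functions and an `if __name__ == "__main__":` guard) is usually the better fit.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import islice
from typing import Callable, Iterable, Iterator, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def batched(items: Iterable[T], size: int) -> Iterator[list[T]]:
    """Yield lists of up to `size` items, pulling from `items` lazily."""
    iterator = iter(items)
    while batch := list(islice(iterator, size)):
        yield batch


def lazy_documents(n: int) -> Iterator[str]:
    """Hypothetical lazy source: yields one raw document at a time."""
    for i in range(n):
        yield f"doc-{i}: some raw text"


def parse_document(doc: str) -> str:
    """Placeholder parser: returns the document id before the colon."""
    return doc.split(":", 1)[0]


def parse_in_batches(
    docs: Iterable[T],
    parse: Callable[[T], R],
    batch_size: int = 64,
    max_workers: int = 4,
) -> Iterator[R]:
    """Parse documents batch by batch, fanning each batch out to a worker pool."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        for batch in batched(docs, batch_size):
            # pool.map returns results in input order even though work runs in parallel
            yield from pool.map(parse, batch)


ids = list(parse_in_batches(lazy_documents(10), parse_document, batch_size=4))
print(ids[:3])  # ['doc-0', 'doc-1', 'doc-2']
```

Only one batch of documents is held in memory at a time. For the profiling step, Python's built-in `cProfile` module is a common starting point.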

The real test of your system is how gracefully it scales. Parsing work grows roughly in proportion to document volume, so 10x more documents means roughly 10x more work; a well-designed pipeline absorbs that by adding parallel workers instead of making you wait 10x longer. With the right optimizations, your system can handle rapid growth without needing constant babysitting.

Maintaining Trust with Data Provenance

This might be the most important pitfall to avoid: losing data provenance. For any RAG system that you want people to actually trust, knowing exactly where each piece of information came from isn't just a nice-to-have, it's a requirement.

Without a clear link back to the source, you lose all traceability. You can't verify the AI’s answers, and your users can't trust them.

This means every single chunk you send to your vector database must be tagged with metadata. We're talking source document, page number, and maybe even its coordinates on the page. This linkage is the bedrock of a trustworthy and explainable RAG system, giving users a clear path to validate any response right back to the original text.
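One way to keep that linkage is to make provenance part of the chunk's data type, so a chunk cannot be created without it. The `ChunkRecord` fields below are hypothetical; match them to whatever your vector database expects. Some vector stores only accept flat, primitive metadata values, in which case the bounding box may need to be stored as separate fields or a string.

```python
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass(frozen=True)
class ChunkRecord:
    """One chunk plus the provenance needed to trace it back to its source."""

    text: str
    source: str        # file path or URL of the original document
    page: int          # 1-based page number
    chunk_index: int   # position of this chunk within the document
    # (x0, y0, x1, y1) location on the page, when the extractor provides it
    bbox: Optional[tuple[float, float, float, float]] = None

    def citation(self) -> str:
        """Human-readable pointer a RAG answer can cite."""
        return f"{self.source}, p. {self.page}"


record = ChunkRecord(
    text="Total revenue is reported in Table 3.",
    source="reports/annual-2023.pdf",
    page=14,
    chunk_index=2,
    bbox=(72.0, 540.5, 523.0, 610.0),
)
metadata = asdict(record)  # plain dict, ready to store alongside the embedding
print(record.citation())   # reports/annual-2023.pdf, p. 14
```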

Common Questions About Data Parsing

We get a lot of questions about data parsing and how it fits into the modern AI stack. Here are a few of the most common ones we hear.

What's The Difference Between Data Parsing And Data Scraping?

It’s a classic “get vs. understand” distinction.

Data scraping is the first part of the job—it's the act of pulling raw, often messy, data from a source like a website or a PDF. Think of it like ripping pages out of a book. You have the content, but it's just a jumble of text.

Data parsing is the critical next step. This is where you take that raw data and give it structure and meaning. Parsing is what turns that jumble of text into a clean, organized, and usable format that is ready for a RAG pipeline.

In short, scraping gets you the information; parsing makes that information useful for retrieval.

Can I Use AI For Parsing Itself?

Absolutely, and frankly, it's becoming the standard for complex documents. Modern LLMs are fantastic at what you might call "intelligent parsing."

Instead of writing rigid, rule-based scripts that break the moment a document layout changes, you can use a model to infer the structure. It can look at a messy invoice or a dense legal contract and figure out what's a header, what's a table, and what's a key clause. This approach is far more flexible and robust, especially when you're dealing with a wide variety of document formats for your RAG system.

Better parsing creates clean, contextually rich data chunks for your vector database. When a user asks a question, the RAG system retrieves more precise and relevant information, dramatically improving the quality of its response.

How Does Better Parsing Reduce Hallucinations In RAG?

High-quality parsing is one of the most effective weapons against AI hallucinations. It's all about providing the model with clean, unambiguous facts during the retrieval step.

When you parse documents well, you create well-defined, contextually complete chunks of information to load into your vector database.

When a RAG system retrieves this precise, well-structured context, it gives the LLM everything it needs to form an answer based on solid ground. This drastically reduces the model's need to guess, invent facts, or "hallucinate" to fill in the gaps left by poorly processed data.

Ready to turn messy documents into retrieval-ready assets? ChunkForge provides a visual studio to parse, chunk, and enrich your data for high-performance RAG pipelines. Start your free trial at https://chunkforge.com.