Generate PDF With Python for Smarter RAG Retrieval

Learn how to generate PDF with Python using modern libraries. This guide offers actionable code and strategies for building AI and RAG pipelines.

When building a robust AI pipeline, the ability to generate a PDF with Python is more than a convenience—it's a core component for creating high-quality, retrievable data. Libraries like ReportLab offer granular control for building structured documents from scratch, while tools like Playwright excel at high-fidelity HTML-to-PDF conversions. The key is that by programmatically creating PDFs, you ensure they are structured, machine-readable, and optimized for Retrieval-Augmented Generation (RAG) from the moment they're created.

Why Python PDF Generation Is Critical for RAG

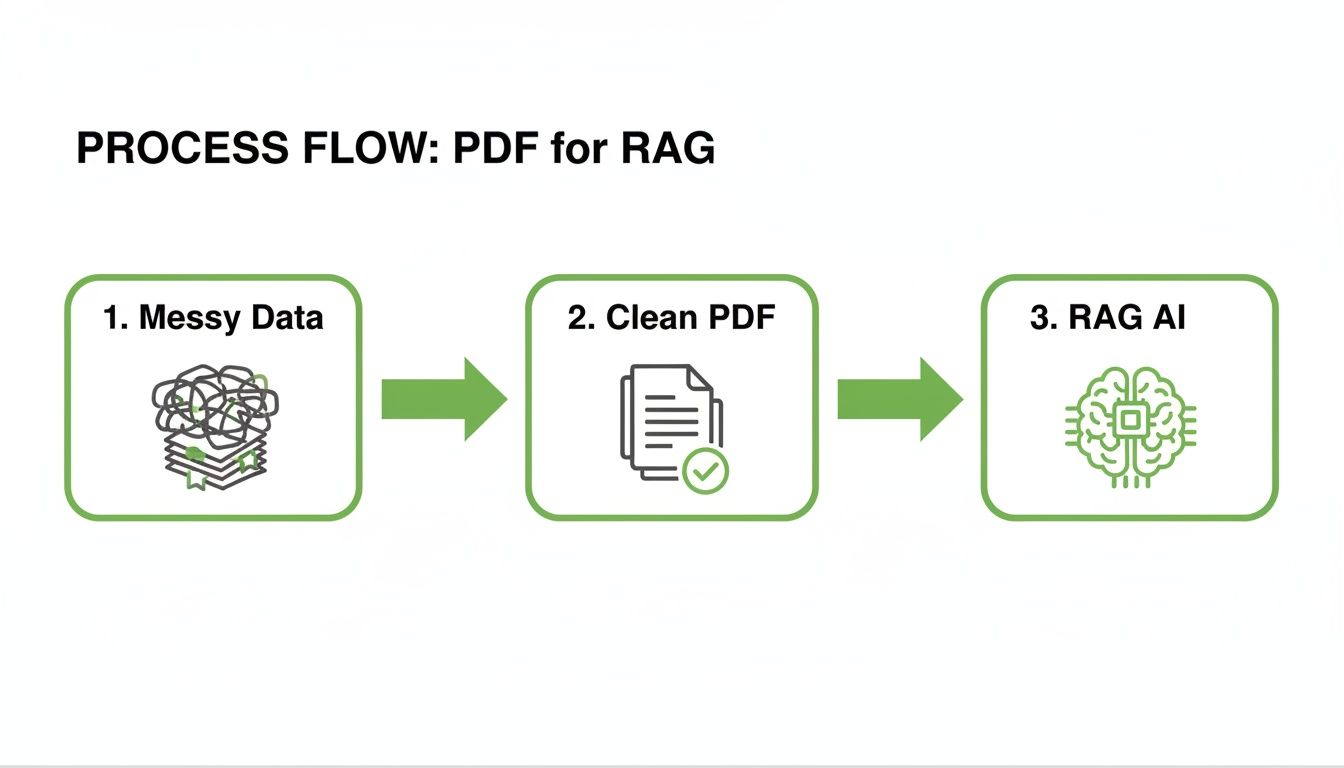

For any AI system using RAG, the quality of source documents directly dictates the quality of its output. It's a classic case of "garbage in, garbage out."

Feed your RAG pipeline a diet of poorly structured, scanned, or inconsistently formatted PDFs, and it will inevitably return unreliable, irrelevant, or factually incorrect answers. The retrieval mechanism simply cannot find the precise information it needs within the noise.

This is where generating PDFs with Python becomes a strategic advantage. Instead of wrestling with messy documents as a cleanup task, you engineer them correctly from the start. This proactive approach ensures every document is born ready for accurate chunking, embedding, and retrieval by your AI system.

The Problem with Unstructured Documents

Most AI projects start with a collection of existing PDFs from varied sources—scanned invoices, exported reports, and web pages saved hastily. These documents are often riddled with issues that cripple a RAG system's retrieval capabilities:

- Inconsistent Formatting: Unpredictable layouts, mixed fonts, and multi-column text confuse parsing algorithms, leading to jumbled or nonsensical data chunks.

- Hidden or Missing Text: Critical information is often trapped inside images or vector graphics, making it invisible to text extractors and leaving significant gaps in your AI's knowledge base.

- Lost Context: The crucial relationships between headers, tables, and paragraphs are often lost during extraction. Without this structural context, the AI cannot grasp the document's true meaning.

These problems directly sabotage the retrieval step of the RAG pipeline. If the system cannot accurately chunk and index the content, it has no hope of finding the right context to answer a user's query.

This process is about transforming raw data into a clean, structured source optimized for RAG retrieval.

By controlling the creation process, you guarantee the final document is optimized for AI ingestion, leading to more precise and reliable information retrieval.

Engineering for Retrieval Precision

When you generate a PDF with Python, you're not just making a file—you're engineering a data asset for retrieval. This control allows you to enforce a consistent, machine-readable structure that directly improves the performance of your RAG system.

By programmatically creating PDFs, you shift from data cleanup to data engineering. This is fundamental for building production-grade RAG systems. It treats the document as the first, most critical step in the AI pipeline, ensuring the retrieval mechanism can access clean, contextually rich information.

Choosing Your Python PDF Library for RAG Workflows

Picking the right tool is crucial for optimizing your documents for retrieval. Some libraries offer pixel-perfect control, while others are designed for converting web content. This table breaks down the leading options based on their utility for RAG systems.

| Library | Best For | Key Strengths | RAG Use Case |
|---|---|---|---|
| ReportLab | Building complex, structured documents from data. | Granular control over layout, text, and graphics. Enables consistent metadata. | Generating clean, structured reports or invoices with predictable formatting, ensuring reliable chunking for factual data retrieval. |
| FPDF2 (successor to PyFPDF) | Simple, text-heavy document creation. | Lightweight and pure Python. Easy to get started. | Creating standardized, parsable documents like data exports or forms where clean text extraction is the primary goal. |
| WeasyPrint | High-fidelity HTML and CSS to PDF conversion. | Excellent CSS support. Preserves web page structure and semantics. | Converting well-structured HTML knowledge base articles into PDFs while maintaining headings (H1, H2) and tables for semantic chunking. |
| Playwright | Converting dynamic, JavaScript-heavy web pages. | Renders pages in a real browser engine for high fidelity. | Capturing live dashboards or web apps as PDFs, ensuring that all dynamically loaded content is included and searchable. |

The best choice depends on your source data. For structured data from a database, ReportLab or FPDF2 are ideal. For well-formatted HTML, WeasyPrint or Playwright are superior. A detailed comparison of top libraries can provide further insight into performance benchmarks.

Building Structured PDFs With ReportLab and FPDF2

When your goal is to generate a PDF from raw data—like database query results or API responses—low-level libraries like ReportLab and FPDF2 are your most powerful allies.

These tools give you complete control over the document structure. You can programmatically place every text element, draw every table line, and define every header. This is fundamentally different from converting an existing file; you are the architect, building a document optimized for machine interpretation from the ground up.

This level of control is a massive advantage for RAG pipelines. By defining the structure in code, you guarantee a consistent, predictable layout every time. This consistency makes downstream chunking and data extraction processes far more reliable and less prone to errors, leading to higher-quality embeddings and better retrieval.

Getting Started With FPDF2

FPDF2 is praised for its simplicity, making it an excellent choice for creating straightforward, text-centric documents. It is written in pure Python and installs with pip alongside only a few small dependencies (such as Pillow for image handling), so it's easy to integrate into any workflow.

Imagine you need to generate daily reports from structured data. With FPDF2, you can script a process that lays out the information in a clean, multi-page format with consistent headers and footers. This ensures your parsing logic for the RAG pipeline doesn't have to account for layout variations.

Here’s a basic example for creating a report with a consistent structure:

```python
from fpdf import FPDF


class PDF(FPDF):
    def header(self):
        # Add a logo for consistency (assumes logo.png sits next to this script)
        self.image('logo.png', 10, 8, 25)
        self.set_font('Helvetica', 'B', 15)
        # Move right so the title cell lands in the middle of the page
        self.cell(80)
        self.cell(30, 10, 'Daily Sales Report', border=0, align='C')
        # Add a line break for spacing
        self.ln(20)

    def footer(self):
        # Position footer 1.5 cm from bottom
        self.set_y(-15)
        self.set_font('Helvetica', 'I', 8)
        # Add a centered page number
        self.cell(0, 10, f'Page {self.page_no()}', align='C')


# Initialize the PDF
pdf = PDF()
pdf.add_page()
pdf.set_font('Times', '', 12)

# Add structured content, one full-width line per record
for i in range(1, 41):
    # new_x/new_y replace the deprecated ln=True argument in current fpdf2 releases
    pdf.cell(0, 10, f'Data point {i}: Details...', new_x='LMARGIN', new_y='NEXT')

pdf.output('sales_report.pdf')
```

Using a class ensures every generated report has an identical structure, which is perfect for automated ingestion and retrieval systems.
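Because every body line in the report above follows the same "Data point N: ..." pattern, a downstream chunker can lean on a single regular expression instead of layout heuristics. The following is a minimal sketch, assuming the text has already been extracted from the PDF by whatever parser you use; `chunk_report_lines` and its `chunk_size` parameter are hypothetical names for illustration, not part of FPDF2.

```python
import re

# Matches the body lines produced by the report script, e.g. "Data point 7: Details..."
RECORD_PATTERN = re.compile(r"^Data point (\d+): (.*)$")


def chunk_report_lines(extracted_text, chunk_size=10):
    """Group recognized records into fixed-size chunks, skipping headers and footers."""
    records = []
    for line in extracted_text.splitlines():
        match = RECORD_PATTERN.match(line.strip())
        if match:  # header, footer, and blank lines simply do not match
            records.append({"id": int(match.group(1)), "text": match.group(2)})
    return [records[i:i + chunk_size] for i in range(0, len(records), chunk_size)]


# Assumed sample of extracted text: a title, two records, and a footer line
sample = "Daily Sales Report\nData point 1: Details...\nData point 2: Details...\nPage 1"
chunks = chunk_report_lines(sample, chunk_size=1)
```

The header and page-number lines drop out on their own because they never match the record pattern, which is the practical payoff of a layout you control.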

Choosing Between FPDF2 and ReportLab

- FPDF2 for Speed and Simplicity: It excels at creating clean, text-heavy documents quickly. Ideal when performance and ease of use are priorities for generating parsable content.

- ReportLab for Power and Complexity: It’s the right tool when your documents require complex tables, charts, or custom vector graphics. This allows you to create rich, self-contained data visualizations that can be indexed and retrieved.

ReportLab is your go-to for embedding complex data structures directly into the PDF. If your document requires intricate tables with specific styling or data visualizations like bar charts, ReportLab provides the robust framework to build those elements programmatically, ensuring they are structured for accurate extraction.
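As a rough illustration of building a table programmatically, the sketch below shapes records into rows and hands them to ReportLab's platypus `Table`. The helper names (`build_table_data`, `render_table_pdf`), the sample records, and the styling choices are assumptions made for this example; the ReportLab import is deferred so the data-shaping step can be exercised on its own.

```python
def build_table_data(rows, columns):
    """Return a header row followed by one string-valued row per record."""
    data = [list(columns)]
    for row in rows:
        data.append([str(row.get(col, "")) for col in columns])
    return data


def render_table_pdf(path, rows, columns):
    """Write the records to a single-table PDF using ReportLab's platypus layer."""
    # Imported here so the helper above works even where ReportLab is not installed.
    from reportlab.lib import colors
    from reportlab.lib.pagesizes import A4
    from reportlab.platypus import SimpleDocTemplate, Table, TableStyle

    # repeatRows=1 repeats the header row on every page the table spans
    table = Table(build_table_data(rows, columns), repeatRows=1)
    table.setStyle(TableStyle([
        ("FONTNAME", (0, 0), (-1, 0), "Helvetica-Bold"),
        ("BACKGROUND", (0, 0), (-1, 0), colors.lightgrey),
        ("GRID", (0, 0), (-1, -1), 0.5, colors.black),
    ]))
    SimpleDocTemplate(path, pagesize=A4).build([table])


# Hypothetical sample records
sales = [{"region": "North", "units": 120}, {"region": "South", "units": 95}]
table_data = build_table_data(sales, ["region", "units"])
# render_table_pdf("sales_table.pdf", sales, ["region", "units"])  # requires reportlab
```

Keeping one record per row with a repeated header means a parser sees the same column order on every page.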

Ultimately, both libraries empower you to generate a PDF with Python that is perfectly structured for machine consumption, directly improving the reliability of any downstream AI retrieval task.

Converting Web Content to PDF With Perfect Fidelity

When your source material is a dynamic webpage or a styled HTML report, low-level libraries fall short. They cannot replicate the complex interplay of HTML, CSS, and JavaScript that defines modern web content. This is where browser automation tools become essential for creating high-quality, retrievable documents.

To generate a PDF with Python that is an exact replica of a webpage, you must render it in a browser. Tools like Playwright control a headless browser to load and render the page perfectly before saving it as a PDF. This ensures that custom fonts, CSS layouts, and even JavaScript-driven charts are captured flawlessly, preserving the document's original structure and context for the RAG system.

Why Headless Browsers Are the Gold Standard for RAG Ingestion

Using a headless browser ensures that what you see on the screen is what your RAG system gets. This high-fidelity conversion is critical because it preserves the semantic structure of the original HTML. Headings (<h1>, <h2>), lists, and tables are all rendered correctly, which allows for more intelligent chunking strategies that respect the document's hierarchy. This is a massive improvement over older methods that often produced flat, poorly structured text. A more detailed guide to generating PDFs in Python covers this modern approach.

Capturing Dynamic Web Content with Playwright

Playwright simplifies the process of automating a browser to capture dynamic web content. You can navigate to a URL, wait for all asynchronous content to load, and then print the fully rendered page to a PDF. This is ideal for archiving web pages or creating reports from live dashboards for your knowledge base.

Here’s a practical example of capturing a live page as a PDF:

```python
from playwright.sync_api import sync_playwright


def capture_webpage_for_rag(url, output_path):
    with sync_playwright() as p:
        browser = p.chromium.launch()
        page = browser.new_page()

        # Navigate to the URL and wait for the page to be fully loaded
        page.goto(url, wait_until="networkidle")

        # Generate a searchable PDF with background graphics
        page.pdf(
            path=output_path,
            format="A4",
            print_background=True,
            margin={"top": "1cm", "bottom": "1cm"},
        )
        browser.close()


# Example usage
knowledge_base_url = "https://example.com/documentation"
pdf_output = "documentation.pdf"
capture_webpage_for_rag(knowledge_base_url, pdf_output)
```

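The `wait_until="networkidle"` argument is crucial here. It tells Playwright to treat navigation as finished only once the network has been quiet for a short period (no open connections for at least 500 ms), which gives JavaScript-loaded content a chance to render before the PDF is generated. Pages that poll or stream continuously may never go idle; for those, waiting on a specific element is a more reliable signal.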

By using a real browser engine, you capture a high-fidelity snapshot that preserves the exact look, feel, and structure of the original source. This produces searchable, context-rich PDFs that are ideal inputs for knowledge bases where visual and structural context is vital for accurate retrieval.

Fine-Tuning Your PDF Output for RAG

Playwright's page.pdf() method offers options to optimize the output for retrieval:

- Page Layout: Standardize the `format` ('A4') and `margin` to ensure consistency across all documents in your knowledge base.

- Headers and Footers: Use `header_template` and `footer_template` to add metadata like the source URL or generation date, providing valuable context for the RAG system. These templates only render when `display_header_footer=True` is also set.

- Content Control: Always use `print_background=True` to include branded elements or visual cues that might aid in contextual understanding.

This method is the clear winner when your source is web-based and structural fidelity is a top priority for enabling high-quality retrieval.
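The header and footer options described above can be bundled into one reusable set of keyword arguments. This is a sketch under stated assumptions: `build_pdf_options` is a hypothetical helper, the inline styles are illustrative, and the `pageNumber` and `totalPages` classes are the placeholders Chromium fills in when printing.

```python
import html
from datetime import date


def build_pdf_options(source_url, output_path):
    """Return keyword arguments for Playwright's page.pdf() with provenance in the margins."""
    safe_url = html.escape(source_url)
    generated = date.today().isoformat()
    # Header/footer templates tend to render very small by default, so set font-size explicitly.
    style = "font-size:8px; width:100%; text-align:center;"
    return {
        "path": output_path,
        "format": "A4",
        "print_background": True,
        "display_header_footer": True,
        "header_template": f'<div style="{style}">Source: {safe_url}</div>',
        "footer_template": (
            f'<div style="{style}">Generated {generated} | '
            'Page <span class="pageNumber"></span> of <span class="totalPages"></span></div>'
        ),
        # Margins must leave room for the header and footer or they will be clipped.
        "margin": {"top": "2cm", "bottom": "2cm"},
    }


options = build_pdf_options("https://example.com/documentation", "documentation.pdf")
# Inside a Playwright session: page.pdf(**options)
```

Because the source URL and generation date end up as real text on every page, they survive extraction and travel with each chunk.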

Optimizing Your PDF Pipelines for Speed and Scale

Generating a single PDF is simple, but what if you need to generate a PDF with Python for thousands of documents to populate a knowledge base? This is where pipeline optimization becomes critical.

Scaling a document generation pipeline requires thinking about throughput, memory efficiency, and parallel processing. Without a solid strategy, high-volume generation can overwhelm your system, leading to slow processing times and failed jobs. For RAG systems that rely on up-to-date information, an efficient pipeline is non-negotiable.

Asynchronous Processing for High Throughput

The most effective way to scale PDF generation is to use an asynchronous approach. Instead of processing documents one by one in a blocking fashion, an async system can manage multiple generation tasks simultaneously.

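This is a game-changer for batch jobs, such as converting an entire documentation site into PDFs for your RAG system. The gains are largest when the work is I/O-bound, as with browser rendering that waits on page loads; CPU-bound generation with ReportLab or FPDF2 benefits more from multiple worker processes.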

The key is to decouple the PDF generation task from the initial request. By using a task queue like Celery or Dramatiq, your application can offload the heavy lifting to background workers. This allows your system to ingest and process content sources efficiently, ensuring your knowledge base can be updated quickly and reliably.
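The same decoupling idea can be tried in a single process before reaching for a task queue. The sketch below uses only `asyncio` with a semaphore to cap how many jobs run at once; `render_document` is a placeholder standing in for real work (for example a Playwright `page.pdf()` call), and the function names and the `max_concurrency` default are assumptions for illustration, not Celery or Dramatiq APIs.

```python
import asyncio


async def render_document(doc_id):
    """Placeholder for real work, e.g. a browser render or a hand-off to a worker."""
    await asyncio.sleep(0.01)  # simulates I/O-bound rendering
    return f"{doc_id}.pdf"


async def render_batch(doc_ids, max_concurrency=4):
    """Render all documents while keeping at most max_concurrency jobs in flight."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded(doc_id):
        async with semaphore:
            return await render_document(doc_id)

    # gather returns results in the same order as the inputs
    return await asyncio.gather(*(bounded(d) for d in doc_ids))


outputs = asyncio.run(render_batch(["guide", "faq", "changelog"]))
```

Capping concurrency matters more than maximizing it: each headless browser page holds memory, so an unbounded batch can exhaust the machine well before it saturates the network.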

Smart Memory and Resource Management

Generating complex PDFs can be memory-intensive. A single document with high-resolution images can consume significant RAM. To prevent system crashes during large-scale generation, smart resource management is essential.

- Write Incrementally: Use libraries that can stream the PDF to disk rather than building it entirely in memory. This keeps the memory footprint low and predictable.

- Optimize Images: Pre-process and compress images before embedding them. This simple step can drastically reduce the final file size and the memory required during generation.

- Cache Reusable Assets: Cache common assets like logos, fonts, or templates. Reusing these elements avoids the overhead of loading and processing them for every document.
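Caching reusable assets can be as simple as memoizing the function that reads them. The sketch below uses the standard library's `functools.lru_cache`; `load_asset` is a hypothetical helper, and the temporary file merely stands in for a real logo.

```python
from functools import lru_cache
from pathlib import Path
import tempfile


@lru_cache(maxsize=32)
def load_asset(path):
    """Read an asset from disk once; later calls with the same path reuse the bytes."""
    return Path(path).read_bytes()


# Demonstration with a throwaway file standing in for a logo
with tempfile.TemporaryDirectory() as tmp:
    logo_path = str(Path(tmp) / "logo.png")
    Path(logo_path).write_bytes(b"fake-image-bytes")
    first = load_asset(logo_path)
    second = load_asset(logo_path)  # served from the cache, no second disk read
    cache_stats = load_asset.cache_info()
```

One caveat of this approach is that the cache does not notice when a file changes on disk, so call `load_asset.cache_clear()` after updating an asset in a long-running worker.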

These strategies are fundamental for building a robust pipeline capable of handling a demanding workload. Efficient generation is the first step in effective RAG pipeline optimization, as it ensures a steady flow of high-quality documents into your system.

Embedding Metadata for Smarter AI Retrieval

When you generate a PDF with Python, you are creating a data container. For RAG systems, embedding rich, structured metadata directly into the PDF is a superpower. It provides your AI with the precise context needed to filter and retrieve information with surgical accuracy.

This goes beyond standard fields like Author or Title. For RAG, you should embed custom, machine-readable data that helps filter, rank, and contextualize information during retrieval. Think of it as adding invisible, structured labels to your document that a vector database can use to narrow down search results before performing a semantic search.

Why Structured Metadata Is a Game-Changer for Retrieval

Injecting custom metadata transforms a simple PDF into a highly retrievable asset. Your RAG system can run precise, filtered queries, which dramatically reduces irrelevant results and improves the accuracy and trustworthiness of AI responses.

For example, you could embed a JSON object into the PDF's metadata with fields like:

- Source ID: A unique identifier linking the PDF to a database record or CMS entry.

- Document Type: Tags like `quarterly-report`, `technical-spec`, or `legal-contract`.

- Audience: A field to specify the target audience, such as `internal` or `public`.

- Version: A version number or timestamp to ensure the AI retrieves the most current information.

This structured data is crucial when you build a knowledge base designed for advanced, filtered retrieval.
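The fields listed above can be assembled in one place before they are written to a file. This is a minimal sketch: `build_pdf_metadata`, the `CUSTOM_PREFIX` naming scheme, and the sample values are all hypothetical, chosen only to show the shape of the mapping.

```python
from datetime import datetime, timezone

CUSTOM_PREFIX = "/x-custom-"  # assumed naming scheme for non-standard keys


def build_pdf_metadata(title, source_id, doc_type, audience, version):
    """Return an Info-dictionary style mapping with string keys and string values."""
    return {
        "/Title": title,
        f"{CUSTOM_PREFIX}source-id": source_id,
        f"{CUSTOM_PREFIX}doc-type": doc_type,
        f"{CUSTOM_PREFIX}audience": audience,
        f"{CUSTOM_PREFIX}version": str(version),  # keep every value a plain string
        f"{CUSTOM_PREFIX}generated-at": datetime.now(timezone.utc).isoformat(),
    }


# Hypothetical sample values
metadata = build_pdf_metadata("Q3 Report", "cms-4821", "quarterly-report", "internal", 3)
```

Centralizing the key names in one function keeps them identical across documents, which is what makes later filtering dependable.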

A Practical Example with PyPDF2

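While many libraries support basic metadata, PyPDF2 provides a straightforward way to inject custom key-value pairs into an existing PDF. (PyPDF2 has since been folded back into the pypdf project, which exposes the same PdfReader and PdfWriter classes, so new projects may prefer to import from pypdf.) Suppose you've just generated an invoice and want to embed its status and client ID for targeted retrieval.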

Here’s how you can add this metadata:

```python
from PyPDF2 import PdfWriter, PdfReader

reader = PdfReader("original_invoice.pdf")
writer = PdfWriter()

# Copy all pages from the original PDF
for page in reader.pages:
    writer.add_page(page)

# Add standard and custom metadata for retrieval
writer.add_metadata(
    {
        "/Author": "Automated System",
        "/Title": "Invoice #12345",
        "/x-custom-client-id": "CLIENT-9876",
        "/x-custom-status": "unpaid",
        "/x-custom-doc-type": "invoice",
    }
)

# Save the new PDF with embedded metadata
with open("invoice_with_metadata.pdf", "wb") as f:
    writer.write(f)
```

The `/x-custom-` prefix is simply a naming convention used here to mark non-standard fields; the PDF document information dictionary accepts arbitrary keys, so any consistent scheme works. Once this document is indexed, your retrieval system can execute a query like "find all unpaid invoices for CLIENT-9876" by filtering on this metadata before performing a semantic search. Narrowing the candidate set first makes retrieval faster and considerably more precise.
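The filter-then-search step can be sketched without any particular vector database. In the example below, `filter_by_metadata` and the in-memory `index` are hypothetical stand-ins; a real system would express the same condition through its store's own metadata filter.

```python
def filter_by_metadata(records, **required):
    """Keep only records whose metadata matches every required key/value pair."""
    return [
        r for r in records
        if all(r["metadata"].get(key) == value for key, value in required.items())
    ]


# Hypothetical index entries; in practice these would come from your vector store
index = [
    {"id": "inv-1", "metadata": {"client_id": "CLIENT-9876", "status": "unpaid"}},
    {"id": "inv-2", "metadata": {"client_id": "CLIENT-9876", "status": "paid"}},
    {"id": "inv-3", "metadata": {"client_id": "CLIENT-1111", "status": "unpaid"}},
]
candidates = filter_by_metadata(index, client_id="CLIENT-9876", status="unpaid")
# Semantic search would then rank only `candidates`, not the full index.
```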

How do I handle complex layouts like tables for RAG?

One of the biggest challenges for RAG is accurately extracting data from tables. If a table's structure is mangled during PDF creation or parsing, the factual context is lost.

The solution is to use a library that treats tables as structured entities. ReportLab's `Table` objects give you fine-grained control over cell structure. Alternatively, browser-based tools like Playwright render HTML `<table>` elements perfectly, ensuring the PDF preserves the structure needed for accurate data extraction and retrieval.

How do I manage custom fonts to ensure consistent parsing?

Inconsistent fonts can cause issues for OCR engines or layout-aware parsing models, leading to incorrect text extraction. The solution is to always embed your fonts.

- In ReportLab: Use `pdfmetrics.registerFont` with a `TTFont` to load the `.ttf` files shipped with your project.

- With Playwright/WeasyPrint: Use standard CSS `@font-face` rules. The rendering engine will handle embedding automatically.

This ensures your documents are visually consistent and machine-readable, improving the reliability of your data extraction pipeline.

Which library is fastest for high-volume generation?

Performance is critical when populating a large knowledge base. The best library depends on your document complexity.

For simple, text-heavy PDFs, FPDF2 is a speed champion due to its lightweight, pure-Python design.

For rendering complex HTML templates, a headless browser like Playwright is often more efficient at scale. While it has more startup overhead, it excels at handling complex CSS and JavaScript. Always benchmark your specific use case to find the right balance between features and raw performance.
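A benchmark does not need to be elaborate to be useful. The sketch below times any zero-argument callable with `time.perf_counter`; `benchmark` and `fake_generator` are hypothetical names, and the stand-in workload should be replaced with a call that builds one representative PDF using the library under test.

```python
import time


def benchmark(generate, runs=5):
    """Call generate() several times and return the best wall-clock time in seconds."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        generate()
        timings.append(time.perf_counter() - start)
    return min(timings)


def fake_generator():
    """Stand-in for a real call such as building one PDF with your chosen library."""
    sum(range(10_000))


best = benchmark(fake_generator, runs=3)
```

Taking the minimum over several runs reduces noise from other processes; for browser-based tools, measure the launch cost separately from per-page rendering, since the browser is normally started once per batch.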
