A Developer's Guide to PDF to Markdown Converters for RAG

Unlock your RAG system's potential. Our developer guide covers using a PDF to Markdown converter with Python and OCR for superior data extraction and retrieval.

Turning a PDF into Markdown isn't just a file conversion. It's the absolute first, most critical step in building a Retrieval-Augmented Generation (RAG) system that actually works. A clean, well-structured Markdown document is the bedrock of retrieval accuracy; a messy conversion will kneecap your AI's ability to find what it needs. It’s the classic ‘garbage in, garbage out’ problem, but for Large Language Models.

Why Clean Markdown Is a Game-Changer for RAG

A RAG system pulls information from a knowledge base to give users accurate, context-aware answers. That knowledge base is built directly from your source documents. If that initial conversion from PDF is flawed, your entire system is built on a shaky foundation, directly impacting retrieval performance.

When a pdf to markdown converter spits out jumbled text, mangled tables, and forgotten headings, the data chunks you create are noisy and semantically hollow. This directly poisons the retrieval process. Vector embeddings created from that poorly structured text just won't have the contextual richness needed for precise similarity searches. When a user asks a question, your RAG system will likely pull up irrelevant snippets because the underlying data is a chaotic mess of broken sentences and misinterpreted layouts.

The Problem with Traditional PDF Tools

The market for PDF software is absolutely booming, thanks to digitization and the demands of remote work. In 2024, the global PDF software market was valued somewhere between USD 1.85 billion and USD 2.15 billion, and it's expected to climb significantly by 2033. You can explore more about the PDF software market trends to see what's driving this expansion.

But this growth hides a fundamental disconnect. Most PDF tools are built for human eyes. Their main job is to preserve visual fidelity—making sure the document looks perfect on a screen. They were never designed to produce clean, machine-readable content for an AI to parse.

This human-first design approach is what causes the conversion headaches that are so toxic for RAG:

- Lost Structural Hierarchy: Headings (`#`), subheadings (`##`), and lists (`-` or `1.`) are what give a document its logical flow. A bad conversion flattens everything into plain text, completely wiping out the relationships between concepts and preventing structured, hierarchical chunking.

- Mangled Tables: Tables often get turned into unreadable walls of text, with their row-and-column structure completely lost. The structured data inside becomes useless to the LLM.

- Inclusion of Noise: Unwanted junk like page headers, footers, and page numbers gets mixed into the main content, polluting your data chunks, degrading embedding quality, and throwing off the retrieval model.

Key Takeaway: For RAG systems, semantic accuracy is infinitely more important than visual perfection. A successful conversion is one that preserves the document's logical structure, ensuring the relationships between headers, paragraphs, and data points remain intact for a machine to understand and enabling high-quality retrieval.

The RAG-Ready Markdown Standard

At the end of the day, the goal of a specialized pdf to markdown converter for RAG is to generate semantically structured text. This means the output isn't just a blob of words; it's data that holds onto its original meaning and context, making it ideal for retrieval.

Clean Markdown paves the way for smarter chunking strategies. Instead of slicing documents at arbitrary token counts, you can split them along logical boundaries like sections and subsections. This approach ensures every chunk is a coherent, self-contained piece of information.
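To make that concrete, here is a minimal sketch of heading-based splitting in plain Python. It assumes ATX-style headings (`#` through `######`) and treats each heading plus the text beneath it as one chunk; `split_by_headings` and the `heading_path` field are hypothetical names for illustration, not part of any library.

```python
import re

# Matches ATX-style Markdown headings such as "# Title" or "### Sub-section".
HEADING_RE = re.compile(r"^(#{1,6})\s+(.*\S)\s*$")


def split_by_headings(markdown: str) -> list[dict]:
    """Split Markdown into one chunk per section, keeping the heading path."""
    chunks: list[dict] = []
    path: list[str] = []    # heading titles from the top level down to the current section
    buffer: list[str] = []  # lines of the section currently being collected

    def flush() -> None:
        text = "\n".join(buffer).strip()
        if text:
            chunks.append({"heading_path": " > ".join(path), "text": text})
        buffer.clear()

    for line in markdown.splitlines():
        match = HEADING_RE.match(line)
        if match:
            flush()  # close the previous section under its own heading path
            level = len(match.group(1))
            # Drop headings at the same or a deeper level, then descend into this one.
            del path[level - 1:]
            path.append(match.group(2))
        buffer.append(line)
    flush()
    return chunks
```

The heading path (for example `Intro > Setup`) doubles as useful chunk metadata. One caveat: this naive line matcher would also treat `#` comment lines inside fenced code blocks as headings, so a production version should track code fences.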

When your vector database is filled with these high-quality, context-rich chunks, your RAG system's ability to find relevant information and generate reliable answers goes through the roof. That first conversion step isn't just a nice-to-have; it's a non-negotiable prerequisite for high-performance retrieval.

Choosing the Right PDF to Markdown Converter Toolkit

Picking the right pdf to markdown converter isn't about finding a single tool that does it all. It’s about building a versatile toolkit. The right tool depends entirely on your source documents and how clean you need the output to be for your RAG system's retrieval accuracy.

What works for a simple, single-column article will absolutely choke on a 200-page scanned technical manual filled with tables and diagrams.

This choice is critical because it directly dictates the quality of the data going into your RAG pipeline. A poor conversion creates a mess of downstream problems—garbled text, inaccurate embeddings, and ultimately, useless search results. The goal is to match the tool to the specific job at hand, ensuring your final Markdown is structured, clean, and semantically intact.

Simple Tools for Simple Jobs

For straightforward, text-heavy PDFs with basic formatting, a simple tool can be surprisingly effective. Think of these as your first line of defense for uncomplicated documents.

- Online Converters: Perfect for quick, one-off jobs with non-sensitive documents. They’re fast and require zero setup, but they often butcher complex layouts and give you no control over the output. Use them for a quick look, but never for building a production RAG pipeline.

- Command-Line Power with Pandoc: For developers, Pandoc is a massive leap forward. It's a command-line workhorse that juggles a dizzying array of formats. It can't read PDFs directly (PDF is an output-only format for Pandoc), so its real power lies in converting other formats, such as HTML or DOCX, into clean Markdown.

These tools are great starting points. But for the demanding needs of a high-performance RAG system, you'll slam into their limitations pretty quickly, especially with documents that weren't born digital.

Expert Insight: Don't just pick one tool. I often use a specialized library to pull raw text and then pipe it through Pandoc to standardize the Markdown structure. It’s all about using each tool for what it does best.
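Here is a rough sketch of that two-step pattern. Because Pandoc can't take PDF input, the extraction happens in PyMuPDF first; as an assumption, this version asks PyMuPDF for XHTML (`page.get_text("xhtml")`) rather than raw text, since HTML gives Pandoc more structure to map onto Markdown. It expects the `pandoc` binary on your `PATH`, and all function names are hypothetical.

```python
import shutil
import subprocess


def pandoc_command(src_format: str = "html", dst_format: str = "gfm") -> list[str]:
    """Build a Pandoc invocation that reads stdin and writes stdout."""
    return ["pandoc", "--from", src_format, "--to", dst_format, "--wrap=none"]


def html_to_markdown(html: str) -> str:
    """Pipe extracted HTML through Pandoc to get normalized GitHub-flavored Markdown."""
    if shutil.which("pandoc") is None:
        raise RuntimeError("pandoc is not installed or not on PATH")
    result = subprocess.run(
        pandoc_command(), input=html, capture_output=True, text=True, check=True
    )
    return result.stdout


def pdf_to_markdown(pdf_path: str) -> str:
    """Extract every page as XHTML with PyMuPDF, then let Pandoc standardize it."""
    import fitz  # PyMuPDF; imported lazily so the Pandoc helpers work without it

    with fitz.open(pdf_path) as doc:
        html = "\n".join(page.get_text("xhtml") for page in doc)
    return html_to_markdown(html)
```

`--wrap=none` keeps each paragraph on one line, which tends to make downstream chunking and cleanup simpler.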

Programmatic Control for RAG Precision

When you need absolute, granular control to preserve a document's structure for optimal retrieval, programmatic libraries are the only real option. Python, in particular, has a fantastic ecosystem of tools that let you dissect a PDF element by element. This gives you the power to reconstruct its meaning accurately in Markdown.

This approach shifts you from being a passive user of a converter to an active architect of your conversion logic. Yes, it's more work. But the payoff in retrieval accuracy is huge. For anyone serious about building a top-tier RAG system, this level of control is non-negotiable.

Here are a few actionable libraries to anchor your toolkit:

- PyMuPDF (fitz): Incredibly fast and fantastic for extracting text along with its positional coordinates. This is essential for correctly reassembling text from multi-column layouts and for surgically removing headers and footers to reduce noise.

- pdfplumber: Built on `pdfminer.six`, it has excellent features for extracting tables and pinpointing the location of text. It's my go-to when preserving tabular data is the top priority for retrieval.

Beyond just getting content into Markdown, it's also worth knowing about other transformation methods. For instance, some PDF to Link solutions can turn static files into trackable URLs, which can be useful in a broader document management strategy.

A Comparison of PDF to Markdown Conversion Methods for RAG

To build a reliable RAG pipeline, you need to understand the trade-offs between different conversion methods. The right choice depends on your specific documents, technical skills, and the level of precision your application demands.

| Conversion Method | Best For | Pros | Cons |
|---|---|---|---|
| Online Converters | Quick, non-sensitive, one-off tasks | Easy to use, no installation | Poor for complex layouts, security risks, no control over output |
| CLI Tools (e.g., Pandoc) | Developers automating simple, text-based conversions | Scriptable, fast, versatile for format conversion | No native PDF input (needs a separate extraction step), struggles with images/tables |
| Programmatic Libraries (Python) | Production RAG pipelines, complex documents | Full control over extraction, preserves structure for chunking, handles edge cases | Requires coding skills, more complex setup |
| OCR Integration (e.g., Tesseract) | Scanned PDFs, image-based documents | Makes inaccessible text readable and searchable | Slower, can introduce character errors, requires post-processing |

Ultimately, the most robust RAG pipelines often use a hybrid approach, leveraging programmatic libraries with OCR capabilities to handle the widest possible range of document types.

Tackling Scanned Documents with OCR

The final boss of PDF conversion is the scanned document—it's just an image of text, not actual text. Your standard converter is completely blind to its content. This is where Optical Character Recognition (OCR) becomes an indispensable part of your toolkit.

By integrating an OCR engine like Tesseract (usually via a Python wrapper like pytesseract), your script can "read" the text from these page images. The typical workflow involves using a library like PyMuPDF to render each PDF page as an image and then feeding that image to the OCR engine for text extraction.
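A minimal sketch of that workflow, assuming PyMuPDF, Pillow, pytesseract, and the Tesseract binary are installed. As an added assumption, it only runs OCR on pages whose text layer is (nearly) empty, using a hypothetical 25-character threshold, so born-digital pages keep their exact text.

```python
def needs_ocr(extracted_text: str, min_chars: int = 25) -> bool:
    """Heuristic: a page with almost no extractable text is probably a scanned image."""
    return len(extracted_text.strip()) < min_chars


def ocr_pdf(pdf_path: str, dpi: int = 300, lang: str = "eng") -> list[str]:
    """Return one string per page, running Tesseract only where there is no text layer."""
    import fitz  # PyMuPDF
    import pytesseract
    from PIL import Image

    pages = []
    with fitz.open(pdf_path) as doc:
        for page in doc:
            text = page.get_text()
            if needs_ocr(text):
                # Render the page to an RGB bitmap (no alpha) and hand it to Tesseract.
                pix = page.get_pixmap(dpi=dpi)
                img = Image.frombytes("RGB", (pix.width, pix.height), pix.samples)
                text = pytesseract.image_to_string(img, lang=lang)
            pages.append(text)
    return pages
```

Rendering at around 300 DPI is a common starting point for Tesseract; lower resolutions usually cost accuracy.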

Be warned, though: OCR isn't magic. The output quality is highly dependent on the scan's resolution, lighting, and layout. It almost always introduces small errors that require a dedicated cleaning and sanitization step. For RAG systems built on financial reports, historical archives, or scanned academic papers, a solid OCR workflow is non-negotiable. It's the only bridge to making this locked-away content available to your LLM.

Alright, let's move past the theory and get our hands dirty with some code. Building a solid pdf to markdown converter workflow for a RAG system isn't about finding some magical, one-click tool. It’s about being deliberate and using proven techniques to keep a document's original structure and meaning intact. This is where programmatic control is a game-changer for retrieval accuracy.

Just ripping out the raw text is a recipe for disaster. You lose all the context. The real win comes from reliably identifying and reformatting headings, nested lists, and tricky tables. When you preserve that structure, the Markdown you create becomes a high-fidelity map of the original document—perfect for smart chunking and accurate retrieval later on.

Keeping the Structural Hierarchy with Python

Headings and lists are the skeleton of a document; they guide the logical flow. If you lose that hierarchy, retrieval suffers because context is fragmented. It’s a mess.

Using a library like PyMuPDF, we can analyze text properties—like font size and weight—to programmatically put that skeleton back together. A really effective way to do this is to loop through the text blocks and classify them based on their font attributes. Big, bold text? Probably a heading. Smaller, regular text? That’s your paragraph content.

Here's an actionable game plan for improved retrieval:

- Extract Text Blocks: Use `PyMuPDF` to pull out all the text blocks from a page, grabbing their content, coordinates, and font details.

- Profile Font Sizes: Look at all the font sizes used in the document. You'll quickly spot distinct tiers that correspond to H1, H2, H3, and body text.

- Apply Markdown Formatting: Based on those font profiles, wrap the text in the right Markdown syntax (like `##` for a second-level heading). This structure is crucial for heading-based chunking strategies.

This approach transforms a messy text dump into clean, organized Markdown that actually reflects the document's intended layout. If you want to dive deeper into the code, learning how to programmatically extract text from a PDF with Python will give you a solid foundation.
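The game plan above can be sketched like this. `iter_lines` reads PyMuPDF's `page.get_text("dict")` output (blocks, then lines, then spans, each span carrying a `size`); the other functions are plain Python and hypothetical names. Treating the size that covers the most characters as body text, and the next three larger sizes as H1 to H3, is an assumption that suits many reports but not every document.

```python
from collections import Counter


def iter_lines(page):
    """Yield (text, font_size) for every text line of a PyMuPDF page."""
    for block in page.get_text("dict")["blocks"]:
        for line in block.get("lines", []):  # image blocks carry no "lines" key
            spans = line["spans"]
            text = "".join(span["text"] for span in spans).strip()
            if text:
                # Round so that sizes like 11.98 and 12.0 land in the same tier.
                yield text, round(max(span["size"] for span in spans), 1)


def heading_levels(lines, max_levels=3):
    """Map font sizes larger than the body size to heading levels 1..max_levels."""
    chars_per_size = Counter()
    for text, size in lines:
        chars_per_size[size] += len(text)
    if not chars_per_size:
        return {}
    # Assumption: the size covering the most characters is body text.
    body_size = chars_per_size.most_common(1)[0][0]
    larger = sorted((s for s in chars_per_size if s > body_size), reverse=True)
    return {size: level for level, size in enumerate(larger[:max_levels], start=1)}


def lines_to_markdown(lines, max_levels=3):
    """Prefix heading-sized lines with '#' markers; everything else becomes a paragraph."""
    lines = list(lines)
    levels = heading_levels(lines, max_levels)
    out = []
    for text, size in lines:
        prefix = "#" * levels[size] + " " if size in levels else ""
        out.append(prefix + text)
    return "\n\n".join(out)
```

Profiling sizes across the whole document (for example `lines_to_markdown(line for page in doc for line in iter_lines(page))` inside a `with fitz.open(...) as doc:` block) gives steadier tiers than profiling page by page. Bold text (`span["flags"] & 16` in PyMuPDF) is a natural extra signal if size alone is ambiguous.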

Taming Complex Tables

Tables are a notorious pain point for any pdf to markdown converter. Too often, they turn into an unreadable jumble of text, completely destroying the relationships between data points, making retrieval of tabular data nearly impossible.

Libraries like pdfplumber are built specifically for this headache. It’s excellent at detecting the lines and cell boundaries within a PDF, which lets it extract tabular data with its structure mostly intact. You can then reformat that data into Markdown tables using pipes (|) and hyphens (-).
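A small sketch of that last step. `pdfplumber`'s `page.extract_tables()` returns each table as a list of rows, with `None` for empty or merged cells; the hypothetical `table_to_markdown` helper below assumes the first row is the header and escapes characters that would break a pipe table.

```python
def table_to_markdown(rows: list[list]) -> str:
    """Render pdfplumber-style rows (first row = header) as a GFM pipe table."""
    if not rows:
        return ""

    def cell(value) -> str:
        # None marks an empty/merged cell; newlines and pipes would break the table.
        text = "" if value is None else str(value)
        return text.replace("\n", " ").replace("|", "\\|").strip()

    width = max(len(row) for row in rows)
    padded = [[cell(v) for v in row] + [""] * (width - len(row)) for row in rows]
    header, body = padded[0], padded[1:]
    lines = ["| " + " | ".join(header) + " |", "|" + "---|" * width]
    lines += ["| " + " | ".join(row) + " |" for row in body]
    return "\n".join(lines)


# Usage with pdfplumber (assumes the library is installed):
# import pdfplumber
# with pdfplumber.open("report.pdf") as pdf:
#     for table in pdf.pages[0].extract_tables():
#         print(table_to_markdown(table))
```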

Practical Tip: For ridiculously complex tables, sometimes the best retrieval strategy is to extract the table as a structured format like JSON or CSV and store it separately, linked via metadata. You can embed a summary in your Markdown for semantic search while keeping the raw data queryable for exact lookups.
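One way to sketch that tip, using only the standard library: write the table to CSV for exact lookups and keep a short stub in the Markdown that points back to it through shared metadata. The `split_out_table` name, the stub wording, and the metadata keys are all hypothetical; in practice you might replace the stub with an LLM-written summary.

```python
import csv
import io


def split_out_table(rows, table_id, source_file):
    """Return (csv_text, markdown_stub, metadata) for a table stored outside the Markdown."""
    clean_rows = [["" if v is None else str(v).strip() for v in row] for row in rows]
    buffer = io.StringIO()
    csv.writer(buffer).writerows(clean_rows)
    header, body = clean_rows[0], clean_rows[1:]
    # Deterministic stand-in for a summary; it goes into the Markdown for semantic search.
    stub = f"[Table {table_id}: {len(body)} rows; columns: {', '.join(header)}]"
    # Shared keys let retrieval jump from the stub chunk to the stored CSV.
    metadata = {"table_id": table_id, "source_file": source_file, "format": "csv"}
    return buffer.getvalue(), stub, metadata
```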

The Overlooked (But Critical) Step of Sanitization

One of the most important steps people forget is sanitization. Raw text from a PDF is often littered with junk that pollutes your data and degrades embedding quality. I’m talking about repeating headers, footers, page numbers, and other artifacts from the PDF's layout.

Cleaning this stuff out programmatically is non-negotiable for producing RAG-ready Markdown. The good news is that these elements usually show up in the same place on every page, so you can use their coordinates to filter them out.

Think about a typical corporate report. The company name might be at the top of every single page, with the page number at the bottom. A simple but powerful sanitization script can be told to ignore any text blocks found within the top 10% and bottom 10% of a page's vertical space.

Here’s a quick cleaning checklist to start with:

- Remove Headers and Footers: Filter out text based on its y-coordinate.

- Fix Hyphenation: Find words broken across two lines and stitch them back together to preserve whole words for embeddings.

- Normalize Whitespace: Swap out multiple spaces or line breaks with a single one for clean paragraph breaks.

- Filter Out Artifacts: Use regular expressions to zap page numbers (like "Page 7 of 42") or other repeating junk.
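Most of that checklist (everything except header/footer removal, which needs coordinates) can be sketched with regular expressions. The patterns below are assumptions to adapt: the page-number rule removes any line consisting only of a number or "Page N of M", and the hyphen rule only joins lowercase word fragments, which will also merge genuine compounds like "well-known" when they break at a line end.

```python
import re

# A line holding only a page number, e.g. "7", "Page 7", or "Page 7 of 42".
PAGE_NUMBER_LINE = re.compile(
    r"^[ \t]*(?:page[ \t]+)?\d+(?:[ \t]+of[ \t]+\d+)?[ \t]*$", re.IGNORECASE | re.MULTILINE
)
# A lowercase word split by a hyphen at the end of a line, e.g. "retriev-\nal".
HYPHEN_BREAK = re.compile(r"([a-z])-\n([a-z])")


def clean_text(text: str) -> str:
    text = PAGE_NUMBER_LINE.sub("", text)                      # zap page-number lines
    text = re.sub(r"[ \t]+$", "", text, flags=re.MULTILINE)    # drop trailing spaces per line
    text = HYPHEN_BREAK.sub(r"\1\2", text)                     # stitch hyphenated words back
    text = re.sub(r"(?<!\n)\n(?!\n)", " ", text)               # single line breaks become spaces
    text = re.sub(r"[ \t]+", " ", text)                        # collapse runs of spaces/tabs
    text = re.sub(r"\n{3,}", "\n\n", text)                     # one blank line between paragraphs
    return text.strip()
```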

A Python Snippet for Basic Sanitization

Let's look at a conceptual Python example using PyMuPDF that shows how to remove headers and footers. This isn't just about converting; it's about cleaning as you go to improve retrieval quality.

```python
import fitz  # PyMuPDF


def sanitize_and_extract(pdf_path, margin=0.10):
    """Extract text while skipping blocks in the top and bottom `margin` of each page."""
    parts = []
    with fitz.open(pdf_path) as doc:
        for page in doc:
            # Define the content area: exclude the top 10% and bottom 10% by default
            page_height = page.rect.height
            header_limit = page_height * margin
            footer_limit = page_height * (1 - margin)

            # Each block is (x0, y0, x1, y1, text, block_no, block_type)
            for _x0, y0, _x1, y1, text, _block_no, block_type in page.get_text("blocks"):
                if block_type != 0:  # 1 = image block, which has no real text
                    continue
                # y0 is the block's top edge, y1 its bottom (y grows downward)
                if y0 > header_limit and y1 < footer_limit:
                    parts.append(text)
    return "".join(parts)


# Example usage
# clean_text = sanitize_and_extract("my_document.pdf")
# print(clean_text)
```

This simple script is just a starting point. By setting rules based on the document's layout, you can systematically strip away the noise. This ensures the final Markdown you feed your RAG pipeline is nothing but valuable, context-rich content, ready to be chunked, embedded, and retrieved with precision.

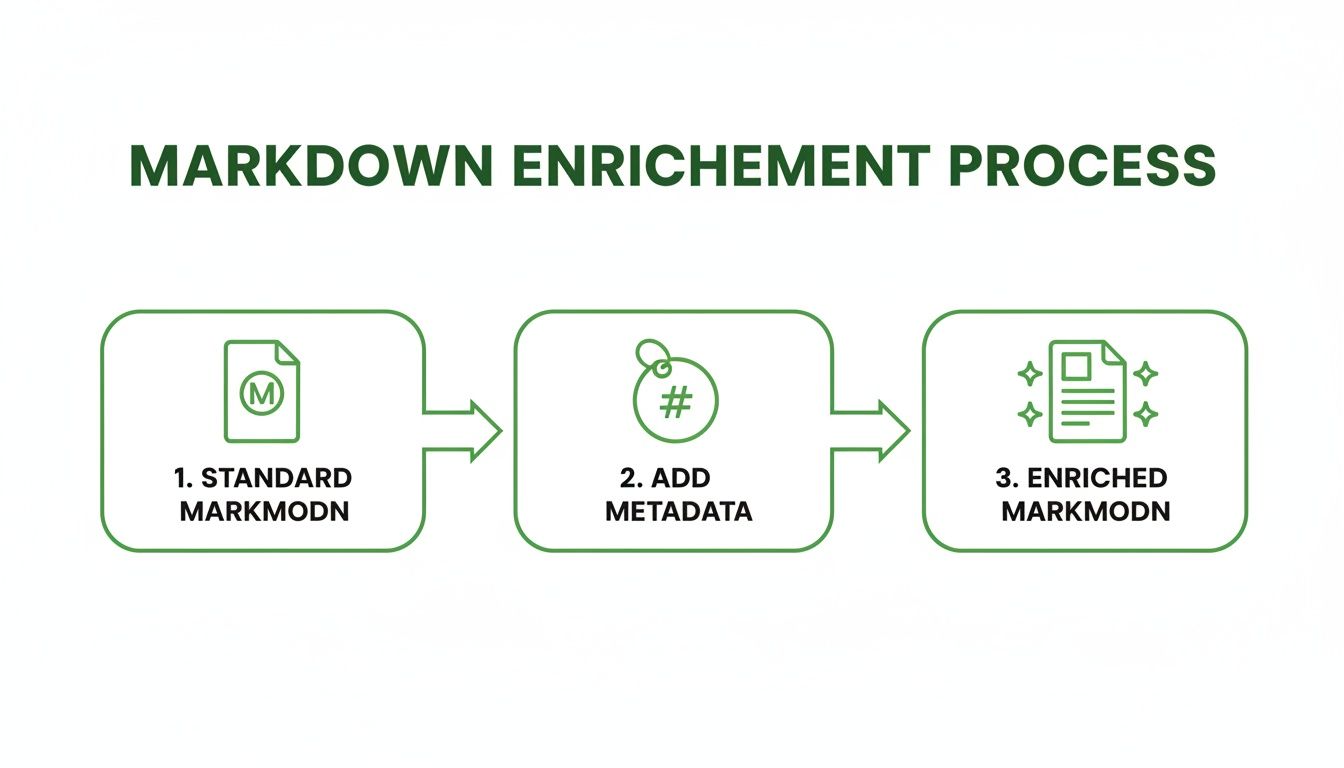

Enriching Markdown to Supercharge Retrieval

Getting your documents into clean, structured Markdown is a massive win, but it's really only half the job. Standard Markdown tells your system what the content is, but for a high-performance RAG system, you also need to know where it came from and why it matters. This is where enriching your Markdown with metadata comes in.

Enrichment is all about injecting that critical context directly into your documents. Think of it like adding digital post-it notes that your RAG system can actually read. This extra layer of information is what unlocks more precise filtering and retrieval, letting you narrow a search by source, section, or document type instead of relying on similarity alone.

Once you’ve nailed a clean conversion process, this is the next step to really supercharge your retrieval. It’s a crucial part of preparing data for indexing into a vector database, the engine that powers most modern RAG applications.

Why Metadata Is a RAG Superpower

Without metadata, every chunk of text is an island, completely disconnected from its source. When your RAG system pulls up a chunk, it has no idea if that snippet came from the introduction of a financial report or a footnote in an obscure academic paper. Adding metadata builds bridges between these islands, tying every chunk back to its source document, page, and section.

The need for this is more obvious than ever. The PDF editor software market is seeing explosive growth, with projections showing an expansion from USD 3.97 billion in 2024 to USD 17.71 billion by 2033. This surge isn't just about people wanting to view PDFs; it's about a growing demand for deeper document intelligence. You can discover more insights about PDF market growth on pdfreaderpro.com, but the takeaway is clear: we need tools that don't just convert documents—they must prepare them for smart systems.

Key Insight: Enriched Markdown lets your RAG system answer not just "what," but also "where from." The ability to cite sources, filter by document type, or prioritize information from specific sections is what separates a good RAG system from a truly great one.

By embedding this context, you enable powerful pre-retrieval filtering. Imagine asking, "What were the Q4 financial projections from the 2023 annual report?" A system using enriched Markdown can instantly filter for chunks with doc_type: 'annual_report' and year: 2023 before it even starts the semantic search. The result? A massive boost in both retrieval speed and accuracy.
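A minimal, library-free sketch of that filter-then-search idea. It assumes each chunk is a dict with a precomputed embedding under `vector` and a `metadata` dict (hypothetical field names); a real vector database performs both steps natively and much faster, so this only illustrates the logic.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def filtered_search(chunks, query_vector, filters, top_k=3):
    """Keep chunks whose metadata matches every filter, then rank them by similarity."""
    # Step 1: cheap exact-match filtering on metadata shrinks the candidate set.
    candidates = [
        c for c in chunks
        if all(c["metadata"].get(key) == value for key, value in filters.items())
    ]
    # Step 2: semantic ranking runs only on the survivors.
    candidates.sort(key=lambda c: cosine(c["vector"], query_vector), reverse=True)
    return candidates[:top_k]
```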

Practical Strategies for Metadata Injection

So, how do you actually get this metadata into your files? The most common and effective way is with YAML frontmatter. It's just a small block of key-value pairs that sits right at the top of your Markdown file, neatly fenced off from the main content. It’s clean, easy for humans to read, and just about every tool out there knows how to parse it.

Here’s a quick look at what enriched frontmatter could look like after a smart conversion script has done its work:

```yaml
---
source_file: "annual_report_2023.pdf"
original_page: 42
section_title: "Forward-Looking Statements"
doc_type: "financial_report"
author: "Corporate Finance Dept."
extraction_date: "2024-10-26"
---
```

This simple block packs a punch. Your RAG ingestion pipeline can easily parse this frontmatter and attach these key-value pairs as metadata to the vector embeddings for every chunk that comes from this document.
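A minimal parser for flat frontmatter like the example above, using only the standard library. It deliberately handles just `key: value` lines with quoted strings and integers; for nested YAML you would reach for PyYAML's `yaml.safe_load` instead. `split_frontmatter` is a hypothetical helper name.

```python
def split_frontmatter(markdown: str) -> tuple[dict, str]:
    """Split a '---'-fenced frontmatter block from the body (flat key: value pairs only)."""
    lines = markdown.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}, markdown
    try:
        end = next(i for i in range(1, len(lines)) if lines[i].strip() == "---")
    except StopIteration:
        return {}, markdown  # opening fence without a closing one: treat as plain text

    meta = {}
    for line in lines[1:end]:
        if not line.strip() or ":" not in line:
            continue
        key, _, raw = line.partition(":")
        value = raw.strip()
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]   # quoted string
        elif value.lstrip("-").isdigit():
            value = int(value)    # bare integer, e.g. original_page: 42
        meta[key.strip()] = value
    body = "\n".join(lines[end + 1:]).lstrip("\n")
    return meta, body
```

Each chunk cut from `body` can then inherit `meta` as its vector metadata.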

Automating Metadata Extraction

Of course, adding this information by hand is a non-starter at any real scale. The goal is to build this logic directly into your pdf to markdown converter process.

Here’s how you can think about automating it:

- File-Level Metadata: Your script can automatically grab the original filename (`source_file`) and stamp it with the current date (`extraction_date`).

- Page-Level Metadata: As the script iterates through the document, it should capture the page number (`original_page`) and link it to the text extracted from that page.

- Structural Metadata: When your parser hits a heading like "Forward-Looking Statements," it can apply that `section_title` to all the text that follows, right up until it finds the next heading. This allows for retrieval focused on specific document sections.

By building these metadata extraction steps into your conversion pipeline, every Markdown file you create is born ready for a high-performance RAG system. This systematic approach ensures your knowledge base isn't just a big pile of text, but a structured, queryable, and highly intelligent asset.
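A sketch of the file- and page-level steps, assuming you already have one Markdown string per page from whichever extraction approach you use. `pages_to_documents` and `frontmatter` are hypothetical names, and `doc_type` is supplied by the caller. Values are written with `json.dumps`, which produces double-quoted strings and bare integers that YAML parsers accept.

```python
import datetime
import json
from pathlib import Path


def frontmatter(meta: dict) -> str:
    """Render flat metadata as YAML frontmatter (JSON scalars are valid YAML)."""
    body = "\n".join(f"{key}: {json.dumps(value)}" for key, value in meta.items())
    return f"---\n{body}\n---\n"


def pages_to_documents(pdf_path, page_texts, doc_type, today=None):
    """Attach file-level and page-level metadata to each page's Markdown."""
    today = today or datetime.date.today()
    docs = []
    for page_number, text in enumerate(page_texts, start=1):
        meta = {
            "source_file": Path(pdf_path).name,       # file-level
            "original_page": page_number,             # page-level
            "doc_type": doc_type,                     # supplied by the caller
            "extraction_date": today.isoformat(),     # file-level
        }
        docs.append(frontmatter(meta) + "\n" + text)
    return docs
```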

Building Your Automated RAG Ingestion Pipeline

Alright, let's connect the dots. We've talked about all the individual pieces—conversion, sanitization, enrichment—but the real power comes from stringing them together into an automated workflow. This is where you move from one-off conversions to a robust, repeatable RAG ingestion pipeline that directly improves retrieval.

The goal is to build a system that can take a directory full of raw PDFs and, without manual intervention, turn them into structured, query-ready assets in your vector database. This isn't just about saving time; it's about enforcing quality and consistency. Every document gets processed the same way, leading to far more reliable retrieval results down the line.

From Raw PDF to High-Quality Markdown

First things first, you have to nail the conversion from PDF to clean, semantically rich Markdown. As we’ve covered, this means leaning on programmatic tools like Python libraries that can preserve the document's DNA—the headings, lists, tables, and code blocks—while intelligently stripping away the noise like page numbers, headers, and footers.

Don't underestimate this step. A sloppy conversion poisons the entire pipeline. If you start with messy, unstructured text, you'll spend all your subsequent steps just trying to clean it up. Get this right, and everything that follows becomes exponentially easier and more effective.

The idea is to transform a simple document into a much more valuable, intelligent asset, ready for your RAG system.

This diagram really hits home how adding that metadata layer is what makes your Markdown truly RAG-ready.

Intelligent, Context-Aware Chunking

Once you have pristine Markdown, it's time to chunk it. But we're not just crudely chopping text every 500 tokens. The name of the game is intelligent chunking. We want to create chunks that are small enough for an LLM’s context window but, more importantly, are complete, self-contained ideas.

The chunking strategy you choose has a massive impact on retrieval quality. Here are a few actionable approaches for better retrieval:

- Heading-Based Splitting: This is my go-to for well-structured documents. It uses the H1, H2, and H3 headings (`#`, `##`, and `###`) as natural break points. It's incredibly effective because it ensures you never split a logical section right down the middle, improving the contextual integrity of each chunk.

- Semantic Chunking: For messier or less structured documents, this is a game-changer. It uses embedding models to group sentences by their meaning, creating chunks that are thematically cohesive even when the formatting is all over the place.

- Recursive Splitting: This is a clever, hierarchical approach. It tries to split text along a preferred list of separators—like double newlines (paragraphs), then single newlines, then sentences. It’s a great way to find the most logical breakpoints available in the text.
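Here's a minimal sketch of recursive splitting, written from scratch rather than taken from any particular library. The separator order (paragraphs, lines, a rough `". "` sentence boundary, then spaces) and the 500-character default are assumptions to tune; production splitters usually measure length in tokens and add overlap between chunks.

```python
def recursive_split(text, max_chars=500, separators=("\n\n", "\n", ". ", " ")):
    """Split on the coarsest separator first, recursing to finer ones only when needed."""
    if len(text) <= max_chars:
        return [text] if text.strip() else []
    if not separators:
        # No separators left: fall back to a hard cut.
        return [text[i:i + max_chars] for i in range(0, len(text), max_chars)]

    sep, finer = separators[0], separators[1:]
    chunks, current = [], ""
    for piece in text.split(sep):
        candidate = piece if not current else current + sep + piece
        if len(candidate) <= max_chars:
            current = candidate  # keep packing pieces into the current chunk
            continue
        if current:
            chunks.append(current)
        if len(piece) <= max_chars:
            current = piece
        else:
            # This piece alone is too long: split it with the next, finer separator.
            chunks.extend(recursive_split(piece, max_chars, finer))
            current = ""
    if current:
        chunks.append(current)
    return chunks
```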

A Real-World Scenario: On a recent project building a RAG system for internal technical docs, we switched from basic fixed-size chunking to heading-based splitting. The result? Retrieval accuracy for specific feature-related questions shot up by over 20%. The chunks just made more sense because they mirrored the document's actual structure.

Tools like ChunkForge are built specifically for this part of the workflow, giving you a playground to test different strategies. If you want to dive deeper into this, our guide on document processing automation has some great insights.

Formatting and Ingesting into a Vector Database

The final leg of the journey is getting these smart, metadata-rich chunks into your vector database. This usually means packaging them up as JSON objects, which allows the database to index both the text content and all the valuable metadata you’ve attached.

Each JSON object should have the text of the chunk, of course, but also a metadata field containing everything you’ve extracted: the source filename, the original page number, the section heading, and any other custom tags you've added.
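A small sketch of that packaging step, assuming chunks arrive as dicts with `text` and optional per-chunk `metadata` (the function names and the zero-padded ID scheme are hypothetical). Document-wide fields are merged into every record, with per-chunk values taking precedence.

```python
import json


def build_records(chunks, base_metadata, id_prefix):
    """Package chunk texts plus shared and per-chunk metadata as JSON-ready dicts."""
    records = []
    for i, chunk in enumerate(chunks, start=1):
        records.append({
            "id": f"{id_prefix}_chunk_{i:03d}",
            "text": chunk["text"],
            # Per-chunk keys (page, section) override document-wide ones.
            "metadata": {**base_metadata, **chunk.get("metadata", {})},
        })
    return records


def to_jsonl(records):
    """One JSON object per line, a convenient format for batch ingestion."""
    return "\n".join(json.dumps(record) for record in records)
```

Some databases take parallel lists instead of objects; Chroma's `collection.add()`, for example, accepts `ids`, `documents`, and `metadatas`, which map directly onto these fields.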

Here’s what a final JSON object might look like, ready for a database like Pinecone or Chroma:

```json
{
  "id": "annual_report_2023_chunk_001",
  "text": "The forward-looking statements section outlines potential risks and uncertainties...",
  "metadata": {
    "source_file": "annual_report_2023.pdf",
    "original_page": 42,
    "section_title": "Forward-Looking Statements",
    "doc_type": "financial_report"
  }
}
```

When these chunks are embedded, the metadata is stored right alongside each vector. This is what unlocks metadata-filtered search: your RAG system can first filter results based on metadata (e.g., "only search financial reports from 2023") and then perform a semantic search on that smaller, more relevant subset. That two-step process is the secret to building a RAG pipeline that's fast, accurate, and ready to scale.

Common Questions About PDF to Markdown Conversion for RAG

When you're building a RAG pipeline, converting PDFs is where things often get messy. I've seen developers hit the same walls time and again. Let's walk through the most common questions and some field-tested answers to get you unstuck.

How Do I Handle Complex Tables from PDFs in Markdown?

Ah, tables. The classic PDF conversion nightmare. Your first move should be to grab a specialized Python library built for this, like camelot-py or pdfplumber. These are pretty good at figuring out basic row and column structures, which you can then wrangle into Markdown syntax.

But what about those truly awful tables with merged cells and nested structures? Don't despair. Here are actionable retrieval strategies:

- Serialize to Markdown: For simple tables, convert them directly to Markdown table format. This keeps the information inline and easily readable by an LLM.

- Convert to JSON: For complex tables, extract the data and structure it as a JSON object inside a Markdown code block. This preserves the structured data perfectly for programmatic use and allows the LLM to parse it as data rather than prose.
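A sketch of the JSON route. It turns a header row plus data rows into a list of records and wraps them in a fenced `json` block inside the Markdown. Two assumptions to flag: a `None` cell is treated as part of a vertically merged cell and inherits the value above it, and the fence is built as `"`" * 3` only so this snippet can itself live inside a Markdown code block. The function name is hypothetical.

```python
import json

FENCE = "`" * 3  # built programmatically so this snippet can sit inside a Markdown fence


def table_to_json_block(rows, caption=""):
    """Turn header + rows into records and wrap them in a fenced JSON block."""
    header = [f"col_{i}" if h is None else str(h).strip() for i, h in enumerate(rows[0])]
    records, previous = [], {}
    for row in rows[1:]:
        record = {}
        for col, value in zip(header, row):
            # Assumption: None marks a vertically merged cell, so reuse the value above it.
            record[col] = previous.get(col) if value is None else str(value).strip()
        records.append(record)
        previous = record
    block = f"{FENCE}json\n{json.dumps(records, indent=2)}\n{FENCE}"
    return f"{caption}\n\n{block}" if caption else block
```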

This way, even if the pretty formatting is gone, the actual data inside the table is preserved for accurate retrieval. For RAG, that's what really matters.

What’s the Best Way to Deal with Multi-Column Layouts?

If you've ever run a basic converter on a two-column PDF, you know the scrambled mess it creates. The text reads straight across the page, mixing up lines from both columns into gibberish.

To fix this, you need to get programmatic with a library that gives you coordinate-level access to the text. My go-to for this is PyMuPDF. It lets you see exactly where every word and line sits on the page.

The trick is to sort the text blocks logically before you piece them together. A simple but effective approach for a regular multi-column layout is to first assign each block to a column based on its horizontal position (the x-coordinate), then sort by column and, within each column, by vertical position (the y-coordinate). This ensures your script reads all the way down the first column before it ever jumps to the top of the second one. Sorting by y first and x second does the opposite: it reads straight across the page and interleaves the columns.
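A minimal sketch for a two-column page, operating on the tuples PyMuPDF returns from `page.get_text("blocks")` (`x0, y0, x1, y1, text, block_no, block_type`). Note that sorting purely by y and then x would read straight across both columns; grouping by column first avoids that. The midpoint rule is an assumption: full-width blocks such as titles count as left-column blocks, which works when they sit at the top of the page but not when they appear mid-page.

```python
def reading_order(blocks, page_width):
    """Order PyMuPDF "blocks" tuples column by column on a two-column page."""
    midpoint = page_width / 2

    def column(block):
        # Assumption: a block starting left of the midpoint belongs to the left column.
        return 0 if block[0] < midpoint else 1

    # Column first, then top-to-bottom (y0), then left-to-right (x0) as a tie-breaker.
    return sorted(blocks, key=lambda b: (column(b), b[1], b[0]))


# Usage with PyMuPDF:
# blocks = page.get_text("blocks")
# text = "".join(b[4] for b in reading_order(blocks, page.rect.width))
```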

Can I Automate the Removal of Headers and Footers?

You absolutely can, and you absolutely should. Headers and footers are just noise for a RAG system and will degrade the quality of your embeddings and retrieval.

The most reliable way to automate this is to define a "content area" for each page. Think about it: headers and footers almost always show up in the same place. You can write a simple rule to ignore any text that falls within a certain margin of the page's edge.

For instance, a great starting point is to tell your script to just throw away any text it finds in the top 10% or bottom 10% of the page height. This kind of rule-based filtering works wonders on structured documents like academic papers or company reports, cleaning up your data before it ever hits the RAG pipeline.

Tired of scripting your way around PDF quirks? ChunkForge gives you the tools to convert, chunk, and enrich your documents with the precision you need for high-performing RAG systems. Stop wrestling with messy data and get straight to building.

Start your free 7-day trial of ChunkForge today and see the difference for yourself.