Extracting Text from PDFs with Python: A Guide for High-Quality RAG Systems

A practical guide to extracting text from PDFs with Python using PyMuPDF, OCR, and structure-aware parsing for robust RAG pipelines.

Extracting text from a PDF with Python is the foundational first step for building a high-performing Retrieval-Augmented Generation (RAG) system. While libraries like PyPDF2, PyMuPDF (fitz), or pdfminer.six provide the tools, the real challenge lies in producing clean, structured data that enhances retrieval accuracy. This isn't just a file-reading task; it's the critical process of transforming documents into a reliable knowledge base for your AI.

Why High-Quality PDF Extraction Is Critical for RAG

The success of any Retrieval-Augmented Generation (RAG) system hinges entirely on the quality of the contextual data it retrieves. Feeding it garbled, poorly structured text from your PDFs guarantees poor performance. This isn't a minor issue; it fundamentally cripples the system's ability to find relevant information and generate accurate, coherent answers for your users.

The core problem is the PDF format itself. Designed for visual consistency, it prioritizes layout over logical content structure. This design choice is the source of common extraction failures that can poison a RAG pipeline before it even begins.

The Downstream Impact of Poor Extraction

When your Python script fails to extract text accurately, the result is "noise" that pollutes your vector database. Garbled characters, sentences smashed together out of order, and missing tables all create corrupted text chunks. When a user query comes in, the retrieval model is more likely to fetch these useless, irrelevant fragments.

The old saying "garbage in, garbage out" has never been more true. A RAG system is only as smart as its knowledge base. Flawed extraction poisons that base, leading to weak, nonsensical, or just plain wrong LLM outputs. And that’s how you lose user trust.

For AI engineers, mastering PDF extraction is the cornerstone of building a reliable RAG system. A shaky foundation means everything built upon it will be unstable.

Here are the direct consequences for RAG performance:

- Weak Retrieval Accuracy: Critical keywords and semantic concepts are lost or broken apart, making it impossible for the retrieval model to match relevant chunks to a user's query.

- Contextual Misunderstanding: A jumbled layout might merge text from unrelated sections, tricking the LLM into making false connections and generating incorrect information.

- Incomplete Knowledge: Missing tables, footnotes, or sidebars create significant gaps in the knowledge base, preventing the model from providing comprehensive answers.

This guide provides actionable strategies to solve these problems. Building effective AI document processing workflows starts with clean, well-structured data. By focusing on high-fidelity extraction from the beginning, you provide your RAG system with the pristine, reliable information it needs to excel.

Choosing Your Python PDF Extraction Library

When you set out to pull text from a PDF with Python, your choice of library directly impacts the quality of the text you feed into your RAG system. This decision is a critical first step; the wrong tool can leave you fighting an uphill battle against messy data from the start.

The Python ecosystem offers several solid options, but three dominate for RAG-related work: PyPDF2, pdfminer.six, and PyMuPDF (fitz). Each has distinct strengths and is suited for different types of documents and project requirements.

PyPDF2: The Simple Starter

Most developers begin with PyPDF2 (now deprecated; its maintained successor is pypdf, which keeps the same `PdfReader` interface). It's straightforward and effective for basic tasks like reading text from simple, digitally native PDFs. If your source documents are clean, single-column reports without complex tables or layouts, PyPDF2 can deliver results with minimal code.

However, its simplicity is also its weakness for RAG applications. It struggles with complex layouts, often scrambling text from multiple columns or failing to extract it at all. For a production RAG pipeline that must handle diverse document formats, relying solely on PyPDF2 is often insufficient.

pdfminer.six: The Layout Analysis Expert

When preserving the original reading order and document structure is paramount for retrieval, pdfminer.six is the right tool. It excels at analyzing the precise position of every character, line, and figure. This capability is crucial for RAG because it allows you to reconstruct the document's logical flow, correctly identifying headers, paragraphs, and list items.

This deep analysis comes at the cost of speed and simplicity. The learning curve is steeper than PyPDF2, but the output is far cleaner and more logically structured, making it a strong choice for academic papers or legal documents where layout is integral to meaning.

PyMuPDF (fitz): The High-Performance All-Rounder

For production RAG systems that require both speed and precision, PyMuPDF (fitz) is hard to beat. As a Python binding for the C-based MuPDF library, it offers exceptional performance, making it well suited to processing thousands of documents. It efficiently extracts text, images, and metadata, providing the speed needed for scalable data pipelines.

The real magic of PyMuPDF is how it balances raw speed with detailed layout information. You can extract text blocks along with their coordinates, letting you preserve document structure without the performance drag of other layout-aware libraries.

Its top-tier performance has made it a favorite for enterprise AI projects. In fact, programmatic PDF extraction has become a core piece of modern data pipelines. Searches for PyMuPDF tutorials shot up by around 40% between 2022 and 2024 as more teams needed a fast, layout-aware solution for handling massive volumes of native PDFs. You can explore more insights on PyMuPDF's rise in popularity to see the trend.

So, which one is right for you? It’s all about the job at hand. For a quick and simple task, PyPDF2 is fine. For deep layout analysis where speed isn't the top priority, pdfminer.six is excellent. But for most production-grade RAG systems that demand speed, reliability, and accuracy, PyMuPDF is the clear winner.

To make the choice even clearer, here’s a quick head-to-head comparison of how these libraries stack up for a typical RAG workflow.

Python PDF Library Comparison for RAG

| Library | Key Strength | Best For | Layout Preservation | Speed |
|---|---|---|---|---|
| PyPDF2 | Simplicity, ease of use | Quick scripts, simple text extraction, metadata handling | Low (struggles with columns/tables) | Fast |
| pdfminer.six | Detailed layout analysis | Academic papers, complex reports where structure is key | High (preserves text flow and coordinates) | Slow |
| PyMuPDF (fitz) | Blazing-fast performance | High-volume batch processing, production RAG pipelines | Medium-High (extracts text with coordinates) | Very Fast |

Ultimately, picking your library is a trade-off between simplicity, structural detail, and raw speed. For any serious RAG application designed to handle a variety of real-world documents, the performance and versatility of PyMuPDF make it the go-to solution.

Handling Native vs. Scanned PDFs with Smart OCR

PDFs come in two main flavors: "born-digital" (native) documents with embedded, selectable text, and scanned documents, which are essentially images of text. A robust RAG pipeline must handle both intelligently. Using the same extraction method for both is a common mistake that leads to failure or low-quality, garbled output.

The optimal strategy is to first determine the type of PDF you're dealing with. A simple check can reveal if a page contains an embedded text layer. If it does, a fast library like PyMuPDF is the best choice for clean, accurate extraction. If not, it's a scanned page, and you must pivot to Optical Character Recognition (OCR).

The Hybrid Workflow Strategy

An efficient pipeline uses a hybrid strategy: start with the fastest and most accurate method (direct text extraction) and only fall back to the slower, more resource-intensive OCR process when necessary. This saves significant processing time, especially when dealing with large volumes of mixed-format documents.

Directly extracting text from a native PDF can achieve over 98% token recall, preserving the exact information. In contrast, open-source OCR tools like Tesseract typically achieve 80% to 95% accuracy, which varies based on image quality, resolution (DPI), and language. The hybrid approach ensures you only accept this accuracy trade-off for pages that have no machine-readable text.

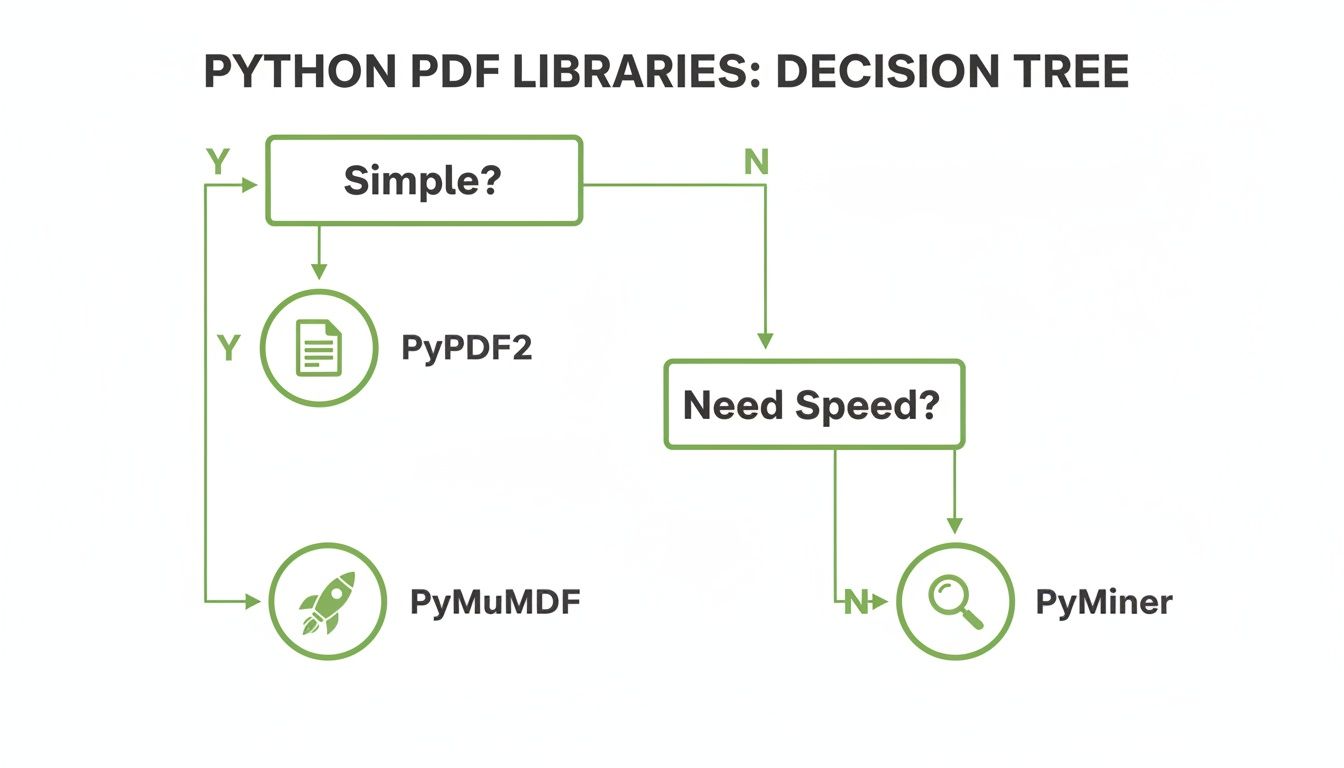

This decision tree gives you a simple mental model for picking the right Python library for the job.

As the flowchart shows, the best choice really comes down to balancing simplicity, speed, and how deep you need to go for your specific task.

Implementing OCR with Pytesseract

When your script identifies a page as an image, it's time to use an OCR engine. The go-to open-source option is Tesseract, accessed in Python via the pytesseract library. The workflow involves two main steps:

- Convert the PDF page to an image. Use a library like `pdf2image` to render a specific page into a high-resolution image object.

- Run OCR on that image. Pass the image object to `pytesseract`, which sends it to the Tesseract engine to recognize and return the text.

Pro Tip: OCR quality is directly tied to input image quality. Pre-processing images before sending them to Tesseract can dramatically improve accuracy. Increase the resolution to at least 300 DPI, convert the image to grayscale, and deskew it to straighten any tilted text. These small steps yield significant gains in text recognition.
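Deskewing is the least obvious of these steps. One common approach, sketched here under the assumption that Pillow and NumPy are available, is a projection-profile search: rotate the page through a small range of candidate angles and keep the angle at which the row-by-row ink profile has the sharpest transitions, since level text lines produce crisp peaks and gaps. The ±5° range, 0.5° step, and function names are illustrative assumptions.

```python
import numpy as np
from PIL import Image


def estimate_skew_angle(image: Image.Image, max_angle: float = 5.0, step: float = 0.5) -> float:
    """Return the rotation (degrees, counter-clockwise) that best levels the text lines."""
    gray = image.convert("L")
    best_angle, best_score = 0.0, -1.0
    for angle in np.arange(-max_angle, max_angle + step / 2, step):
        rotated = gray.rotate(float(angle), resample=Image.BICUBIC, fillcolor=255)
        ink = 255.0 - np.asarray(rotated, dtype=np.float64)  # dark text -> large values
        profile = ink.sum(axis=1)  # total ink per pixel row
        # Level lines give abrupt row-to-row changes; skewed lines smear them out
        score = float(np.sum(np.diff(profile) ** 2))
        if score > best_score:
            best_angle, best_score = float(angle), score
    return best_angle


def deskew(image: Image.Image, **search_kwargs) -> Image.Image:
    """Rotate a grayscale copy of the page by the estimated correction angle."""
    angle = estimate_skew_angle(image, **search_kwargs)
    return image.convert("L").rotate(angle, resample=Image.BICUBIC, expand=True, fillcolor=255)
```

Each candidate angle costs a full-page rotation, so on 300 DPI scans it can be worth estimating the angle on a downscaled copy and applying it to the full-resolution image.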

This hybrid model ensures you're always using the right tool for the job. You get the speed and precision of direct extraction for native PDFs, plus a reliable (if slower) backup for scanned documents. This kind of intelligent routing is the key to building a robust system that can handle the messy reality of real-world documents.

And while OCR is great for text, other elements like tables often need their own specialized tools. For more complex layouts, you might want to check out our guide on extracting tables from PDF files.

How to Preserve Document Structure and Metadata

Extracting raw text is only half the battle. For a high-performing RAG system, context is king, and much of that context is embedded in the document's structure: its headings, lists, paragraphs, and tables.

Ignoring this structure is like tearing pages out of a book and shuffling them. The words are there, but their relationship and meaning are lost. This leads to fragmented, low-quality chunks that hurt retrieval relevance.

To build a truly effective retrieval system, you must move beyond flat text extraction. The goal is to capture the structural clues from the document's layout to create semantically meaningful chunks for your vector database.

Moving Beyond Plain Text with PyMuPDF

This is where a library like PyMuPDF truly excels. It doesn't just see a wall of text; it views a document as a collection of blocks, spans, and characters, each with its own properties. This granularity is precisely what you need to reconstruct the document's original hierarchy.

With PyMuPDF, you can access a wealth of metadata for every text element, including:

- Bounding Box Coordinates: The exact `(x0, y0, x1, y1)` position of text on the page, critical for determining reading order and grouping related content.

- Font Size and Style: Easily identify font size, weight (bold), and style (italic), which are powerful heuristics for differentiating headers from body text.

- Font Name: Different fonts often signal different content types, such as `Courier` for code blocks.

By leveraging this metadata, you can transform a simple "extract text from PDF" script into a sophisticated document parser that feeds high-quality, structured data to your RAG system.

A Practical Example of Identifying Headers

Consider a technical manual where section titles are consistently larger and bolder than paragraph text. A basic text extraction would flatten this structure, creating a single, confusing blob of text that mixes headings and content.

Instead, you can iterate through PyMuPDF's text blocks and use their properties to make intelligent inferences about their role. For example, a simple Python script can analyze the font size and style of each text block to flag potential headings.

Here’s a conceptual look at how you might implement this:

```python
import fitz  # This is the import for PyMuPDF

# Open your PDF
doc = fitz.open("your_document.pdf")
page = doc[0]  # Get the first page

# Extract text with detailed structure
blocks = page.get_text("dict")["blocks"]

for block in blocks:
    for line in block.get("lines", []):
        for span in line.get("spans", []):
            # A simple heuristic: check if text is bold and larger than a threshold
            is_bold = span["flags"] & 2**4
            is_large = span["size"] > 14
            if is_bold and is_large:
                print(f"HEADER: {span['text']}")
            else:
                print(f"BODY: {span['text']}")

doc.close()
```

This snippet demonstrates a powerful starting point. It checks if each text span is bold (span["flags"] & 2**4) and has a font size over 14 points, tagging it as a "HEADER." While not foolproof, this is a massive improvement over raw text extraction for creating structured chunks.

The core idea here is to use layout properties as proxies for semantic meaning. A larger font implies importance (a title), consistent indentation suggests a list item, and text organized into a grid is almost certainly a table.

By preserving this structural context, you’re no longer just extracting text; you're extracting knowledge. This enriched data allows you to create highly contextualized chunks for your RAG system. The result? When a user asks a question, the retrieved information is not just relevant but also structurally complete. This is what separates a good RAG system from a great one.

Transforming Extracted Text into RAG-Ready Chunks

Perfectly extracted text is just raw material. The next, and arguably more critical, phase for RAG is chunking: strategically breaking down the text into smaller, context-rich pieces. These chunks are the discrete units of knowledge your retrieval model will search. Poor chunking will sabotage your RAG's performance, serving up context that's either incomplete or irrelevant.

The goal is to create chunks that are semantically self-contained yet aware of their position within the broader document structure.

Selecting the Right Chunking Method

Your chunking strategy has a massive impact on retrieval quality. There is no one-size-fits-all solution; the optimal choice depends on your document's structure and the goals of your RAG application.

- Fixed-Size Chunking: The most basic method, splitting text every X characters or tokens. It's simple but often breaks sentences mid-thought, destroying semantic meaning. This approach is generally not recommended for RAG systems where context is key.

- Paragraph-Based Chunking: A much better starting point. Splitting along natural breaks like paragraphs or sections keeps related ideas together, preserving local context and creating coherent, meaningful chunks. This is a solid default strategy.

- Semantic Chunking: An advanced technique that uses embedding models to group sentences by thematic similarity, typically by starting a new chunk wherever the similarity between consecutive sentences drops sharply. This creates chunks that are semantically cohesive rather than bounded by an arbitrary length.
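As a starting point, paragraph-based chunking can be approximated by splitting on blank lines and packing consecutive paragraphs into chunks up to a size limit. The sketch assumes paragraphs are separated by blank lines in the extracted text and uses a character limit as a stand-in for a token budget; `paragraph_chunks` is a hypothetical name.

```python
import re


def paragraph_chunks(text: str, max_chars: int = 1200) -> list[str]:
    """Pack whole paragraphs into chunks of at most max_chars characters."""
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]
    chunks: list[str] = []
    current = ""
    for para in paragraphs:
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars:
            current = candidate  # paragraph still fits in the current chunk
        else:
            if current:
                chunks.append(current)
            current = para  # an oversized paragraph stays whole
    if current:
        chunks.append(current)
    return chunks
```

An oversized paragraph is kept whole rather than cut mid-sentence; a production pipeline might split it further by sentence.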

For most RAG projects, paragraph-based chunking (or section-based, if you've identified headers) offers the best balance of simplicity and retrieval effectiveness. For a deeper dive, explore our guide on chunking strategies for RAG.

Enhancing Chunks with Overlap and Metadata

After choosing a chunking method, two techniques can significantly boost retrieval performance: overlap and metadata enrichment.

Implementing chunk overlap—including a small portion of the previous or next chunk in the current one—provides crucial context continuity. This prevents the loss of information at chunk boundaries and helps the retrieval model understand the relationship between adjacent pieces of text.
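A simple way to add overlap is a sliding window whose step is smaller than the window. This sketch counts whitespace-separated words as a rough proxy for tokens (an assumption; a real pipeline would usually count with the embedding model's tokenizer), and the default sizes are illustrative.

```python
def sliding_window_chunks(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into word windows where consecutive chunks share `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reaches the end of the text
    return chunks
```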

The real power move is enriching each chunk with metadata. This transforms a simple piece of text into a rich, filterable data object. Think of it like adding a detailed index card to every single chunk.

Attaching metadata makes your chunks far more useful for retrieval. You can embed critical information such as:

- Source Document: The name of the original PDF file.

- Page Number: The exact page where the text originated.

- Section Header: The heading or subheading the chunk belongs to, providing vital structural context.
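One way to carry this metadata is a small dataclass per chunk. The sketch assumes an upstream step has already labeled each text element as `"header"` or `"body"` (for example with a font-size heuristic) and emits `(page, kind, text)` tuples; the `Chunk` fields and helper names are hypothetical and should match whatever your vector store expects.

```python
from dataclasses import asdict, dataclass
from typing import Iterable, Optional


@dataclass
class Chunk:
    text: str
    source: str  # original PDF file name
    page: int  # 1-based page number
    section: Optional[str]  # nearest preceding header, if any


def build_chunks(elements: Iterable[tuple[int, str, str]], source: str) -> list[Chunk]:
    """Attach source, page, and section metadata to each body element."""
    chunks: list[Chunk] = []
    section: Optional[str] = None
    for page, kind, text in elements:
        text = text.strip()
        if not text:
            continue
        if kind == "header":
            section = text  # following body text belongs to this section
        else:
            chunks.append(Chunk(text=text, source=source, page=page, section=section))
    return chunks


def to_records(chunks: list[Chunk]) -> list[dict]:
    """Convert chunks to plain dicts, e.g. for a vector store's metadata field."""
    return [asdict(c) for c in chunks]
```

The `source` and `page` fields are enough to render a citation such as "manual.pdf, p. 2" next to each retrieved passage.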

With this metadata, your RAG system can perform filtered searches, provide precise source citations, and reconstruct the document's context on the fly. This is how a basic Python script for extracting text from a PDF evolves into a powerful data preparation pipeline for a truly intelligent AI system.

Common PDF Extraction Questions Answered

Building a robust PDF extraction pipeline for a RAG system inevitably involves tackling real-world challenges. From messy OCR output to tables that get mangled into gibberish, these are the common hurdles that can disrupt an otherwise effective workflow.

Let's address the most frequent questions that arise when trying to extract text from PDF files using Python for RAG applications.

How Can I Improve OCR Accuracy for Scanned PDFs?

This is a critical question for any RAG system ingesting scanned documents. Improving OCR accuracy almost always begins with image pre-processing. Before sending a page to an OCR engine like Tesseract, your primary goal is to provide the cleanest possible image.

Start by ensuring a resolution of at least 300 DPI (dots per inch). Then, apply computer vision techniques to enhance the image:

- Deskewing: Straighten pages that were scanned at an angle to ensure level text.

- Binarization: Convert the image to pure black and white to create a sharp contrast between text and background.

- Noise Removal: Eliminate random specks, shadows, and other artifacts that can be misinterpreted as characters.
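Binarization and noise removal can be done with Pillow alone. This is a minimal sketch: the fixed threshold of 160 and the 3x3 median filter are assumptions to tune per corpus, and a single global threshold struggles with uneven lighting, where adaptive methods such as Otsu's tend to do better. The helper name is hypothetical.

```python
from PIL import Image, ImageFilter, ImageOps


def clean_for_ocr(image: Image.Image, threshold: int = 160, median_size: int = 3) -> Image.Image:
    """Grayscale, then median-filter denoise, then fixed-threshold binarization."""
    gray = ImageOps.grayscale(image)
    # A small median filter removes isolated specks while keeping glyph strokes
    denoised = gray.filter(ImageFilter.MedianFilter(size=median_size))
    # Pixels darker than the threshold become black (0); everything else white (255)
    return denoised.point(lambda p: 0 if p < threshold else 255)
```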

You can further guide the OCR engine by providing explicit parameters. Specify the language (e.g., -l eng for English) and experiment with Page Segmentation Modes (PSMs). For example, PSM 6 (assume a single uniform block of text) is effective for simple paragraphs but tends to interleave lines from adjacent columns on multi-column layouts, where the default fully automatic mode (PSM 3) is usually a better starting point. Choosing the right PSM for the document's structure is key.

My Extracted Text Has Awkward Line Breaks. How Do I Fix This?

This common problem occurs because PDFs position text using coordinates, not logical paragraphs. Extraction libraries often preserve these positional line breaks, which fragment sentences and harm semantic meaning.

The solution is a post-processing step to intelligently rejoin broken lines.

A simple yet effective heuristic is to join any line that doesn't end with punctuation. A more sophisticated approach is to check if the subsequent line begins with a lowercase letter—a strong indicator that it's a continuation of the previous sentence. For words hyphenated across lines, write a function to remove the hyphen, merge the word parts, and validate the result against a dictionary.

The key takeaway is that raw extracted text is rarely clean enough for a RAG system. A dedicated cleaning and re-joining step is not optional; it’s a required part of any high-quality data preparation pipeline.

What Is the Best Way to Handle Tables in PDFs?

Tables are notoriously difficult. Standard text extraction tools see them as a jumble of text and numbers, destroying their tabular structure and making the data unusable for RAG.

For native PDFs, use a specialized library designed for table parsing. Tools like camelot or tabula-py can interpret the vector-based structure of tables and extract the data into a clean, structured format like a CSV or pandas DataFrame.
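A sketch of the camelot route, converting each detected table to a Markdown string that can be stored as its own chunk. It assumes camelot-py and its system dependencies are installed and imports it inside the function so the formatting helper works without it. It uses camelot's `"lattice"` flavor, which relies on ruling lines between cells (`"stream"` is the whitespace-based alternative), and treats the first row as the header. Markdown is one reasonable serialization choice, not a requirement; the helper names are hypothetical.

```python
def rows_to_markdown(rows: list[list]) -> str:
    """Render table rows (first row treated as the header) as a Markdown table."""
    if not rows:
        return ""
    # Flatten in-cell line breaks and escape pipes so cells cannot break the table
    cells = [[str(c).replace("\n", " ").replace("|", "\\|").strip() for c in row] for row in rows]
    header, *body = cells
    lines = ["| " + " | ".join(header) + " |", "|" + "---|" * len(header)]
    lines += ["| " + " | ".join(row) + " |" for row in body]
    return "\n".join(lines)


def extract_tables_markdown(pdf_path: str, pages: str = "1", flavor: str = "lattice") -> list[str]:
    """Detect tables with camelot and return one Markdown string per table."""
    import camelot  # optional heavy dependency, imported only when needed

    tables = camelot.read_pdf(pdf_path, pages=pages, flavor=flavor)
    # Each camelot Table exposes its cells as a pandas DataFrame via .df
    return [rows_to_markdown(table.df.values.tolist()) for table in tables]
```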

For scanned PDFs, simple OCR is insufficient. This is where advanced Document AI services excel. Cloud services such as Google Cloud Document AI or Amazon Textract are pre-trained to recognize and extract tabular data from images with high accuracy. They are an excellent choice when dealing with complex, image-based tables and are often a necessary investment for RAG systems that rely on this type of data.

Ready to stop wrestling with PDF parsing and start building? ChunkForge is a contextual document studio designed to convert your documents into perfectly optimized, RAG-ready chunks. Go from raw PDF to production-ready assets in minutes. Start your free trial at https://chunkforge.com.