How to Get Keywords from Text to Power Your RAG System

Discover how to get keywords from text using advanced extraction techniques that supercharge RAG system accuracy. Actionable insights for AI engineers.

When standard semantic search is falling short in your Retrieval-Augmented Generation (RAG) system, one of the most effective fixes is to extract precise keywords from your text and attach them to each chunk as metadata. This step turns vague document chunks into filterable assets, directly boosting retrieval accuracy and cutting down on the irrelevant noise that degrades your LLM's answers.

Why Your RAG System Is Missing the Mark

Even the most advanced Large Language Models (LLMs) are useless if they can't find the right information to begin with. This is the core challenge in RAG and where many systems fail. The gap between a user's query and the documents your system retrieves leads to generic or incorrect answers, undermining the entire purpose of your RAG pipeline.

This "retrieval gap" exists because simple semantic search, while powerful, isn't always enough. It’s excellent at finding documents that are thematically similar, but it often misses the critical, specific details needed for a precise answer.

The Problem with Vague Retrieval

Consider a common scenario: a customer support bot needs to find information about Error 0x80070057 within a large knowledge base of technical manuals. A standard semantic search might retrieve chunks about general "system errors" or "installation problems" because their embeddings sit close to the query's embedding in vector space.

However, it could easily miss the one document that contains the exact error code, especially if that chunk is short or surrounded by less relevant text. The retrieval step gets close but fails to find the needle in the haystack. The result is a response that seems helpful but doesn't solve the user's problem—a classic failure in RAG retrieval that keyword-based filtering can solve.

Keywords as a Strategic Advantage

This is where the ability to get keywords from text becomes a game-changer for RAG retrieval. By extracting precise, meaningful keywords and attaching them as metadata to each document chunk, you enable a powerful hybrid search strategy. Instead of relying solely on vector similarity, you can now execute filtered queries.

By treating keywords as critical metadata, you transform vague document chunks into highly retrievable, filterable assets. This simple practice directly improves relevance, reduces noise, and elevates the quality of your RAG's answers.

This approach offers a direct path to better retrieval accuracy, the foundation of any high-performing RAG system. For more strategies on building a robust retrieval pipeline, see our guide to RAG pipeline optimization.

Extracting keywords and enriching metadata provides immediate, actionable benefits for your RAG system's retrieval performance:

- Improved Precision: You can filter for chunks containing an exact term, such as a product name, an error code, or a specific function, so the chunk that actually mentions it is retrieved instead of chunks that are merely similar.

- Reduced Noise: By narrowing the search space before the semantic step, you keep the LLM from being distracted by documents that are thematically related but irrelevant to the specific question.

- Enhanced Context: Keywords provide the LLM with immediate, explicit context about a chunk's content, helping it synthesize a more accurate and informed response.

Ultimately, mastering how to get keywords from text isn't just a text-processing task. It's a fundamental strategy for building a reliable RAG system that delivers answers users can trust.

Choosing Your Keyword Extraction Toolkit

Selecting the right method to get keywords from your text is a critical engineering decision. It directly impacts your RAG system's retrieval effectiveness. The goal isn't just to grab words, but to identify contextually relevant terms that serve as precise pointers to your data, enabling more accurate retrieval.

We'll explore four distinct approaches, each with its own strengths and ideal use cases within a RAG pipeline.

Statistical Methods: The Fast And Simple Approach

The traditional way to find keywords is based on statistical frequency. The classic example is TF-IDF (Term Frequency-Inverse Document Frequency). Its logic is simple: if a term appears frequently in one document but is rare across the entire collection, it's likely important.

For a RAG system, this means TF-IDF can quickly flag specific jargon, error codes, or product SKUs that are vital to one document but absent in others. It's extremely fast and easy to implement, making it a solid choice for initial filtering where speed is paramount.
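The scoring rule can be sketched with the standard library alone. This is a minimal illustration, not a production implementation. The regex tokenizer, the smoothed IDF formula log((1 + N) / (1 + df)), and the helper names `tokenize` and `tfidf_keywords` are our own choices. In practice you would likely use a library vectorizer such as scikit-learn's `TfidfVectorizer`.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    # Lowercase and keep '-' or '.' inside tokens, so identifiers such as
    # "0x80070057" or "79-b" survive as single terms
    return re.findall(r"[a-z0-9]+(?:[-.][a-z0-9]+)*", text.lower())


def tfidf_keywords(docs: List[str], top_n: int = 3) -> List[List[str]]:
    """Return the top_n highest-scoring TF-IDF terms for each document."""
    tokenized = [tokenize(doc) for doc in docs]
    n_docs = len(docs)
    # Document frequency: the number of documents each term appears in
    df = Counter(term for tokens in tokenized for term in set(tokens))

    results = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens) or 1
        scores = {
            # Term frequency in this document x smoothed inverse document
            # frequency; a term present in every document scores log(1) = 0
            term: (count / total) * math.log((1 + n_docs) / (1 + df[term]))
            for term, count in counts.items()
        }
        # Sort by descending score, breaking ties alphabetically for stable output
        ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
        results.append([term for term, _ in ranked[:top_n]])
    return results


docs = [
    "Error 0x80070057 appears during installation. Error 0x80070057 blocks the installer.",
    "Installation guide: run the installer and restart.",
    "Overview of system errors and installation problems.",
]
print(tfidf_keywords(docs, top_n=2)[0])  # → ['0x80070057', 'error']
```

"Installation" appears in every document, so it scores zero. The error code is unique to the first document, so it rises to the top. This sketch does no stop-word handling. Common words are suppressed only by their low IDF.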

The major drawback is its lack of semantic understanding. To TF-IDF, "laptop" and "notebook computer" are entirely different concepts.

Graph-Based Methods: Finding Influential Terms

Methods like TextRank, inspired by Google's PageRank algorithm, offer more sophistication. They model the text as a network where words are nodes and their co-occurrence creates connections. The algorithm then identifies the most "influential" words—those central to the document's main themes.

This technique is useful for RAG when you need to retrieve documents based on their core topics, not just specific terms. For a long user manual, TextRank might highlight "troubleshooting," "installation," and "configuration." It provides a high-level view of a chunk's content, suitable for broader thematic filtering.

In practice, graph-based methods often produce keywords that are better for broad topic filtering. While TF-IDF finds specific needles, TextRank finds the section of the haystack where those needles are most likely to be.
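The core of the idea fits in a short sketch. It builds a co-occurrence graph and runs a PageRank-style power iteration over it. This is a simplified illustration with hypothetical names. The original TextRank method filters candidates by part of speech (nouns and adjectives) and merges adjacent top-ranked words into multi-word phrases. Here a tiny stop list stands in for that filter, and phrase merging is omitted.

```python
import re
from collections import defaultdict
from typing import Dict, List, Set

# A tiny illustrative stop list standing in for a part-of-speech filter
STOP_WORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "for", "is", "on", "with"}


def textrank_keywords(text: str, window: int = 3, damping: float = 0.85,
                      iterations: int = 50, top_n: int = 5) -> List[str]:
    words = [w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOP_WORDS]

    # Build an undirected co-occurrence graph: link words that appear
    # within `window` consecutive positions of each other
    graph: Dict[str, Set[str]] = defaultdict(set)
    for i, word in enumerate(words):
        for other in words[i + 1 : i + window]:
            if other != word:
                graph[word].add(other)
                graph[other].add(word)

    # PageRank-style power iteration: a word is important if it is linked
    # to other important words
    scores = {word: 1.0 for word in graph}
    for _ in range(iterations):
        scores = {
            word: (1 - damping)
            + damping * sum(scores[n] / len(graph[n]) for n in graph[word])
            for word in graph
        }

    # Highest score first, ties broken alphabetically
    return sorted(scores, key=lambda w: (-scores[w], w))[:top_n]


text = "installation drivers installation logs installation errors installation firmware"
print(textrank_keywords(text, window=2))
```

In this example, "installation" sits at the center of a star-shaped graph, so it ranks first. That is the "central theme" behavior described above.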

Embedding-Based Methods: The Semantic Powerhouse

This is where modern keyword extraction offers the most significant advantages for RAG retrieval. Embedding-based techniques use deep learning models like BERT to understand the meaning behind the words. These models map both the document and candidate keywords into a high-dimensional vector space.

The process involves comparing the vector of the entire text chunk with the vectors of candidate keywords. The keywords whose embeddings are closest (most semantically similar) to the document's overall meaning are selected.

This provides a massive retrieval advantage: you can identify semantically related keywords even if they aren't explicitly mentioned. A document discussing "CPU overheating" and "fan noise" might correctly yield "thermal management" as a key concept. For engineers building RAG systems, this is often the most powerful choice, as it gracefully handles the nuances of human language.
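The selection step can be sketched independently of any particular model. It embeds the chunk, embeds each candidate, and keeps the candidates with the highest cosine similarity. The `toy_embed` function below is a hypothetical stand-in for a real sentence encoder such as a BERT-based model. It uses character trigrams, which capture surface overlap but not meaning, so swap in a real encoder for actual use. Note also that a concept that never appears verbatim, such as "thermal management", can only be returned if it is among the candidates you score. For that reason, `rank_candidates` accepts any candidate list, for example terms drawn from a domain vocabulary.

```python
import math
from collections import Counter
from typing import Callable, Dict, List, Tuple

Vector = Dict[str, float]


def toy_embed(text: str) -> Vector:
    # HYPOTHETICAL stand-in for a real sentence encoder: a sparse bag of
    # character trigrams. It captures surface overlap only, not meaning.
    padded = f"  {text.lower()}  "
    counts = Counter(padded[i : i + 3] for i in range(len(padded) - 2))
    return {gram: float(n) for gram, n in counts.items()}


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(value * b.get(key, 0.0) for key, value in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rank_candidates(doc: str, candidates: List[str],
                    embed: Callable[[str], Vector] = toy_embed,
                    top_n: int = 3) -> List[Tuple[str, float]]:
    """Score each candidate by cosine similarity to the document embedding."""
    doc_vec = embed(doc)
    scored = [(c, cosine(embed(c), doc_vec)) for c in candidates]
    scored.sort(key=lambda pair: (-pair[1], pair[0]))
    return scored[:top_n]


doc = "The CPU is overheating and the fan noise is loud under load."
print(rank_candidates(doc, ["cpu overheating", "fan noise", "quarterly revenue"], top_n=2))
```

The `embed` parameter is the design choice that matters here. Any function that maps text to a vector can be plugged in, and the ranking logic stays the same.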

Supervised Models: For Hyper-Specific Needs

For highly specialized domains, the most precise approach is to build a supervised model. This involves creating a custom dataset where you manually tag the correct keywords for a sample of your documents. You then use this data to train a model (such as a fine-tuned version of BERT) to recognize keywords based on the patterns it learned from your examples.

While labor-intensive, this method delivers unparalleled precision for niche applications. For instance, a legal RAG system could be trained to only identify legal doctrines, case names, and statutes, ignoring all other terms. It is the ultimate solution when off-the-shelf methods are too noisy and fail to capture critical, domain-specific terminology.
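A common way to frame supervised keyword extraction is as token classification, in the same style as named-entity recognition. Each token is labeled as beginning a keyphrase, inside one, or outside. The sketch below turns manually tagged keyphrases into such training labels. The `bio_labels` helper, the `B-KEY`/`I-KEY` label names, and the regex tokenizer are illustrative assumptions. A real BERT fine-tune would also need to align these word-level labels to the model's subword tokens.

```python
import re
from typing import List, Tuple

TOKEN_RE = re.compile(r"\w+(?:[-.]\w+)*")


def bio_labels(text: str, keyphrases: List[str]) -> List[Tuple[str, str]]:
    """Tag each token as B-KEY (keyphrase start), I-KEY (inside) or O (outside)."""
    tokens = TOKEN_RE.findall(text.lower())
    labels = ["O"] * len(tokens)
    for phrase in keyphrases:
        target = TOKEN_RE.findall(phrase.lower())
        n = len(target)
        if n == 0:
            continue
        for i in range(len(tokens) - n + 1):
            # Label every occurrence that does not overlap an earlier label
            if tokens[i : i + n] == target and all(l == "O" for l in labels[i : i + n]):
                labels[i] = "B-KEY"
                for j in range(i + 1, i + n):
                    labels[j] = "I-KEY"
    return list(zip(tokens, labels))


text = "Under the doctrine of promissory estoppel, see Smith v. Jones."
for token, label in bio_labels(text, ["promissory estoppel", "Smith v. Jones"]):
    print(token, label)
```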

To help you decide, here’s a quick-reference guide comparing these methods specifically for improving RAG retrieval.

Comparing Keyword Extraction Methods for RAG Systems

This table breaks down the core differences between the primary keyword extraction techniques, helping you choose the best fit based on your project's needs for complexity, speed, and contextual accuracy.

| Method | Core Principle | Best for RAG When... | Key Limitation |
|---|---|---|---|
| Statistical (TF-IDF) | Frequency and rarity | You need fast, simple extraction of specific, non-synonymous terms like error codes or product SKUs. | Lacks contextual and semantic understanding; treats synonyms as different words. |
| Graph-Based (TextRank) | Word co-occurrence and influence | You need to identify the main topics or central themes within a document chunk for broader categorization. | Can be less precise for extracting specific entities and is slower than TF-IDF. |
| Embedding-Based (BERT) | Semantic similarity | You need to capture the true meaning and context, finding conceptually related keywords even if not explicitly stated. | Computationally more expensive and requires more powerful hardware. |
| Supervised Models | Custom-trained classification | You have a highly specific domain where general models fail and need maximum precision and control over output. | Requires a significant upfront investment in data labeling and model training. |

Ultimately, choosing the right method is a classic engineering trade-off. You have to balance speed, cost, and how much contextual understanding your RAG system truly needs to perform well.

Putting Keyword Extraction into Practice

Theory is great, but actionable insights come from implementation. This is where we bridge the gap between understanding keyword extraction and using it to improve your Retrieval-Augmented Generation (RAG) pipeline. We'll walk through the practical steps that turn raw text into a powerful, searchable asset that directly enhances retrieval accuracy.

Let's ground this in a real-world project: building a Q&A system for a massive library of product documentation. The goal is to allow users to ask hyper-specific questions and get the exact answer. For this to work, your RAG system needs to zero in on the right document chunk. Keyword metadata is the key to enabling this precision.

Setting Up a Practical Workflow

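First, you need content. Any text processing workflow begins with acquiring and cleaning your data. If you need to pull information from websites, this developer's guide to data extraction is a fantastic resource. Once you have your text, basic preprocessing, such as normalizing whitespace, lowercasing, and removing stray special characters, ensures consistency. Be careful not to strip the punctuation inside identifiers like "XT-500" or "v3.2", since those are often the most valuable keywords of all.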
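A conservative cleaning pass might look like the sketch below. The `clean_text` helper and its character whitelist are assumptions. It lowercases text and removes special characters, but deliberately keeps `-`, `.` and `/` so that identifiers such as "XT-500", "v3.2" or "79-B" survive intact.

```python
import re
import unicodedata


def clean_text(text: str) -> str:
    """Light normalization that keeps identifiers like 'XT-500' or 'v3.2' intact."""
    # Fold Unicode variants (non-breaking spaces, full-width characters) to canonical forms
    text = unicodedata.normalize("NFKC", text)
    # Drop leftover HTML tags from scraped pages
    text = re.sub(r"<[^>]+>", " ", text)
    text = text.lower()
    # Remove special characters, but keep word characters, whitespace and the
    # punctuation that commonly appears inside codes and versions: - . /
    text = re.sub(r"[^\w\s\-./]", " ", text)
    # Collapse runs of whitespace (newlines, tabs) into single spaces
    return re.sub(r"\s+", " ", text).strip()


print(clean_text("The <b>XT-500</b> needs firmware v3.2!\n\nSee error 79-B."))
```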

Next, choose your tools. For this walkthrough, we'll use Python and keybert, a popular library that leverages BERT embeddings to find semantically relevant keywords. This approach provides the contextual awareness of modern embedding methods without the complexity of training a custom model.

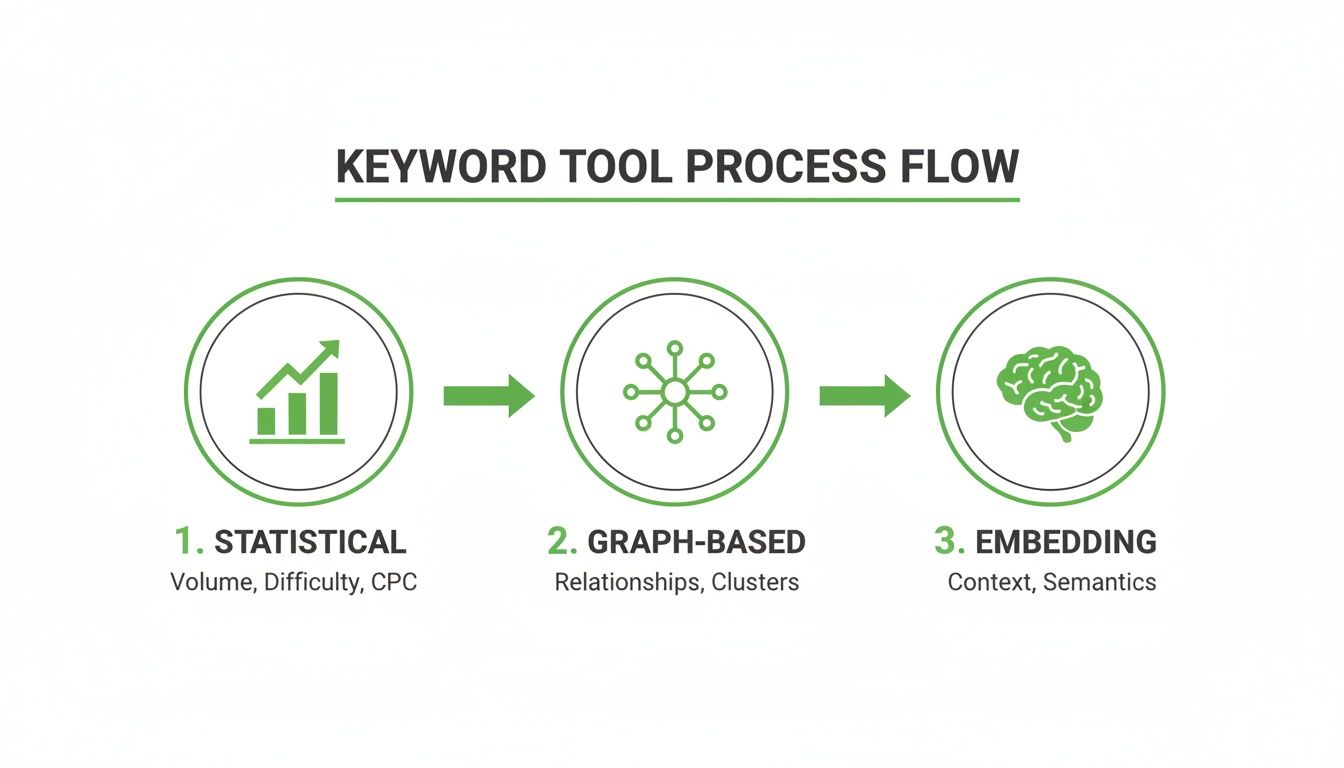

Each extraction method offers a different level of sophistication. This flow shows how they build on one another, from simple counts to deep semantic understanding, directly impacting retrieval capabilities.

This progression from basic statistical methods to advanced, AI-driven techniques allows you to build increasingly sophisticated retrieval systems that understand the true context of your documents.

From Text to Keywords with Code

Let's use a sample chunk of text from our hypothetical product documentation:

"The XT-500 model requires a full system recalibration after firmware update v3.2. Failure to do so may result in error code 79-B, indicating a peripheral mismatch. Ensure all connected devices are powered down before initiating the recalibration sequence to avoid data loss."

Using keybert is straightforward. Initialize the model and pass your text to it.

```python
from keybert import KeyBERT

# Initialize the KeyBERT model
kw_model = KeyBERT()

# Our example text chunk
doc_chunk = """
The XT-500 model requires a full system recalibration after firmware update v3.2.
Failure to do so may result in error code 79-B, indicating a peripheral mismatch.
Ensure all connected devices are powered down before initiating the recalibration
sequence to avoid data loss.
"""

# Extract keywords with relevance scores
keywords = kw_model.extract_keywords(
    doc_chunk,
    keyphrase_ngram_range=(1, 3),
    stop_words="english",
    top_n=5,
)

print(keywords)
```

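This script prints a list of (keyphrase, score) tuples. Each score is the cosine similarity between the keyphrase's embedding and the chunk's embedding, so higher values mean the phrase better represents the chunk's core concepts.

The output will look something like this. Exact phrases and scores vary with the embedding model, and the default tokenizer splits identifiers on punctuation, so check how codes like "XT-500" and "79-B" come through before relying on them as filters: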

[('xt 500 model', 0.65), ('firmware update v3 2', 0.62), ('error code 79 b', 0.58), ('system recalibration', 0.55), ('peripheral mismatch', 0.51)]

This structured data is precisely what's needed for the next crucial step: enabling filtered search.

Attaching Keywords as Metadata in a Vector Database

Simply having a list of keywords isn't enough. The actionable insight is to attach them to your document chunks as metadata inside a vector database like Pinecone, Weaviate, or ChromaDB. This is what unlocks powerful filtered queries. When you embed and store a chunk, you include a metadata field containing these keywords.

The object you store in your vector DB should look like this:

- Vector Embedding: [0.12, -0.45, 0.88, ...] (the text's semantic signature)

- Content: "The XT-500 model requires..." (the original text itself)

- Metadata: {"keywords": ["xt 500 model", "firmware update v3 2", "error code 79 b"]}
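The (keyphrase, score) tuples returned by KeyBERT can be turned into such a record with a small helper. The field names (`id`, `embedding`, `document`, `metadata`), the 0.55 score threshold, and the cap of seven keywords are illustrative assumptions. Each vector database has its own upsert format, and some restrict which types a metadata value may hold, so check how yours stores lists.

```python
from typing import Dict, List, Tuple


def build_record(chunk_id: str, text: str, embedding: List[float],
                 keywords: List[Tuple[str, float]],
                 min_score: float = 0.55, max_keywords: int = 7) -> Dict:
    """Assemble a vector-DB record whose metadata holds the filtered keywords."""
    # Keep the highest-scoring phrases at or above the threshold, capped at max_keywords
    ranked = sorted(keywords, key=lambda kv: kv[1], reverse=True)
    kept = [phrase for phrase, score in ranked if score >= min_score][:max_keywords]
    return {
        "id": chunk_id,
        "embedding": embedding,
        "document": text,
        "metadata": {"keywords": kept},
    }


keywords = [("xt 500 model", 0.65), ("firmware update v3 2", 0.62),
            ("error code 79 b", 0.58), ("system recalibration", 0.55),
            ("peripheral mismatch", 0.51)]
record = build_record("xt500-recal-001", "The XT-500 model requires...",
                      [0.12, -0.45, 0.88], keywords)
print(record["metadata"])
```

The threshold drops weak phrases such as "peripheral mismatch" here. Tune it together with the cap, because both decide how much filtering signal ends up in the metadata.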

The magic happens when you combine semantic search with this metadata filtering. A user's query is no longer a simple vector search. It becomes a precise, two-stage process that can sharply improve accuracy.

With this setup, your RAG system's retrieval logic becomes significantly more powerful. Instead of running only a broad semantic search, it first identifies a specific keyword in the user's query (like "error code 79 b"). It then filters the entire database down to chunks whose metadata contains that keyword, and runs the semantic search only on that much smaller, highly relevant subset. This is how you reliably find the needle in the haystack, and it directly improves your RAG system's performance.
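Production systems push both stages into the vector database's own filter syntax. The in-memory sketch below only shows the logic. The helpers `normalize`, `detect_keywords` and `filtered_search`, the record layout, and the choice to detect query keywords by matching against a known keyword vocabulary are all assumptions for illustration.

```python
import math
import re
from typing import Dict, Iterable, List


def normalize(text: str) -> str:
    # Lowercase and split on punctuation so "79-B" in a query matches the
    # stored keyword "79 b"
    return " ".join(re.findall(r"[a-z0-9]+", text.lower()))


def detect_keywords(query: str, vocabulary: Iterable[str]) -> List[str]:
    """Return the known keywords that occur as whole phrases in the query."""
    padded = f" {normalize(query)} "
    return sorted(kw for kw in vocabulary if f" {kw} " in padded)


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def filtered_search(records: List[Dict], query_vec: List[float],
                    required_keyword: str, top_k: int = 3) -> List[str]:
    # Stage 1: metadata filter, keep only chunks tagged with the keyword
    candidates = [r for r in records if required_keyword in r["metadata"]["keywords"]]
    # Stage 2: semantic ranking of the much smaller candidate set
    candidates.sort(key=lambda r: cosine(query_vec, r["embedding"]), reverse=True)
    return [r["id"] for r in candidates[:top_k]]


records = [
    {"id": "a", "embedding": [1.0, 0.0], "metadata": {"keywords": ["error code 79 b", "xt 500 model"]}},
    {"id": "b", "embedding": [0.9, 0.1], "metadata": {"keywords": ["system errors"]}},
    {"id": "c", "embedding": [0.0, 1.0], "metadata": {"keywords": ["error code 79 b"]}},
]
vocab = {"error code 79 b", "xt 500 model", "system errors"}
print(detect_keywords("What does error code 79-B mean?", vocab))
print(filtered_search(records, [1.0, 0.0], "error code 79 b"))
```

Chunk "b" is semantically close to the query vector, but it never reaches the ranking stage because it lacks the keyword. When no known keyword is detected in a query, falling back to plain semantic search is a reasonable default.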

This technique is becoming a best practice for building modern RAG systems. Tools like ChunkForge are built on this principle, enriching chunks with precise keywords to supercharge retrieval pipelines. The broader demand is visible in the market too: the global text analytics market, which powers such capabilities, is projected to grow from USD 14.9 billion in 2025 to USD 92.4 billion by 2035, a CAGR of 20.0%.

This metadata-driven approach can be extended further. For more advanced filtering, consider using Named Entity Recognition in NLP to extract specific entities like product names, dates, or locations.

From Good Keywords to Great Keywords

<iframe width="100%" style="aspect-ratio: 16 / 9;" src="https://www.youtube.com/embed/nvEZo_P_p1Q" frameborder="0" allow="autoplay; encrypted-media" allowfullscreen></iframe>

Extracting an initial list of keywords is just the beginning. The actionable work is in refining that list to separate high-value signals from low-quality noise. This refinement process is what transforms a decent RAG system into a production-grade one that retrieves with high precision.

A raw keyword output is often cluttered with common but useless terms. For example, a document about software installation might yield keywords like "process," "system," and "file." While technically correct, they are too generic to be useful for filtering. Your first task is to identify and eliminate this type of noise.

Evaluating Your Initial Keyword Output

Before tuning, you need to understand what's working and what isn't. Start by combining human review with simple metrics. Grab a random sample of your document chunks and critically examine the keywords your model generated.

Ask these key questions to assess retrieval potential:

- Are they too broad? A keyword like "feature" is useless for filtering; "asynchronous API call" is highly effective.

- Are they too narrow? A term that appears only once in your entire dataset is unlikely to be searched for.

- Is there contextual noise? Are common domain-specific words that offer no filtering value, like "report" or "data" in business intelligence documents, slipping through?
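The first two questions can be partly automated by counting how many chunks carry each keyword. The sketch below assumes each chunk's keywords are available as a list of strings. The `audit_keywords` name and the 30% "too broad" threshold are hypothetical. Treat its output as a list to review, not to delete automatically. A keyword that appears in only one chunk, such as a rare error code, may be exactly the filter a user needs.

```python
from collections import Counter
from typing import List, Tuple


def audit_keywords(chunk_keywords: List[List[str]],
                   broad_ratio: float = 0.3) -> Tuple[List[str], List[str]]:
    """Flag keywords that look too broad or too narrow across a corpus."""
    n_chunks = len(chunk_keywords)
    # Count how many chunks carry each keyword (duplicates within a chunk count once)
    df = Counter(kw for kws in chunk_keywords for kw in set(kws))
    too_broad = sorted(kw for kw, c in df.items() if c / n_chunks > broad_ratio)
    too_narrow = sorted(kw for kw, c in df.items() if c == 1)
    return too_broad, too_narrow


chunks = [
    ["system", "asynchronous api call"],
    ["system", "asynchronous api call"],
    ["system", "rate limit"],
    ["file", "rate limit"],
]
print(audit_keywords(chunks, broad_ratio=0.5))
```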

This manual spot-check is invaluable for quickly identifying patterns of error. You might notice your model consistently extracts "customer support team" when the critical keyword is the specific product name being discussed.

For LLM engineers, this refinement directly impacts retrieval effectiveness. Keyword-enriched chunks can boost Retrieval-Augmented Generation (RAG) relevance by 25-35%, enabling the precise filtering needed in production. Tools like ChunkForge simplify the process with real-time previews and JSON schemas. The same demand for specialized semantics shows up in the 17.95% CAGR of vertical search engines.

Actionable Tuning Techniques for Better Keywords

Once you identify weaknesses, apply targeted tuning techniques to systematically improve keyword quality and, by extension, RAG retrieval.

A great place to start is creating a custom stop word list. Standard lists remove words like "the" and "is" but are domain-agnostic. If you're working with medical documents, terms like "patient," "study," and "result" might appear frequently but add little filtering value. Adding these to a custom list forces your model to focus on more descriptive terms like "myocardial infarction" or "pharmacokinetic analysis."
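With KeyBERT, the custom list can be passed directly as `stop_words`, for example scikit-learn's `ENGLISH_STOP_WORDS` combined with your domain terms. Passing it there means the domain terms never become candidates. For output from any other extractor, a post-filter works too. The sketch below uses a small base list and a hypothetical medical domain list. It trims stop words from the edges of each phrase and drops phrases made only of stop words.

```python
from typing import Iterable, List, Set

BASE_STOP_WORDS = {"the", "a", "an", "and", "of", "in", "to", "for", "with"}
# Hypothetical domain list for medical documents
DOMAIN_STOP_WORDS = {"patient", "patients", "study", "result", "results"}
STOP_WORDS = BASE_STOP_WORDS | DOMAIN_STOP_WORDS


def filter_keyphrases(keyphrases: Iterable[str], stop_words: Set[str]) -> List[str]:
    kept = []
    for phrase in keyphrases:
        tokens = phrase.lower().split()
        # Trim stop words from both edges: "analysis of patient" -> "analysis"
        while tokens and tokens[0] in stop_words:
            tokens.pop(0)
        while tokens and tokens[-1] in stop_words:
            tokens.pop()
        # Phrases made entirely of stop words disappear
        if tokens:
            kept.append(" ".join(tokens))
    # De-duplicate while preserving order
    return list(dict.fromkeys(kept))


print(filter_keyphrases(
    ["patient study", "myocardial infarction", "study results",
     "pharmacokinetic analysis of patient"],
    STOP_WORDS,
))
```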

Another powerful lever is adjusting your model's parameters. If using a tool like keybert, the n-gram range is critical. Setting it to (1, 3) allows the model to extract single words, two-word phrases, and three-word phrases. This is essential for capturing multi-word concepts like "API rate limit" or "asynchronous data transfer," which are far more valuable for retrieval than "API" or "data" alone.
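To see why the range matters, here is a sketch of how candidate phrases are enumerated from a token sequence. It mirrors what an n-gram vectorizer does conceptually. KeyBERT relies on scikit-learn's `CountVectorizer` by default, which also applies its own tokenization and removes stop words before building n-grams. The `candidate_ngrams` helper is hypothetical.

```python
from typing import List, Tuple


def candidate_ngrams(tokens: List[str], ngram_range: Tuple[int, int] = (1, 3)) -> List[str]:
    """Enumerate every contiguous n-gram with n between the range bounds, inclusive."""
    low, high = ngram_range
    return [
        " ".join(tokens[i : i + n])
        for n in range(low, high + 1)
        for i in range(len(tokens) - n + 1)
    ]


print(candidate_ngrams(["api", "rate", "limit"], (1, 1)))  # → ['api', 'rate', 'limit']
print(candidate_ngrams(["api", "rate", "limit"], (1, 3)))
# → ['api', 'rate', 'limit', 'api rate', 'rate limit', 'api rate limit']
```

With `(1, 1)`, the phrase "api rate limit" can never be a candidate, no matter how good the scoring model is.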

Fine-tuning isn't a one-time fix. It’s an iterative cycle of extraction, evaluation, and adjustment. Each small tweak you make compounds, leading to massive gains in retrieval accuracy and overall RAG performance.

Finally, you can use LLMs to refine your keywords. After an initial extraction, feed the generated keywords into a powerful model with a prompt like: "Given these keywords from a technical document, remove generic terms and consolidate synonyms. Output a clean, de-duplicated list of the most specific concepts."
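The sketch below shows the plumbing around such a prompt. It builds the request and parses the reply defensively. The model call itself is left out because it depends on your provider. The helper names are hypothetical, and asking for a JSON array is an added assumption that makes the reply machine-readable. If the reply is malformed, the function falls back to the original list rather than losing keywords.

```python
import json
from typing import List

# The prompt from the text, with one added instruction (an assumption) asking
# for JSON so the response can be parsed reliably
REFINE_PROMPT = (
    "Given these keywords from a technical document, remove generic terms and "
    "consolidate synonyms. Output a clean, de-duplicated list of the most specific "
    "concepts as a JSON array of strings.\n\nKeywords: {keywords}"
)


def build_refine_prompt(keywords: List[str]) -> str:
    return REFINE_PROMPT.format(keywords=json.dumps(keywords))


def parse_refined(response: str, original: List[str]) -> List[str]:
    """Parse the model's JSON reply, falling back to the original list if malformed."""
    try:
        data = json.loads(response)
    except json.JSONDecodeError:
        return original
    if not isinstance(data, list) or not all(isinstance(x, str) for x in data):
        return original
    # Normalize case and drop duplicates the model may have left in
    return list(dict.fromkeys(x.strip().lower() for x in data if x.strip()))


prompt = build_refine_prompt(["process", "API rate limit", "api rate-limit"])
# response = call_your_llm(prompt)  # hypothetical provider call, not shown
print(parse_refined('["API rate limit", "api rate limit"]', ["process"]))
```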

This adds a layer of semantic cleanup that parameter tuning alone can miss. The goal is to get beyond simple keyword counting. To really master this, it helps to understand modern strategies for keyword density; for instance, knowing how many keywords per page to target for SEO is a good starting point for current best practices. We also cover the core concepts behind this in our article about understanding semantics in NLP.

Automating Keyword Enrichment with ChunkForge

While scripting your own keyword extraction is possible, it can quickly become a development and maintenance bottleneck. Managing dependencies, scaling the process, and ensuring consistent output across thousands of documents requires significant engineering effort.

This is where a tool like ChunkForge provides a direct solution. It integrates keyword extraction directly into your document processing workflow, allowing you to get keywords from your text without writing code. It transforms a complex engineering task into a simple configuration step, making advanced RAG preparation accessible.

Streamlining the RAG Preprocessing Pipeline

The workflow in ChunkForge is designed for efficiency. You upload your source documents (PDFs, Markdown, etc.) and choose a chunking strategy that suits your content.

Here's the key actionable step: alongside other settings, you simply enable "Extract Keywords." ChunkForge then runs an optimized model over every chunk, extracting the most relevant terms and phrases. These keywords are automatically attached as metadata, perfectly formatted for ingestion into your vector database.

The real win here is consistency and speed. You stop worrying about deploying models or paying for API calls. Instead, you get high-quality, repeatable keyword metadata for every chunk, every single time.

This tight integration streamlines the entire RAG preprocessing pipeline, saving significant development time that can be better spent on tuning the retrieval and generation stages.

Beyond Keywords to Multi-Faceted Retrieval

The most effective RAG systems don't rely on a single metadata type. While keywords are crucial, true retrieval power comes from layering multiple enrichment points to create a comprehensive profile for each document chunk.

For applied AI teams, this has a direct impact on performance. Just adding keyword tagging can boost RAG hit rates by 30-50%. That's a critical edge, especially as the demand for smarter AI keeps growing—something reflected in the growth of multimodal search engine capabilities.

ChunkForge is designed for this layered enrichment strategy, offering a suite of tools that work in concert:

- Automated Summaries: Generate a concise summary for each chunk to give the LLM quick context.

- Keyword Extraction: Add a list of precise terms for metadata filtering.

- Custom Metadata: Apply your own typed JSON schemas with specific tags, like {"department": "engineering", "status": "approved"}.

This combination enables highly specific queries that pure semantic search cannot handle. An engineer can build a RAG system to find chunks that match a semantic query, contain the keyword "API," and are tagged with {"version": "2.1"}. This level of precision is essential for enterprise-grade AI, and automation is what makes building these systems efficient at scale.
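A combined query like that can be expressed as a metadata predicate that runs before, or alongside, semantic ranking. Vector databases each have their own filter syntax for this, such as Pinecone's `$eq`/`$in` operators or Chroma's `where` argument. The pure-Python `matches` helper below is a hypothetical stand-in that only shows the logic.

```python
from typing import Any, Dict, Iterable, Optional


def matches(metadata: Dict[str, Any], required_keywords: Iterable[str] = (),
            equals: Optional[Dict[str, Any]] = None) -> bool:
    """True if the chunk carries every required keyword and every field matches exactly."""
    if not set(required_keywords) <= set(metadata.get("keywords", [])):
        return False
    return all(metadata.get(field) == value for field, value in (equals or {}).items())


chunk_metadata = {"keywords": ["api", "rate limit"], "version": "2.1",
                  "department": "engineering"}
print(matches(chunk_metadata, ["api"], {"version": "2.1"}))
```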

Common Questions About Keywords and RAG

When you're trying to pull keywords from text for a RAG system, a few practical questions always pop up. Nailing the answers to these can be the difference between a retrieval system that feels clunky and one that just works.

How Many Keywords Should I Extract Per Chunk?

Figuring out the right number of keywords is a classic balancing act. Too few, and you're leaving valuable filtering opportunities on the table. Too many, and you flood your metadata with noise, making retrieval less precise.

There’s no magic number here, but a solid starting point is between three and seven keywords per chunk. This range usually gives you enough density to capture the core ideas without grabbing every generic term that comes along.

The best approach is to experiment. Start with five, run some test queries, and see what you get. Are you missing relevant chunks? Maybe you need more keywords. Are irrelevant chunks showing up? You might need to be more selective.

Think of keywords as surgical tools. You want just enough to make precise incisions into your data without causing a mess. Start small and only add more when you can clearly justify the need.

Is Using a Big LLM for Keyword Extraction a Good Idea?

The temptation to use a massive model like GPT-4 for keyword extraction is real. These models have an incredible grasp of context and can pull out nuanced keywords that simpler methods would definitely miss. They can even infer concepts that aren't explicitly stated in the text.

But this power comes with some serious trade-offs, especially when you're building a production RAG system.

- Cost: API calls to the big models add up fast. Processing thousands, or even millions, of document chunks can turn into a very expensive operation.

- Latency: The round-trip time for an LLM API call is painfully slow compared to running a small, optimized model locally. This can create a major bottleneck in your data processing pipeline.

For most RAG applications, a smaller, specialized embedding-based model like the ones used by KeyBERT offers a much saner balance of performance, cost, and speed. It's best to save the big LLMs for offline analysis or maybe for refining a small, high-value set of keywords.

What Are the Biggest Mistakes to Avoid?

When you first start pulling keywords for RAG, it's easy to fall into a few common traps.

The most frequent mistake I see is relying on generic, out-of-the-box stop word lists. These lists have no idea what’s important in your specific domain. They might filter out critical industry terms or fail to remove jargon that’s common but useless for retrieval in your field. Always, always create a custom stop word list that’s built for your documents.

Another huge error is failing to normalize your keywords. If you don't, terms like "system configuration," "configure system," and "configuring the system" get treated as three different keywords. A normalization step that applies stemming or lemmatization, drops stop words, and ignores word order can consolidate all those variations into a single, clean term. This makes your metadata much tidier and your filtering far more effective.
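Here is a sketch of such a normalization key. Lemmatization alone would not reconcile "configure system" with "system configuration", because the word order differs. For that reason, the key below also sorts the stems. The suffix stripper is a deliberately crude stand-in for a real stemmer or lemmatizer (for example NLTK's `PorterStemmer` or spaCy's lemmatizer). The helper names and suffix list are assumptions.

```python
import re
from collections import defaultdict
from typing import Dict, List

STOP_WORDS = {"the", "a", "an", "of", "to", "and"}
# Deliberately crude suffix list; a real pipeline would use a stemmer or lemmatizer
SUFFIXES = ("ation", "ing", "ed", "es", "e", "s")


def crude_stem(word: str) -> str:
    for suffix in SUFFIXES:
        # Only strip when a stem of at least 3 characters remains
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def normalize_keyphrase(phrase: str) -> str:
    tokens = [t for t in re.findall(r"[a-z0-9]+", phrase.lower()) if t not in STOP_WORDS]
    # Sorting the stems makes the key independent of word order
    return " ".join(sorted(crude_stem(t) for t in tokens))


def group_variants(phrases: List[str]) -> Dict[str, List[str]]:
    groups: Dict[str, List[str]] = defaultdict(list)
    for phrase in phrases:
        groups[normalize_keyphrase(phrase)].append(phrase)
    return dict(groups)


print(group_variants(["system configuration", "configure system", "configuring the system"]))
```

Use the normalized key only for grouping and filtering. Keep a readable representative, such as the most frequent variant, as the keyword you store and display.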

Ready to stop scripting and start building? ChunkForge automates keyword extraction and metadata enrichment, turning your raw documents into RAG-ready assets in minutes. Try it free and see the difference.