Mastering keywords from text: Boost RAG with smarter extraction

Learn how to extract keywords from text to power smarter RAG systems with practical insights, real-world examples, and developer-ready steps.

Extracting keywords from text is the process of identifying the most important, representative terms in a document. This creates a metadata layer that lets AI systems move beyond simple text matching, improving search precision and contextual understanding, which is especially valuable in complex Retrieval-Augmented Generation (RAG) applications.

Why Keywords Are Your RAG System’s Secret Weapon

Relying solely on semantic search in a RAG system is like using a map that only shows major highways. It gets you into the general vicinity, but you'll miss the specific side streets and landmarks needed to find your exact destination.

Vector embeddings are excellent for capturing broad, contextual meaning. However, they often struggle with niche terms, product SKUs, or specific identifiers that lack rich semantic relationships. This is where extracting keywords from text as metadata becomes an actionable strategy for improving retrieval.

Overcoming Semantic Search Limitations

To make this concrete, imagine a RAG system built for a technical support knowledge base. A user asks, "My system is throwing error E404-B after the latest firmware update."

A pure semantic search might retrieve documents about "firmware updates" or "general system errors" and miss the most critical part of the query: the E404-B identifier. Embedding models rarely give a rare code like this a vector that is clearly distinct from other error codes, so the system retrieves plausible but irrelevant chunks.

By embedding keywords into your chunk metadata, you enable a powerful hybrid search. This approach combines the pinpoint accuracy of keyword matching (sparse retrieval) with the broad contextual understanding of semantic search (dense retrieval).

Actionable Insight: Implement hybrid search by storing keywords alongside vector embeddings in your vector database. This ensures your RAG system can handle both nuanced, conversational queries and highly specific, term-based searches with equal precision.

Building a More Reliable AI Application

Adding keywords as metadata gives your RAG pipeline a direct, high-speed path to the most relevant document chunks. This provides immediate, practical benefits for retrieval:

- More Accurate Context: The Large Language Model (LLM) receives chunks precisely matched to the user's query, especially when specific terms are involved. This is a direct lever for improving context quality.

- Better LLM Responses: With higher-quality context, the LLM can generate answers that are far more accurate, relevant, and genuinely helpful.

- Increased Reliability: The system becomes less prone to "hallucinations" or giving vague answers when faced with specific, technical questions.

The need for this level of precision is driving significant market growth. The text analytics market is projected to hit USD 41.86 billion by 2030, a surge driven by the demand to extract useful data from unstructured text. This growth underscores how critical effective keyword extraction is for building high-performing AI.

Getting Your Text Ready for Keyword Extraction

The quality of the keywords you extract is a direct reflection of the quality of the text you start with. Feeding a model messy, raw text will result in noisy, irrelevant keywords. It’s a classic "garbage in, garbage out" problem that can directly sabotage your RAG system's retrieval performance.

Building a smart preprocessing pipeline is the first and most critical action you can take to get meaningful results.

This process is more than just basic cleaning; it's about strategically shaping your content to make important signals stand out. To do this well, it helps to be familiar with various qualitative data analysis methods, which focus on extracting meaningful insights from unstructured text.

Going Beyond Basic Stop Word Removal

Standard practice involves removing common stop words like "the," "is," and "a." While a decent starting point, a generic, one-size-fits-all list can easily backfire and harm retrieval.

Context is everything. In a legal document, the word "not" might be on a standard stop word list, but its removal could completely invert the meaning of a sentence. Similarly, in product reviews, words like "up," "down," "on," or "off" are crucial components of phrases like "shut down" or "turn on."

Actionable Insight: Don't use a generic stop word list. Start with a standard list, then customize it by removing words that hold specific meaning in your domain. This simple step yields significant improvements in keyword quality and retrieval relevance.
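As a minimal sketch of that customization, the snippet below starts from a small generic list and subtracts the words that matter in the domain. The word lists and the `remove_stop_words` helper are illustrative assumptions, not taken from any particular library; in practice you would start from the list your NLP library ships.

```python
import re

# A tiny generic stop word list, standing in for a library-provided one.
GENERIC_STOP_WORDS = {"the", "is", "a", "an", "to", "of", "and", "not", "on", "off", "up", "down"}

# Words that carry meaning in this (hypothetical) domain and must survive filtering.
DOMAIN_KEEP = {"not", "on", "off", "up", "down"}

STOP_WORDS = GENERIC_STOP_WORDS - DOMAIN_KEEP


def remove_stop_words(text: str, stop_words: set[str] = STOP_WORDS) -> list[str]:
    """Lowercase the text, split it into alphanumeric tokens, and drop stop words."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return [t for t in tokens if t not in stop_words]


sentence = "The device is not able to shut down"
print(remove_stop_words(sentence, GENERIC_STOP_WORDS))  # ['device', 'able', 'shut']
print(remove_stop_words(sentence))  # ['device', 'not', 'able', 'shut', 'down']
```

With the generic list, both the negation and the phrasal verb "shut down" are lost; the customized list keeps them.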

Stemming vs. Lemmatization: Which One Should You Choose?

After managing stop words, the next step is to normalize different word forms to their root. The two main techniques are stemming and lemmatization, and your choice has a direct impact on retrieval quality.

- Stemming: A crude, rule-based process that chops off word endings. For example, "running" and "runs" are both reduced to "run," but an irregular form like "ran" is left untouched because no suffix rule applies. It's fast but can produce non-words (e.g., "studies" becomes "studi").

- Lemmatization: A more sophisticated, dictionary-based approach. It uses morphological analysis to return a word to its base form, or lemma. For instance, it maps "ran" to "run" and, given the right part-of-speech tag, identifies that the lemma for "better" is "good."

For most RAG applications where accuracy is paramount, lemmatization is the clear choice. The minor performance cost is a small price to pay for contextually correct base words, which leads directly to cleaner and more relevant keywords from text.
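To make the difference tangible, here is a deliberately toy comparison. `crude_stem` is a simplified suffix stripper and `LEMMAS` is a four-entry dictionary; both are assumptions made for illustration and are far smaller than what a real stemmer or lemmatizer uses.

```python
def crude_stem(word: str) -> str:
    """Toy stemmer: strip the first matching suffix if at least 3 letters remain."""
    for suffix in ("ing", "es", "ed", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


# Toy lemma dictionary; a real lemmatizer ships a much larger one plus part-of-speech rules.
LEMMAS = {"studies": "study", "running": "run", "ran": "run", "better": "good"}


def lemmatize(word: str) -> str:
    """Look the word up in the lemma dictionary; unknown words pass through unchanged."""
    return LEMMAS.get(word, word)


for word in ["studies", "running", "ran", "better"]:
    print(f"{word:8} stem={crude_stem(word):8} lemma={lemmatize(word)}")
```

The stemmer yields "studi" and "runn" and leaves "ran" and "better" alone, while the dictionary lookup returns real words in every case. That gap is what you pay the extra processing time for.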

As you prepare your documents, our guide on understanding semantic chunking offers more strategies for preserving context. Implementing high-quality lemmatization with a library like spaCy is a straightforward action that primes your text for optimal keyword extraction.

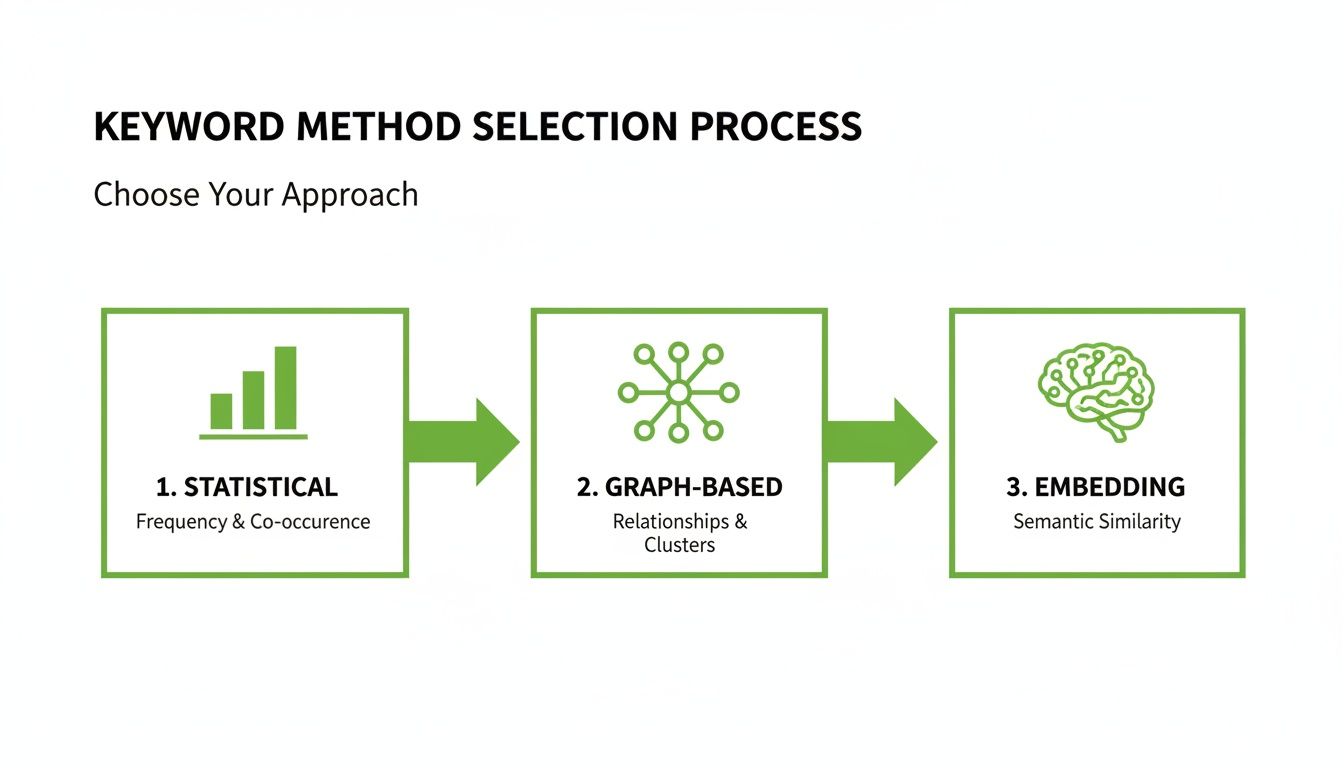

Choosing the Right Keyword Extraction Method

Selecting the right method to extract keywords from text is a strategic decision that directly impacts your RAG system's performance. The best method depends on your specific documents, performance requirements, and desired retrieval precision.

Understanding the trade-offs is key. A simple statistical model can process millions of documents quickly but may miss the subtle meanings that an embedding-based method would capture.

Demand for this capability is broad. The keyword research tools market was valued at around $2.5 billion in 2025 and is expected to grow at a 15% compound annual growth rate through 2033, a trend that reflects the need for effective keyword extraction in search and AI applications.

Statistical and Graph-Based Approaches

Statistical methods like TF-IDF (Term Frequency-Inverse Document Frequency) are the traditional workhorses of keyword extraction. They operate on a simple principle: words that appear frequently in one document but rarely in others are likely important.

- Pros: Extremely fast, computationally cheap, and easy to implement.

- Cons: Lack any semantic understanding; "AI model" and "machine learning algorithm" are treated as completely different terms.
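The TF-IDF principle fits in a few lines of standard-library Python. This is a sketch using one common weighting (relative term frequency times the natural log of inverse document frequency); libraries apply different smoothing, so exact scores will differ. The `tfidf_keywords` helper and the sample documents are illustrative.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def tfidf_keywords(docs: list[str], doc_index: int, top_k: int = 3) -> list[str]:
    """Rank the terms of one document by TF-IDF against the whole corpus."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    # Document frequency: in how many documents does each term occur?
    df = Counter(term for tokens in tokenized for term in set(tokens))
    tf = Counter(tokenized[doc_index])
    total = len(tokenized[doc_index])
    scores = {
        term: (count / total) * math.log(n_docs / df[term])
        for term, count in tf.items()
    }
    # Highest score first; ties are broken alphabetically so output is deterministic.
    return sorted(scores, key=lambda t: (-scores[t], t))[:top_k]


docs = [
    "firmware update failed with error e404 after the firmware update",
    "the battery drains fast after the update",
    "the screen flickers after the battery swap",
]
print(tfidf_keywords(docs, 0, top_k=2))  # ['firmware', 'e404']
```

Words that occur in every document ("the", "after") score zero, which is how TF-IDF suppresses common terms without a stop word list.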

Graph-based methods like TextRank, inspired by Google's PageRank, are a step up. TextRank models a document as a graph where words are nodes connected by co-occurrence. This allows it to identify words central to the document's structure and even extract important multi-word phrases.
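A stripped-down version of the idea looks like this. It is a sketch, not the full TextRank algorithm: it assumes stop words were already removed, skips part-of-speech filtering, and does not merge adjacent keywords into phrases. The function name and parameters are illustrative.

```python
import re
from collections import defaultdict


def textrank_keywords(text, window=2, damping=0.85, iterations=30, top_k=3):
    """Score words with PageRank over an undirected co-occurrence graph."""
    words = re.findall(r"[a-z]+", text.lower())
    # Link every word to the words that follow it within `window` positions.
    neighbors = defaultdict(set)
    for i, word in enumerate(words):
        for other in words[i + 1 : i + 1 + window]:
            if other != word:
                neighbors[word].add(other)
                neighbors[other].add(word)
    scores = {w: 1.0 for w in neighbors}
    for _ in range(iterations):
        # Each word receives an equal share of every neighbor's current score.
        scores = {
            w: (1 - damping)
            + damping * sum(scores[n] / len(neighbors[n]) for n in neighbors[w])
            for w in neighbors
        }
    return sorted(scores, key=lambda w: (-scores[w], w))[:top_k]


text = "keyword extraction keyword search keyword metadata keyword ranking"
print(textrank_keywords(text, top_k=1))  # ['keyword']
```

The word that co-occurs with the most other words ends up with the highest score, which is the "centrality" the method relies on.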

Actionable Insight: Use TF-IDF or TextRank when you need high-speed processing and a solid baseline for keyword metadata. They are ideal for initial filtering or in domains with consistent, unambiguous language.

Leveraging Semantic Meaning with Embeddings

This is where modern RAG pipelines gain a significant advantage. Embedding-based methods like KeyBERT use sophisticated sentence transformers to understand the meaning behind words. Instead of counting frequency, they identify phrases that are semantically closest to the core concepts of the entire document.

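This approach helps retrieval because it ranks phrases by how well they represent the document's meaning rather than by how often they occur, so a phrase that appears only once can still surface as a keyword. Note that KeyBERT selects its candidates from the document's own wording; matching queries that use synonyms or related concepts remains the job of the dense retrieval side of a hybrid search.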

KeyBERT operates by embedding candidate keyword phrases and comparing each one to the document's overall embedding. The phrases with the highest cosine similarity are selected, so the keywords are not just frequent but central to the document's message.
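The ranking step can be sketched without any model at all. The code below is not KeyBERT's implementation: `toy_embed` is a bag-of-words stand-in for a sentence-transformer, used only so the example runs with the standard library. In a real pipeline you would pass your own embedding function as `embed`.

```python
import math
import re
from collections import Counter
from typing import Callable

Vector = dict[str, float]


def toy_embed(text: str) -> Vector:
    """Stand-in for a sentence-transformer: a bag-of-words count vector."""
    return dict(Counter(re.findall(r"[a-z0-9]+", text.lower())))


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(a[k] * b.get(k, 0.0) for k in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def rank_candidates(
    doc: str,
    candidates: list[str],
    embed: Callable[[str], Vector] = toy_embed,
    top_k: int = 2,
) -> list[str]:
    """Return the candidates whose embeddings are closest to the document embedding."""
    doc_vec = embed(doc)
    scored = [(cosine(embed(c), doc_vec), c) for c in candidates]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [c for _, c in scored[:top_k]]


doc = "hybrid search combines keyword search with vector search for better retrieval"
candidates = ["hybrid search", "vector retrieval", "pizza recipe"]
print(rank_candidates(doc, candidates))  # ['hybrid search', 'vector retrieval']
```

Swapping `toy_embed` for a real embedding model changes the scores but not the structure: embed the document once, embed each candidate, keep the closest ones.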

High-Precision Supervised Methods

For scenarios where you need to find predefined categories of terms, such as product names, medical conditions, or company mentions, supervised methods offer the highest precision. This approach is typically framed as a Named Entity Recognition (NER) task.

You train a custom model on labeled data, teaching it to recognize specific categories of keywords. While this requires a greater upfront investment in creating annotated examples, the payoff is exceptional accuracy tailored to your domain. This is essential when a false positive could lead your RAG system to retrieve completely incorrect information.

To learn more, see our guide on Named Entity Recognition in NLP. This path provides ultimate control over the types of keywords from text you extract.

Comparison of Keyword Extraction Techniques

This table provides a quick reference to help you choose the right method for your RAG system.

| Method | Core Principle | Pros | Cons | Best For RAG When... |
|---|---|---|---|---|
| Statistical (TF-IDF) | Frequency-based counting | Fast, simple, scalable | No semantic understanding, poor with synonyms | You need a quick, scalable baseline for keyword metadata to enable sparse retrieval. |
| Graph-Based (TextRank) | Identifies central words via graph relationships | Finds phrases, some context awareness | Can be slow on very large docs, still lacks deep semantics | You want to identify key topics and multi-word phrases without heavy ML models. |
| Embedding-Based (KeyBERT) | Semantic similarity to document | Understands context and synonyms | Slower, requires an embedding model | Your retrieval must match queries based on meaning, not just exact word overlap. |
| Supervised (NER) | Pattern recognition from labeled data | Extremely high precision for known categories | Requires training data, less flexible for new terms | You must extract specific, predefined entities with maximum accuracy (e.g., product SKUs, identifiers). |

Each technique serves a purpose. The key is to align the method’s strengths with your project's goals—whether that’s speed, semantic richness, or surgical precision.

Putting Keywords to Work in Your RAG Pipeline

Extracting keywords from text is only the first step; the real value is realized when you intelligently integrate them into your Retrieval-Augmented Generation (RAG) system. This is how you implement a hybrid retrieval system that leverages the best of both worlds: the pinpoint accuracy of keyword search and the deep contextual understanding of semantic search.

The core action is to enrich your document chunks with keyword metadata. Instead of only storing a vector embedding for each chunk in a database like Pinecone or ChromaDB, you also attach a list of its most important keywords. This creates a powerful dual-pathway system for retrieval that is far more resilient than one based on vectors alone.
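One way to picture the enriched chunk is as a small record that carries the text, the vector, and the keywords together. The `Chunk` class and the `id` / `vector` / `metadata` layout below are hypothetical; each vector database has its own client API and record schema, so treat this as the shape of the data, not as code for a specific store.

```python
from dataclasses import dataclass, field


@dataclass
class Chunk:
    chunk_id: str
    text: str
    embedding: list[float]
    keywords: list[str] = field(default_factory=list)

    def to_record(self) -> dict:
        """Shape the chunk as an id / vector / metadata record ready for upserting."""
        return {
            "id": self.chunk_id,
            "vector": self.embedding,
            "metadata": {"text": self.text, "keywords": self.keywords},
        }


def filter_by_keyword(chunks: list[Chunk], term: str) -> list[Chunk]:
    """Case-insensitive exact match against each chunk's keyword list."""
    term = term.lower()
    return [c for c in chunks if term in (k.lower() for k in c.keywords)]


chunks = [
    Chunk("c1", "Error E404-B appears after the firmware update.", [0.1, 0.3], ["E404-B", "firmware update"]),
    Chunk("c2", "Charge the battery fully before first use.", [0.7, 0.2], ["battery"]),
]
print([c.chunk_id for c in filter_by_keyword(chunks, "e404-b")])  # ['c1']
```

Because the keywords travel with the vector, the same record can serve an exact-match filter and a similarity search.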

Architecting a Two-Stage Retrieval Process

A common and highly effective pattern is a two-stage retrieval process. This is a practical way to balance speed and accuracy without over-complicating your architecture.

Stage 1: Sparse Retrieval (Keyword Search). Initiate the process with a fast, lightweight keyword search. When a user query arrives, use a sparse retrieval algorithm like BM25 to quickly scan the keyword metadata. This step efficiently pulls a broad list of potentially relevant document chunks, excelling at catching specific product codes, jargon, or niche terms that a pure vector search might miss.

Stage 2: Dense Retrieval (Semantic Re-ranking). Next, run a semantic search, but only on the smaller, pre-filtered list of candidates from Stage 1. Applying your dense vector search just to these documents significantly reduces computational load and raises the chance that the top result is the best contextual match. The trade-off is that a chunk missed in Stage 1 cannot be recovered in Stage 2, so keep the candidate set generous. For a deeper dive on building these systems, check out our guide on Retrieval-Augmented Generation.

Actionable Insight: Implement a two-stage retrieval funnel. Use a fast keyword search (BM25) to generate a candidate set, then use a semantic search to re-rank that set for final selection. This boosts both accuracy and efficiency.
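The funnel can be sketched end to end in plain Python. This is an illustration under simplifying assumptions: chunks are small dicts with hypothetical `id`, `keywords`, and `vector` fields, the vectors are two-dimensional toys, and BM25 uses the common Okapi formula with default-style parameters `k1=1.5` and `b=0.75`.

```python
import math
from collections import Counter


def bm25_scores(query_terms, docs_terms, k1=1.5, b=0.75):
    """Okapi BM25 score of each document (a list of terms) for the query."""
    n = len(docs_terms)
    avg_len = sum(len(d) for d in docs_terms) / n
    df = Counter(t for d in docs_terms for t in set(d))
    scores = []
    for d in docs_terms:
        tf = Counter(d)
        score = 0.0
        for t in query_terms:
            if t in tf:
                idf = math.log(1 + (n - df[t] + 0.5) / (df[t] + 0.5))
                score += idf * tf[t] * (k1 + 1) / (tf[t] + k1 * (1 - b + b * len(d) / avg_len))
        scores.append(score)
    return scores


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def two_stage_retrieve(query_terms, query_vec, chunks, candidates=2, top_k=1):
    # Stage 1: sparse retrieval over the keyword metadata.
    sparse = bm25_scores(query_terms, [c["keywords"] for c in chunks])
    shortlist = sorted(range(len(chunks)), key=lambda i: -sparse[i])[:candidates]
    # Drop chunks with no keyword overlap at all.
    shortlist = [i for i in shortlist if sparse[i] > 0]
    # Stage 2: dense re-ranking of the shortlist only.
    reranked = sorted(shortlist, key=lambda i: -cosine(query_vec, chunks[i]["vector"]))
    return [chunks[i]["id"] for i in reranked[:top_k]]


chunks = [
    {"id": "a", "keywords": ["e404-b", "firmware", "error"], "vector": [0.9, 0.1]},
    {"id": "b", "keywords": ["firmware", "update", "install"], "vector": [0.2, 0.8]},
    {"id": "c", "keywords": ["battery", "charging"], "vector": [0.1, 0.9]},
]
print(two_stage_retrieve(["e404-b", "firmware"], [1.0, 0.0], chunks))  # ['a']
```

Chunk "c" never reaches the dense stage because it shares no keywords with the query, which is the source of both the efficiency gain and the recall risk of this design.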

This flowchart provides a high-level view for selecting an initial keyword extraction method.

Each approach offers a different balance of speed and contextual awareness. Your choice should be guided by the specific needs of your RAG pipeline.

Automating Metadata Enrichment

Manually adding keywords to every document chunk is not a scalable solution for any real-world application. The key to success is automating this critical step to build a retrieval-ready knowledge base.

The need for this automation is reflected in the market. The global data extraction market was valued at USD 5.287 billion in 2024 and is expected to rocket to USD 28.48 billion by 2035. This explosive growth is driven by the increasing demand to process unstructured data, where extracting meaningful keywords directly enhances the performance of AI applications like RAG.

Platforms like ChunkForge are designed to streamline this entire workflow. You can configure a document processing pipeline to automatically run keyword extraction models on every chunk during ingestion. This ensures your knowledge base is consistently enriched with high-quality metadata from the start, setting your RAG system up for success.

Measuring the Impact of Your Keyword Strategy

<iframe width="100%" style="aspect-ratio: 16 / 9;" src="https://www.youtube.com/embed/5fp6e5nhJRk" frameborder="0" allow="autoplay; encrypted-media" allowfullscreen></iframe>

Implementing a keyword strategy is a good step, but how do you prove it improves retrieval? Without proper evaluation, you are merely guessing. This final step is crucial for demonstrating that your efforts to extract keywords from text are delivering real, measurable improvements to your RAG system's performance.

First, establish a baseline. Before deploying your keyword-enriched metadata, run a set of test queries through your existing system and carefully log the results. This provides a clear "before" state for comparison.

Building Your Golden Dataset

The most reliable way to measure impact is to create a "golden dataset"—a curated collection of representative user queries paired with the exact document chunks or answers a perfect system should retrieve.

Building this dataset requires upfront effort, but it provides a single source of truth for benchmarking any changes. A robust golden dataset should include:

- Common Queries: The typical questions that represent the bulk of user interactions.

- Edge Cases: Tricky, ambiguous queries with niche jargon that often cause retrieval failures.

- Term-Specific Searches: Queries that depend on matching an exact identifier, like a product code or error message.

With this dataset, you can run automated tests and calculate metrics like precision (how many retrieved documents were relevant?) and recall (how many of all relevant documents were found?) to get objective data on retrieval accuracy.
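Computing these two metrics over a golden dataset takes very little code. In the sketch below, the golden dataset is a dict mapping each query to the set of chunk IDs that should be retrieved, and `retrieve` is any callable returning a list of unique chunk IDs; both shapes, and the sample data, are assumptions made for illustration.

```python
def precision_recall(retrieved: list[str], relevant: set[str]) -> tuple[float, float]:
    """Precision and recall for one query; assumes `retrieved` has no duplicate IDs."""
    hits = sum(1 for chunk_id in retrieved if chunk_id in relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


def evaluate(golden: dict[str, set[str]], retrieve) -> dict[str, float]:
    """Average precision and recall across all golden queries."""
    results = [precision_recall(retrieve(query), relevant) for query, relevant in golden.items()]
    n = len(results)
    return {
        "precision": sum(p for p, _ in results) / n,
        "recall": sum(r for _, r in results) / n,
    }


golden = {
    "error E404-B after update": {"c1", "c2"},
    "how to reset password": {"c7"},
}
# Canned results standing in for a real retriever.
fake_results = {
    "error E404-B after update": ["c1", "c5"],
    "how to reset password": ["c7"],
}
print(evaluate(golden, fake_results.get))  # {'precision': 0.75, 'recall': 0.75}
```

Run the same `evaluate` call against the baseline retriever and the keyword-enriched one, and the difference between the two result dicts is your measured impact.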

Actionable Insight: Create a golden dataset to serve as a final exam for your RAG system. Use it to systematically test and prove that your keyword strategy delivers measurably better retrieval results.

Evaluating the Entire RAG Pipeline

While retrieval metrics are a great starting point, they don't capture the full picture. The ultimate goal is not just to find better chunks but to generate a better final answer from the LLM. This requires end-to-end pipeline evaluation.

Frameworks like RAGAs (Retrieval-Augmented Generation Assessment) and ARES are designed for this purpose. They analyze the complete generation process and provide scores for key quality dimensions:

- Answer Relevancy: Did the generated answer directly address the user's question?

- Context Precision: Was the context provided to the LLM useful for answering the query?

- Faithfulness: Did the final answer adhere to the facts in the retrieved context, or did it hallucinate?

By comparing these pipeline-level scores before and after implementing keywords, you can definitively demonstrate that your strategy is enhancing not just retrieval, but the overall quality and reliability of your system's output.

Common Questions About Keyword Extraction for RAG

When implementing keyword extraction in a RAG pipeline, several practical questions consistently arise. Addressing them correctly is key to building a high-performing system.

The first challenge is often determining the optimal number of keywords to extract. It's a balancing act: too many can introduce noise and degrade retrieval, while too few may not provide a sufficient signal boost.

How Many Keywords Should I Extract Per Document Chunk?

There is no single magic number, but a practical starting point is 5-10 highly relevant keywords per document chunk. The goal is to capture the core concepts and unique identifiers without cluttering the metadata.

Actionable Insight: Start with 5-10 keywords per chunk and fine-tune based on your evaluation metrics. For dense, technical content, you may need more; for simpler text, fewer may suffice. Let your golden dataset guide you to the minimum number of keywords needed to see a significant improvement in retrieval precision.

Can I Use an LLM for Keyword Extraction?

Yes, using an LLM for keyword extraction can be highly effective due to its contextual awareness. A simple prompt to a model like GPT-4 can yield high-quality keywords that often surpass those from statistical methods.

However, there is a trade-off. Using an LLM to extract keywords from text is almost always slower and more expensive than traditional models. This approach is best suited for scenarios where semantic nuance is critical and latency is not a major concern, such as during an offline indexing process.
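If you do go this route during offline indexing, most of the work is prompt construction and defensive parsing. The sketch below assumes a hypothetical `call_llm` callable that takes a prompt string and returns the model's raw text; wire it to whichever client you use. The prompt wording is only a starting point.

```python
import json
from typing import Callable


def build_prompt(chunk: str, max_keywords: int = 8) -> str:
    return (
        f"Extract up to {max_keywords} keywords or key phrases from the text below. "
        "Respond with a JSON array of strings and nothing else.\n\n"
        f"Text:\n{chunk}"
    )


def extract_keywords(chunk: str, call_llm: Callable[[str], str], max_keywords: int = 8) -> list[str]:
    """Ask the model for keywords and return a cleaned, de-duplicated list."""
    raw = call_llm(build_prompt(chunk, max_keywords))
    try:
        parsed = json.loads(raw)
    except json.JSONDecodeError:
        return []  # Unparseable output yields no keywords instead of failing ingestion.
    if not isinstance(parsed, list):
        return []
    keywords: list[str] = []
    for item in parsed:
        if not isinstance(item, str):
            continue
        keyword = item.strip().lower()
        if keyword and keyword not in keywords:
            keywords.append(keyword)
    return keywords[:max_keywords]


# A canned response standing in for a real model call.
def fake_llm(prompt: str) -> str:
    return '["Firmware", "firmware", "E404-B"]'


print(extract_keywords("Error E404-B after firmware update", fake_llm))  # ['firmware', 'e404-b']
```

Returning an empty list on malformed output keeps a single bad response from stopping a long indexing job; you may prefer to log and retry instead.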

Should Keywords Be Single Words or Multi-Word Phrases?

For optimal retrieval, you need both. A hybrid approach is almost always the right answer. Single words (unigrams) are effective for matching broad topics, but multi-word phrases (n-grams) capture specific, high-value concepts.

The phrase "hybrid search strategy" is far more descriptive than the individual words "hybrid," "search," and "strategy" found separately. Fortunately, modern extraction libraries like KeyBERT and algorithms like TextRank are designed to extract these crucial multi-word phrases. To build the most powerful retrieval system, ensure you are extracting a healthy mix of both.
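Generating a mix of single words and phrases usually starts with enumerating n-gram candidates, which an extraction method then scores. The helper below is a minimal sketch; the stop word set and the rule of discarding n-grams that begin or end with a stop word are illustrative choices.

```python
import re


def candidate_phrases(text, max_n=3, stop_words=frozenset({"a", "an", "the", "is", "of", "than"})):
    """List unique 1- to max_n-word candidates, in order of first appearance per length."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    phrases = []
    for n in range(1, max_n + 1):
        for i in range(len(tokens) - n + 1):
            gram = tokens[i : i + n]
            # Skip n-grams that start or end with a stop word.
            if gram[0] in stop_words or gram[-1] in stop_words:
                continue
            phrase = " ".join(gram)
            if phrase not in phrases:
                phrases.append(phrase)
    return phrases


print(candidate_phrases("A hybrid search strategy"))
# ['hybrid', 'search', 'strategy', 'hybrid search', 'search strategy', 'hybrid search strategy']
```

Feeding these candidates to a scorer lets the unigrams and the full phrase compete on equal terms, so the final keyword list naturally contains both.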

At ChunkForge, we take the complexity out of preparing documents for RAG. Our platform automates the entire metadata enrichment process, including keyword extraction, so you can build a perfectly optimized knowledge base with less effort. Start your free trial today and see how easy it is to create retrieval-ready chunks for your AI workflows.