A Developer's Guide to Parsing PDFs in Python for RAG

Learn how to parse PDFs in Python for RAG. This guide covers top libraries, advanced text extraction for tables and layouts, and RAG-ready data preparation.

If you're looking to parse a PDF with Python, you need to think beyond just yanking out raw text. The real goal is to preserve the document's original structure and context. This is the absolute foundation for any reliable Retrieval-Augmented Generation (RAG) system, because messy data going in means irrelevant or just plain wrong AI responses coming out.

Treating PDF parsing as a critical first step is what turns a pile of chaotic documents into a high-quality, trustworthy knowledge base for your LLM.

Why High-Quality PDF Parsing Is Crucial for RAG

When you're building a RAG system, the quality of your retrieval is only as good as the data you feed it. PDFs, which were designed for printing and visual consistency, are a notorious source of the "dirty" data that can poison your vector database. Just pulling out the text stream isn't nearly enough. In fact, doing that often destroys the very context your Large Language Model (LLM) needs to generate a decent answer.

Think about a financial report where a complex table gets flattened into a single, nonsensical paragraph of jumbled numbers and headers. Or an academic paper where crucial figure captions are completely detached from their images. When a user asks a question, the retrieval system pulls up these mangled text chunks and feeds the LLM garbage. The result? You almost always get a hallucinated or flat-out incorrect response, which completely erodes user trust.

The True Cost of Poor Parsing

This problem gets exponentially worse with the complex, real-world documents we all have to deal with. I've seen countless developers run into issues that their basic parsing scripts just couldn't handle, and it always leads to major headaches down the line.

- Multi-Column Layouts: A naive script reads straight across the page, mashing two unrelated columns into a single, incoherent mess. This breaks semantic context and leads to failed retrievals.

- Headers and Footers: Page numbers, confidentiality notices, and document titles get sprinkled into your main text, adding noise and diluting the actual meaning of your content.

- Scanned Documents: Without Optical Character Recognition (OCR), the text in scanned PDFs is completely invisible. This leaves massive, silent gaps in your knowledge base.

- Complex Tables and Charts: This is where the gold is often hidden. If you don't parse tables into a structured format like Markdown or JSON, all that valuable, organized data becomes useless for retrieval.

This is why getting PDF parsing right isn't just a technical chore—it's a strategic necessity. By investing the time to properly parse a PDF with Python, you ensure the context, structure, and relationships from the original document are kept intact. This high-fidelity data is the bedrock of any production-grade AI system that people can actually rely on. If you're new to the concept, you can learn more from our guide on what data parsing is.

The core principle is simple: clean, structured data in means accurate, trustworthy answers out. Every jumbled table or lost header you fail to correct during parsing is a potential point of failure in your RAG pipeline.

The demand for this kind of intelligent parsing is exploding. The PDF data extraction market is on track to hit $2.0 billion by 2025, largely driven by the massive data appetite of modern AI workflows. This isn't just a trend; it's a fundamental shift. Tools that can intelligently transform messy PDFs into clean, metadata-rich chunks are becoming non-negotiable for building serious enterprise AI. It’s all about turning a potential data liability into your most valuable asset.

Choosing Your Python PDF Parsing Toolkit

When you're building a Retrieval-Augmented Generation (RAG) system, the way you handle PDFs isn't just a technical detail—it's the foundation of your entire pipeline. Get it wrong, and you'll feed your AI jumbled text and broken tables, leading to inaccurate, unreliable responses. The goal isn't just to pull text out; it's to preserve the semantic integrity of the original document.

You need a tool that understands a document's layout—the difference between a paragraph, a table cell, and a page footer. This is what separates a quick-and-dirty script from a production-ready data pipeline that delivers real value.

The Contenders: A Quick Overview

For AI engineers, the field of Python PDF libraries really boils down to a handful of key players. Each has its own sweet spot when it comes to speed, accuracy, and features.

- PyPDF2 / pypdf: This is usually the first library developers try (PyPDF2 is the older, now-deprecated name; active development continues as pypdf). It’s a workhorse for basic tasks like splitting and merging PDFs or reading metadata, but it often fumbles with complex layouts and can't always figure out the correct reading order. For RAG, this is a risky choice for anything but the simplest documents.

- pdfminer.six: A much more robust option. It’s known for its deep analysis of a document's layout, right down to the coordinates of individual characters. This makes it far better at reconstructing text flow, though its API can feel a bit clunky.

- pdfplumber: Built right on top of pdfminer.six, this library wraps all that power in a much friendlier, object-oriented API. It absolutely shines at extracting tables and visual elements, making it a fantastic choice for structured data retrieval.

- PyMuPDF (Fitz): This is the speed demon of the group. As a Python binding for the high-performance MuPDF library, it's incredibly fast and remarkably accurate for extracting both text and images. It's the go-to for any performance-critical RAG pipeline.

So which one should you pick? It really depends. Are you working with simple, text-based reports, or are you tackling dense, multi-column financial statements packed with tables? Your answer will point you to the right tool. For a deeper look at these options, check out our complete guide to the best Python PDF libraries.

Performance and Accuracy: What the Data Says

Gut feelings are nice, but for a RAG system, the decision has to be driven by data. The quality of your extracted text directly impacts the quality of your vector embeddings—garbage in, garbage out. Let's look at how these libraries stack up in real-world benchmarks.

The table below summarizes findings from independent benchmarks that stress-tested these libraries for speed and accuracy. The results reveal some pretty significant performance gaps.

Python PDF Library Performance and Accuracy Comparison

| Library | Average Text Accuracy | Table Extraction Strength | Key Feature | Best For RAG Use Case |
|---|---|---|---|---|
| pypdfium2 | 97% | Strong | High accuracy and speed | Demanding RAG pipelines needing the highest fidelity. |
| PyMuPDF (Fitz) | High (Implied) | Very Strong | Unmatched speed | High-throughput systems where performance is critical. |
| pypdf | 96% | Moderate | Ease of use, basic tasks | Simple documents with minimal layout complexity. |
| pdfminer.six | 89% | Moderate | Detailed layout analysis | Reconstructing complex text flow from source. |
| pdfplumber | 75% | Weak (Ironically) | User-friendly table API | Quick prototyping, but risky for complex tables. |

The numbers don't lie. While pypdf is reliable for basic jobs, its accuracy can dip. More concerning is pdfplumber, which struggles significantly, dropping to a mere 61% accuracy on complex tables. For a RAG system that relies on structured data, that's a deal-breaker. A library like PyMuPDF or a top-performer like pypdfium2 (which hit 97% average accuracy in one study) is a much safer bet. You can dive into more of these findings on evaluating Python PDF to text libraries.

When building a RAG system, prioritize extraction accuracy over ease of use. A few extra lines of code upfront to handle a more complex library like PyMuPDF will save you from countless hours of debugging poor retrieval results caused by faulty data ingestion.
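Published benchmarks are a starting point, but it's worth measuring accuracy on your own documents too. Here's a minimal sketch of one way to do that, assuming you have a hand-checked reference transcription for a few pages. The similarity metric (difflib's ratio on whitespace-normalized text) is an assumption chosen for illustration; the benchmarks cited above don't state how their percentages were computed.

```python
from difflib import SequenceMatcher


def normalize(text: str) -> str:
    """Collapse whitespace runs so pure layout differences are not counted as errors."""
    return " ".join(text.split())


def text_accuracy(extracted: str, reference: str) -> float:
    """Return a 0.0-1.0 similarity between extracted text and a trusted reference."""
    return SequenceMatcher(None, normalize(extracted), normalize(reference)).ratio()


# Hypothetical sample strings standing in for real parser output
reference = "Revenue grew 15% in Q1. Costs fell 3%."
good = "Revenue grew 15% in Q1.\nCosts fell 3%."    # same words, different line breaks
bad = "Revenue Costs grew fell 15% 3% in Q1."       # two columns mashed together

print(f"clean extraction:   {text_accuracy(good, reference):.2f}")
print(f"jumbled extraction: {text_accuracy(bad, reference):.2f}")
```

Run each candidate library over the same handful of pages and compare the scores. Even a tiny test set like this tends to expose reading-order problems quickly.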

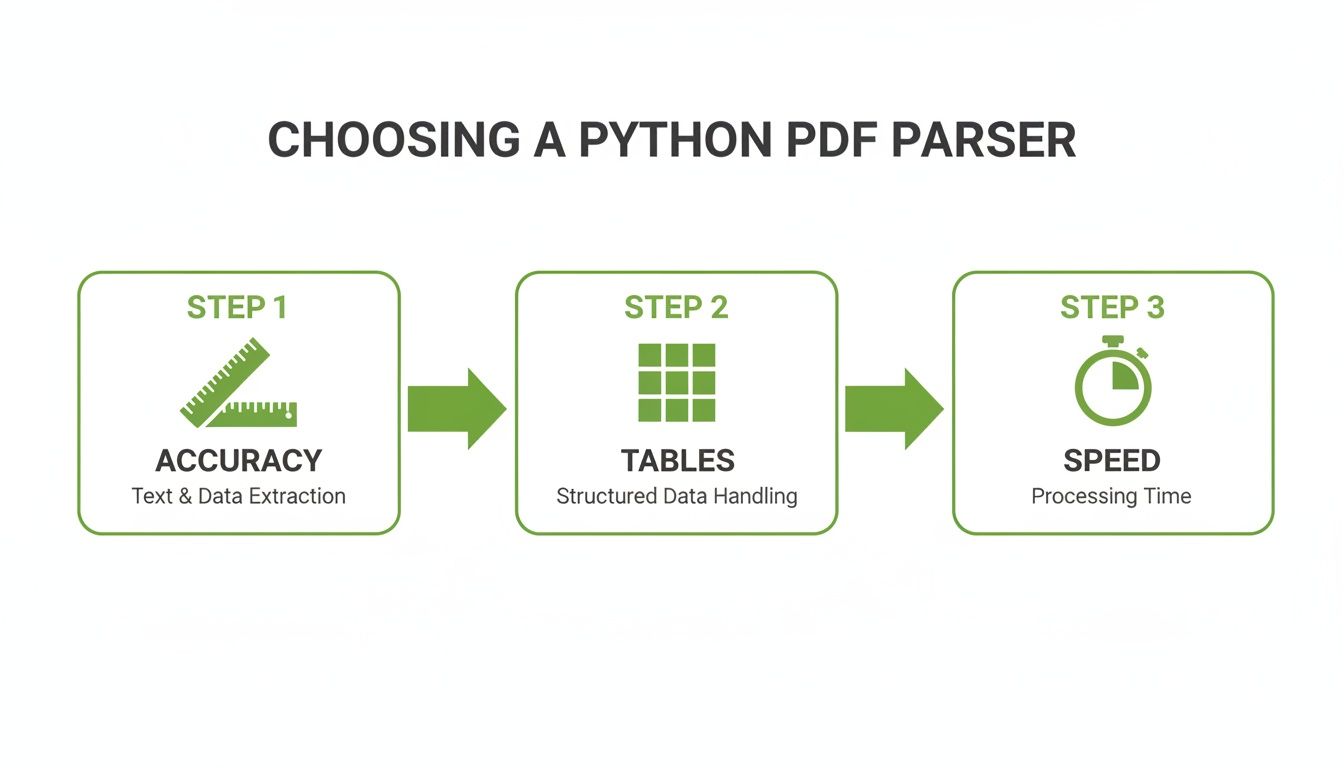

A Framework for Your Decision

So, how do you make the final call? Don't just pick the one with the most stars on GitHub. Your choice should be a direct reflection of your RAG pipeline's needs.

Ask yourself these questions:

- Document Complexity: Are my PDFs straightforward, single-column documents? Or are they filled with intricate tables, charts, and multi-column layouts? For anything complex, PyMuPDF or pdfplumber are built to handle that chaos better.

- Table Extraction Needs: Is pulling structured table data a core requirement? While pdfplumber was designed for this, PyMuPDF often proves more accurate and reliable across a wider range of documents. This is vital for RAG systems needing to answer questions about specific data points.

- Performance Requirements: Am I processing thousands of documents in a batch pipeline where every second counts? PyMuPDF is the undisputed champion of speed and should be your default choice for high-throughput systems.

- Metadata and Low-Level Access: Do I need to dig deep into the PDF to pull out fonts, object streams, or detailed metadata? Libraries like PyPDF2 and pdfminer.six give you that low-level access for more forensic tasks.

By starting with a clear picture of your project's demands, you can choose the right foundation for your toolkit and avoid the common trap of picking a library that simply can't keep up with real-world documents.

A Practical Guide to Extracting Text, Tables, and Images

Alright, let's move from theory to the real work. If you're building a RAG system, you absolutely have to get three things right when parsing PDFs: clean text, structured tables, and relevant images. A slip-up in any of these areas will leave big, ugly holes in your knowledge base.

For this walkthrough, we’re going to lean heavily on PyMuPDF (you'll see it imported as fitz). Why? Because it hits a sweet spot between speed, accuracy, and versatility. It's a solid workhorse for tackling the messy reality of real-world PDFs, from academic papers to dense financial reports.

Extracting Clean Text with Context

Just ripping raw text out of a PDF is a rookie mistake. For RAG, context is everything. You have to preserve the structural clues—the headings, paragraphs, and lists—that tell your language model how different ideas connect. This is where PyMuPDF really shines, letting you pull text out in structured blocks instead of one giant, messy blob.

Think about parsing a multi-page company policy document. A naive text dump would smash it all together into an unreadable mess. A smarter approach keeps the document's natural flow intact.

    import fitz  # PyMuPDF

    def extract_text_with_structure(pdf_path):
        doc = fitz.open(pdf_path)
        full_text = ""
        for page in doc:
            # Using "dict" is the key to preserving blocks and structure
            page_content = page.get_text("dict")
            blocks = page_content["blocks"]
            for block in blocks:
                # We only care about blocks that contain text lines
                if "lines" in block:
                    for line in block["lines"]:
                        for span in line["spans"]:
                            full_text += span["text"] + " "
                    # Add a newline after each block to simulate a paragraph break
                    full_text += "\n"
        doc.close()
        return full_text

    # Example usage
    # clean_text = extract_text_with_structure("annual_report.pdf")
    # print(clean_text)

This method is miles ahead of a simple page.get_text(). It respects how text is visually grouped on the page. Each "block" usually corresponds to a paragraph or a heading, giving you a clean, logical structure that’s perfect for semantic chunking down the line.
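One detail worth tightening: adding a space after every span can split a word when the font changes mid-word, because PyMuPDF starts a new span at each style change. Here's a small sketch of a gentler join. It works on a plain dictionary shaped like the output of page.get_text("dict"), so the sample data below is hand-written for illustration and the helper name is hypothetical.

```python
def blocks_to_paragraphs(page_dict: dict) -> list[str]:
    """Turn a get_text("dict")-style page into one string per text block."""
    paragraphs = []
    for block in page_dict.get("blocks", []):
        lines = []
        # Image blocks carry no "lines" key, so they are skipped here
        for line in block.get("lines", []):
            # Spans inside a line are joined as-is; they already carry their own spacing
            line_text = "".join(span["text"] for span in line["spans"]).strip()
            if line_text:
                lines.append(line_text)
        if lines:
            # Lines inside a block are joined with a single space
            paragraphs.append(" ".join(lines))
    return paragraphs


# Hand-written stand-in for page.get_text("dict")
sample_page = {
    "blocks": [
        {"type": 0, "lines": [{"spans": [{"text": "Leave "}, {"text": "Policy"}]}]},
        {"type": 1},  # an image block
        {"type": 0, "lines": [
            {"spans": [{"text": "Employees accrue leave"}]},
            {"spans": [{"text": "on a monthly basis."}]},
        ]},
    ]
}

print("\n\n".join(blocks_to_paragraphs(sample_page)))
```

Returning a list of paragraphs instead of one long string also makes the later chunking step easier, since the paragraph boundaries are already explicit.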

Tackling the Challenge of Table Extraction

Tables are the bane of PDF parsing. They often aren't "tables" at all—just a bunch of text strings and lines floating near each other with zero underlying structure. If you can't extract them properly, your RAG system loses access to some of the most information-dense parts of your documents.

For specific, highly structured documents like financial records, a purpose-built tool like a bank statement converter to Excel can be a lifesaver. But for a more general approach inside your Python script, PyMuPDF has some surprisingly powerful table-finding features. We can even shuttle that data directly into a pandas DataFrame, which is perfect for RAG pipelines.

Here’s how you can find and pull out tables from a PDF:

    import fitz  # PyMuPDF
    import pandas as pd

    def extract_tables_to_dataframe(pdf_path):
        doc = fitz.open(pdf_path)
        all_tables = []
        for page in doc:
            # find_tables() is a fantastic, high-level feature in PyMuPDF
            table_list = page.find_tables()
            # The detected tables live in the finder's .tables list
            for table in table_list.tables:
                # .extract() turns the detected table into a simple list of lists
                extracted_table = table.extract()
                if extracted_table:
                    # Treat the first row as the header and the rest as data
                    df = pd.DataFrame(extracted_table[1:], columns=extracted_table[0])
                    all_tables.append(df)
        doc.close()
        return all_tables

    # Example usage (to_markdown() needs the optional "tabulate" package)
    # financial_tables = extract_tables_to_dataframe("financial_report.pdf")
    # for i, table_df in enumerate(financial_tables):
    #     print(f"--- Table {i+1} ---")
    #     print(table_df.to_markdown())

This snippet automatically hunts for tabular structures on each page and turns them into clean DataFrames. My favorite trick is to then convert them to Markdown. It’s an excellent way to feed tables to an LLM because it explicitly preserves the row and column structure, which is critical for accurate retrieval on tabular data. For a deeper dive, check out our guide on extracting tables from PDFs.
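If you'd rather skip pandas for the Markdown step, the list of lists that .extract() returns can be converted directly. The sketch below is one possible approach, not PyMuPDF's own serializer: the helper name is hypothetical, and it assumes the first row is the header and that empty cells may come back as None.

```python
def rows_to_markdown(rows):
    """Render a list-of-lists table (first row = header) as a Markdown pipe table."""
    if not rows:
        return ""
    width = max(len(row) for row in rows)

    def clean(cell):
        # Empty cells become "", line breaks collapse, and pipes are escaped
        text = "" if cell is None else str(cell)
        return " ".join(text.split()).replace("|", "\\|")

    # Pad short rows so every row has the same number of columns
    padded = [[clean(c) for c in row] + [""] * (width - len(row)) for row in rows]
    header, body = padded[0], padded[1:]
    lines = [
        "| " + " | ".join(header) + " |",
        "| " + " | ".join(["---"] * width) + " |",
    ]
    lines += ["| " + " | ".join(row) + " |" for row in body]
    return "\n".join(lines)


# Hypothetical extracted table with a missing cell and a wrapped cell
rows = [["Quarter", "Revenue"], ["Q1", None], ["Q2", "1.2\nmillion"]]
print(rows_to_markdown(rows))
```

Collapsing line breaks inside cells matters more than it looks: a wrapped cell that keeps its newline will break the Markdown row and scramble the table for the LLM.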

The whole decision-making process for picking a PDF parser boils down to a few key questions about what you truly need. For RAG, getting accuracy and table extraction right is often far more important than raw speed.

Handling Images and Basic OCR

Modern RAG systems are going multi-modal, meaning they need to understand images, not just text. Extracting images is the first step. And if you're dealing with scanned documents where the text is just a picture, Optical Character Recognition (OCR) is non-negotiable.

PyMuPDF makes it easy to grab the raw image data. From there, you can hand it off to an OCR engine like Tesseract using the pytesseract library.

Pro Tip: Don't just throw raw images at your OCR tool. A little pre-processing—like converting to grayscale, bumping up the contrast, and de-skewing—can make a massive difference. I've seen a 20-30% jump in character accuracy from just a few basic image tweaks.

Here's a simple workflow for pulling images and running OCR when needed:

- Find the Images: Loop through the PDF objects on each page to identify images.

- Extract the Bytes: Get the raw image data out and hold it in memory.

- Run OCR: Use a library like Pillow to open the image from its bytes and then have pytesseract do its magic.

    import io

    import fitz  # PyMuPDF
    import pytesseract
    from PIL import Image

    def extract_images_and_ocr(pdf_path):
        doc = fitz.open(pdf_path)
        extracted_content = []
        for page_num, page in enumerate(doc):
            image_list = page.get_images(full=True)
            for img_index, img in enumerate(image_list):
                xref = img[0]
                base_image = doc.extract_image(xref)
                image_bytes = base_image["image"]
                # Use Pillow to open the image directly from the byte stream
                image = Image.open(io.BytesIO(image_bytes))
                # Now, perform OCR on the image object
                ocr_text = pytesseract.image_to_string(image)
                content = {
                    "page": page_num + 1,
                    "image_index": img_index,
                    "ocr_text": ocr_text.strip(),
                }
                if content["ocr_text"]:  # Only add if OCR found something
                    extracted_content.append(content)
        doc.close()
        return extracted_content

    # Heads up: You'll need to have Tesseract installed on your system for this to work.
    # ocr_results = extract_images_and_ocr("scanned_document.pdf")
    # print(ocr_results)

By combining these three targeted extraction methods, you're not just parsing a PDF; you're creating a complete, structured representation of it. This high-fidelity data—clean text, organized tables, and transcribed images—is the essential raw material you need to build a truly powerful and accurate RAG system.

Handling Scanned Docs and Complex Layouts

So far, we’ve been dealing with digitally-native PDFs, the easy stuff where the text is right there for the taking. But the real world is messy. You’ll be hit with scanned documents, multi-column nightmares, and pages cluttered with headers and footers that will absolutely poison your RAG system's knowledge base.

When you parse a PDF with Python in these scenarios, basic text extraction will fall flat. This is where you level up from simple parsing to intelligent document understanding. The goal is to dissect the visual layout and piece it back together into a clean, logical text flow that actually makes sense. Skip this, and you're just feeding your LLM noisy, out-of-order garbage.

Unleashing OCR for Scanned Documents

A scanned PDF is really just an image wearing a PDF costume. To a standard parser, it’s a blank slate with zero text. This is where Optical Character Recognition (OCR) becomes your most valuable player. Tesseract is the open-source king here, and luckily for us, it plugs right into PyMuPDF.

The process is straightforward: we'll pull each page out as an image and then hand that image over to the OCR engine to do its magic.

    import io

    import fitz  # PyMuPDF
    import pytesseract
    from PIL import Image

    def ocr_scanned_pdf(pdf_path):
        doc = fitz.open(pdf_path)
        full_text = ""
        for page in doc:
            # Render the page to a high-resolution pixmap (image)
            pix = page.get_pixmap(dpi=300)
            img_bytes = pix.tobytes("png")
            # Open the image from bytes and perform OCR
            image = Image.open(io.BytesIO(img_bytes))
            text = pytesseract.image_to_string(image)
            full_text += text + "\n\n"  # Add page break
        doc.close()
        return full_text

    # You'll need Tesseract installed on your system for this to work
    # scanned_text = ocr_scanned_pdf("scanned_invoice.pdf")
    # print(scanned_text)

A Critical Tip: Don't just throw the raw image at your OCR engine. A little bit of image preprocessing goes a long way. Simple tweaks like converting to grayscale, bumping up the contrast, or correcting page skew can slash character error rates by 20-40%, especially with lower-quality scans. Better OCR directly translates to fewer "misses" during retrieval.
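OCR is slow, so you usually don't want to run it on pages that already have a perfectly good text layer. One simple heuristic, sketched below, is to send a page down the OCR path only when it has almost no extractable text but does contain at least one image. The function name and the 25-character cutoff are assumptions for illustration, so tune the threshold against your own documents.

```python
def needs_ocr(extracted_text: str, image_count: int, min_chars: int = 25) -> bool:
    """Guess whether a page is a scan: (almost) no text layer, but at least one image."""
    visible_chars = sum(1 for ch in extracted_text if not ch.isspace())
    return visible_chars < min_chars and image_count > 0


# A page with no text layer and one full-page image looks like a scan
print(needs_ocr("", image_count=1))
# A page with a real text layer is left alone, even if it has images
print(needs_ocr("Quarterly revenue increased across all regions.", image_count=2))
```

With PyMuPDF you could feed it something like needs_ocr(page.get_text(), len(page.get_images())) and only render and OCR the pages that come back True.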

Deconstructing Complex Layouts

Multi-column layouts, like the ones you see in academic papers or newsletters, are another classic trap. A naive parser reads straight across the page from left to right, mashing unrelated columns together into a nonsensical word salad.

The trick is to programmatically spot these columns and process them one by one. PyMuPDF is great for this because it gives you the coordinates of every text block. You can define the boundaries for each column and extract the text from each one separately to maintain the correct reading order.

A simple strategy looks like this:

- Find the Midpoint: Figure out the horizontal center of the page.

- Define Bounding Boxes: Create two rectangular "clip" zones—one for the left column, one for the right.

- Extract Sequentially: Run page.get_text() twice, once for each clipped area, and then stitch the results together.

You can use the same logic to surgically remove headers and footers. By defining a "safe zone" for the main body of text (say, ignoring the top 10% and bottom 5% of the page), you stop that repetitive noise from polluting your dataset.
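The midpoint split and the header/footer "safe zone" can be sketched together as plain rectangle math. The helper below is hypothetical and assumes a strict two-column page with PDF-style coordinates where y grows downward from the top edge. The 10% and 5% margins are the example values from the text above, not universal constants.

```python
def column_clips(page_width, page_height, top_margin=0.10, bottom_margin=0.05, gutter=0.0):
    """Return (left, right) clip rectangles as (x0, y0, x1, y1) tuples.

    The top and bottom margins carve out a safe zone that excludes headers
    and footers; the optional gutter leaves a gap around the page midpoint.
    """
    top = page_height * top_margin
    bottom = page_height * (1.0 - bottom_margin)
    mid = page_width / 2.0
    left = (0.0, top, mid - gutter / 2.0, bottom)
    right = (mid + gutter / 2.0, top, page_width, bottom)
    return left, right


# A 600 x 800 point page, used here purely as an example size
left, right = column_clips(600, 800)
print("left column clip: ", left)
print("right column clip:", right)
```

With PyMuPDF, each tuple can be turned into a rectangle and passed as the clip argument, for example page.get_text("text", clip=fitz.Rect(*left)) followed by the same call with right, using page.rect.width and page.rect.height for the page size.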

For AI engineers building RAG pipelines, published comparisons back up the point that basic parsing just doesn't cut it. For example, LlamaParse reportedly recreated tables comparing the Nasdaq-100 to the S&P 500 accurately, capturing the 55% tech weighting in the Nasdaq versus 22% in the S&P, along with 315% cumulative returns. An evaluation by Unstract found PyMuPDF ahead of PyPDF on complex documents, while tests on dev.to clocked pymupdf4llm at just 0.12s for markdown output, which suits tools like ChunkForge's semantic chunking. You can find more of these advanced extraction tips on Parseur's blog.

Reconstructing the Logical Reading Order

At the end of the day, your goal is to produce a single, coherent stream of text that flows naturally. Once you've handled columns and stripped out the junk, you have to reassemble the content in an order that a human would actually read.

This often means sorting text blocks first by the column they belong to, then by their vertical position (the y0 coordinate, the block's top edge), and finally by their horizontal position (the x0 coordinate). This careful reconstruction is vital. It ensures that when you later chunk this text for your vector database, each chunk represents a complete, logical idea, leading to far more accurate retrieval for your RAG system.
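Here's a rough sketch of that sort for a two-column page. It assumes blocks shaped like the tuples returned by page.get_text("blocks"), which start with (x0, y0, x1, y1, text, ...). The function name is hypothetical, and full-width elements such as a title spanning both columns would need separate handling that this sketch does not attempt.

```python
def sort_reading_order(blocks, page_width):
    """Order blocks left column first, then right; top to bottom within each column."""
    mid = page_width / 2.0

    def key(block):
        x0, y0, x1, _y1 = block[:4]
        # A block belongs to the column that contains its horizontal center
        column = 0 if (x0 + x1) / 2.0 < mid else 1
        # Rounding y0 keeps tiny baseline jitter from reordering neighbors
        return (column, round(y0, 1), x0)

    return sorted(blocks, key=key)


# Hand-written blocks in the scrambled order a naive parser might return
blocks = [
    (310, 100, 590, 150, "right top", 0, 0),
    (20, 300, 290, 350, "left bottom", 1, 0),
    (20, 100, 290, 150, "left top", 2, 0),
    (310, 300, 590, 350, "right bottom", 3, 0),
]

for block in sort_reading_order(blocks, page_width=612):
    print(block[4])
```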

Turning Raw Text into RAG-Ready Chunks

Getting clean text out of a PDF is a huge win, but it's really just the starting line. The real magic for any RAG (Retrieval-Augmented Generation) system happens in the next step: how you break that text into meaningful pieces, or "chunks," for your vector database.

The quality of your chunking strategy is everything. It directly dictates how well your system can retrieve relevant information. If you get it wrong, even the most perfectly parsed text won't give your LLM the context it needs to generate a useful answer.

A common mistake is just slicing a document into fixed-size pieces. This is a fast way to end up with junk. You'll split sentences mid-thought, separate headings from their content, and completely destroy the logical flow between ideas. A much smarter approach is to focus on preserving the semantic integrity of the material. This means your chunks should represent complete, coherent thoughts.

Finding the Right Chunking Strategy

No two documents are alike, so a one-size-fits-all chunking method is a recipe for failure. Your choice has to match the structure of your content.

- Fixed-Size Chunking: This is the most basic approach where you just slice text into chunks of a set number of characters. It's simple, but it’s also contextually blind and almost always creates awkward, incomplete chunks, leading to poor retrieval.

- Paragraph-Based Chunking: A big improvement. Paragraphs usually contain a single, complete idea, making them a natural boundary for splitting text while keeping context intact.

- Heading-Based Chunking: This is perfect for structured documents like manuals or annual reports. You can group all the text under a specific heading (like an H2 or H3 section) into a single chunk, which does a great job of preserving the document's hierarchy. This allows for more targeted retrieval.

- Semantic Chunking: This is the most sophisticated method. It uses embedding models to group sentences based on how similar they are conceptually, creating chunks that are thematically tight, even if they vary in length. This often yields the best retrieval results.
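To make the difference concrete, here's a minimal sketch of paragraph-based chunking with a size budget. It assumes paragraphs are separated by blank lines (as in the extraction output earlier) and it never cuts a paragraph in half, so a single oversized paragraph is kept whole rather than split. The function name and the character budget are illustrative choices.

```python
def chunk_paragraphs(text: str, max_chars: int = 500) -> list[str]:
    """Pack whole paragraphs into chunks of at most max_chars where possible."""
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks, current = [], ""
    for para in paragraphs:
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars or not current:
            # Either it still fits, or this is the first paragraph of a new chunk
            current = candidate
        else:
            # Adding this paragraph would overflow: close the chunk, start a new one
            chunks.append(current)
            current = para
    if current:
        chunks.append(current)
    return chunks


# Three 30-character paragraphs with a 70-character budget
text = "A" * 30 + "\n\n" + "B" * 30 + "\n\n" + "C" * 30
for i, chunk in enumerate(chunk_paragraphs(text, max_chars=70), start=1):
    print(f"chunk {i}: {len(chunk)} chars")
```

Compare that with fixed-size slicing, which would happily cut the second paragraph in the middle just because the character count ran out.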

To get a head start, you could even use a dedicated PDF Summarizer to distill key information from your documents, giving you a condensed, RAG-ready foundation to work from.

Putting Smart Chunking into Practice with Python

The key to intelligent chunking is respecting the document's original structure. A great way to do this is to first parse the PDF into a format that keeps these structural cues, like Markdown. From there, you can split the content based on Markdown headers. This simple trick ensures a chunk never straddles two different sections, keeping the context clean and focused.

Here’s a conceptual look at how you might do this with a library like langchain, splitting by Markdown headers:

    # Newer LangChain releases expose this class from the langchain_text_splitters package
    from langchain.text_splitter import MarkdownHeaderTextSplitter

    # Imagine 'markdown_text' is the structured text from your parsing step
    markdown_text = """
    # Annual Report Summary

    ## Q1 Financials
    Profits were up by 15%...

    ## Q2 Projections
    We anticipate growth in the European market...
    """

    headers_to_split_on = [
        ("#", "Header 1"),
        ("##", "Header 2"),
    ]

    # This splitter creates chunks based on the document's section hierarchy
    md_splitter = MarkdownHeaderTextSplitter(headers_to_split_on=headers_to_split_on)
    md_header_splits = md_splitter.split_text(markdown_text)

    # The output is a list of chunks, each with its parent headers as metadata.
    # In current releases each chunk is a Document, for example:
    # Document(page_content='Profits were up by 15%...', metadata={'Header 1': 'Annual Report Summary', 'Header 2': 'Q1 Financials'})

This approach is so effective because every single chunk now carries its structural history along with it as metadata.
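If you want to see what's going on under the hood, or avoid the extra dependency, the core idea fits in a few lines of plain Python. This is a simplified sketch rather than LangChain's implementation: the function name is hypothetical, it only recognizes "#"-style headings, and it would be fooled by "#" lines inside fenced code blocks.

```python
import re

HEADER_RE = re.compile(r"^(#{1,6})\s+(.*)$")


def split_by_headers(markdown_text: str) -> list[dict]:
    """Split Markdown into chunks, each tagged with the headings above it."""
    chunks = []
    path = {}   # heading level -> heading title currently in effect
    body = []   # lines collected since the last heading

    def flush():
        content = "\n".join(body).strip()
        if content:
            headers = [path[level] for level in sorted(path)]
            chunks.append({"content": content, "headers": headers})
        body.clear()

    for line in markdown_text.splitlines():
        match = HEADER_RE.match(line.strip())
        if match:
            flush()
            level = len(match.group(1))
            # A new heading closes every heading at the same or a deeper level
            for stale in [lvl for lvl in path if lvl >= level]:
                del path[stale]
            path[level] = match.group(2).strip()
        else:
            body.append(line)
    flush()
    return chunks


doc = (
    "# Annual Report Summary\n\n"
    "## Q1 Financials\nProfits were up by 15%...\n\n"
    "## Q2 Projections\nWe anticipate growth in the European market...\n"
)
for chunk in split_by_headers(doc):
    print(" > ".join(chunk["headers"]), "->", chunk["content"])
```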

Why Metadata Enrichment is Non-Negotiable

The text inside a chunk is only half the story. The other, equally important half is the metadata you attach to it. Think of metadata as your secret weapon for building sophisticated retrieval strategies that go way beyond a simple similarity search. It lets you filter, route, and score search results with surgical precision.

Simply embedding raw text isn't enough. Rich metadata—page numbers, section titles, authors, publication dates—is what turns a basic vector search into an intelligent information retrieval system.

At a minimum, you should be capturing this metadata for every chunk:

- Source Filename: Where did this chunk come from?

- Page Number: Absolutely critical for letting users verify the source of an answer.

- Section Headers: The hierarchy of headings (H1 > H2 > H3) that contain the chunk.

- Document Type: Is it a financial report, a legal contract, or an academic paper?
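As a sketch of what that minimum looks like in code, here is a small record type carrying the four fields above, plus a filter that narrows candidates by metadata before (or after) the similarity search. The class, the helper, and the sample filenames are all hypothetical; most vector stores accept a plain dictionary of metadata, which is what asdict() produces.

```python
from dataclasses import asdict, dataclass, field


@dataclass
class ChunkRecord:
    text: str
    source: str                                        # source filename
    page: int                                          # 1-based page number
    headers: list[str] = field(default_factory=list)   # H1 > H2 > H3 path
    doc_type: str = "unknown"


def filter_chunks(chunks, **criteria):
    """Keep only the chunks whose metadata equals every given criterion."""
    return [c for c in chunks if all(getattr(c, k) == v for k, v in criteria.items())]


chunks = [
    ChunkRecord("Profits were up by 15%...", "annual_report.pdf", 4,
                ["Annual Report Summary", "Q1 Financials"], "financial_report"),
    ChunkRecord("Either party may terminate...", "services_agreement.pdf", 12,
                ["Termination"], "legal_contract"),
]

for chunk in filter_chunks(chunks, doc_type="financial_report"):
    print(asdict(chunk))
```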

The growth in Python's PDF ecosystem makes this all possible. We've come a long way since the early days of PyPDF. The intelligent document processing market hit $2.30 billion for a reason—it’s now a powerhouse for AI. That market is projected to grow to $2.96 billion because modern RAG systems need more than basic text parsing; they need sophisticated chunking and metadata to build knowledge bases that are genuinely useful.

By combining smart chunking with rich metadata, you're building a foundation for a RAG system that isn't just accurate, but also transparent and trustworthy.

Working with PDFs in Python for a RAG system inevitably throws a few curveballs your way. Let’s tackle some of the most common snags you'll hit and get your data pipeline running clean.

How Can I Improve OCR Accuracy on Scanned PDFs?

If you're getting garbage text from scanned documents, chances are your source images are the culprit. Low-quality scans are a huge source of errors. Before you even think about handing an image over to an OCR engine like Tesseract, you need to clean it up with a library like OpenCV or Pillow.

A little preprocessing goes a long, long way.

- Convert to Grayscale: This is the easiest first step and simplifies the image data.

- Increase Contrast: Makes the text pop against the background.

- Apply Binarization: This forces the image into sharp black and white, which OCR engines love.

- De-skew: Tilts and skews from the scanning process can trip up even the best OCR. Straighten it out first.

Running through even a couple of these steps can slash your character error rates and feed much cleaner, more reliable text into your RAG system.
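To show what binarization actually does, here's a dependency-free sketch of Otsu's method, a common way to pick the black/white cutoff automatically. It assumes the image has already been converted to a flat list of 0-255 grayscale values. In a real pipeline you would more likely call an optimized implementation from an image library such as OpenCV than run a pure-Python loop like this one.

```python
def otsu_threshold(pixels):
    """Return the 0-255 cutoff that maximizes between-class variance (Otsu's method)."""
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(value * count for value, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    w_dark, sum_dark = 0, 0
    for t in range(256):
        w_dark += hist[t]
        sum_dark += t * hist[t]
        if w_dark == 0:
            continue              # no pixels at or below t yet
        w_light = total - w_dark
        if w_light == 0:
            break                 # every pixel is already in the dark class
        mean_dark = sum_dark / w_dark
        mean_light = (sum_all - sum_dark) / w_light
        between_var = w_dark * w_light * (mean_dark - mean_light) ** 2
        if between_var > best_var:
            best_t, best_var = t, between_var
    return best_t


def binarize(pixels, threshold):
    """Map every pixel to pure black (0) or pure white (255)."""
    return [255 if p > threshold else 0 for p in pixels]


# Made-up scan: dark ink around 30-40, greyish paper around 200-220
pixels = [30] * 50 + [40] * 50 + [200] * 50 + [220] * 50
t = otsu_threshold(pixels)
print("threshold:", t)
print("white pixels after binarization:", binarize(pixels, t).count(255))
```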

What Is the Best Way to Handle Multi-Column Layouts?

Don't make the classic mistake of reading straight across a multi-column page. A naive script will just grab text line-by-line, mashing columns together into an incoherent mess.

The smart way to handle this is with a library like PyMuPDF that gives you the bounding box coordinates for every chunk of text. With those coordinates, you can programmatically define the regions for each column—say, the left half and right half of the page—and then pull the text out of each region one by one. This is the only way to preserve the correct reading order.

Think of it this way: you're treating each column as its own mini-document. This stops context from getting jumbled, which is absolutely critical for getting accurate results from your RAG application.

Which Library Is Best for Complex Tables?

When the tables get tricky, PyMuPDF (Fitz) is usually your most reliable bet. It's just more robust for complex layouts.

While a tool like pdfplumber has a friendly API, its accuracy can sometimes take a hit on more complicated tables. The PDF parsing world has come a long way, but this is still a major challenge. Some analyses have shown pdfplumber’s performance on tables can be anywhere from 61-97%, and that kind of variability can be a real problem for data-heavy RAG systems. You can dig deeper into these performance metrics and find out more about the best APIs for data extraction.

Why Do I Get Gibberish Text From Some PDFs?

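Nine times out of ten, this is a classic encoding issue. PDFs can use a whole smorgasbord of character encodings, often defined per font, and a font with a missing or broken mapping back to Unicode gives the parser no reliable way to turn glyphs into characters. If your script just assumes everything is UTF-8 but the file is using something else, you’ll get garbled, nonsensical characters.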

Most modern libraries are pretty good at figuring this out automatically. But if you're stuck, look for an option in your library to either specify the encoding directly or, more practically, to handle decoding errors gracefully. You can often tell the parser to just ignore or replace characters it can’t read, which at least prevents the whole script from crashing.
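Both ideas can be sketched with the standard library alone: decode leniently so bad bytes become the Unicode replacement character instead of raising, then measure how much of the result looks unreadable so you can flag the page for another strategy such as OCR. The function names are hypothetical, and any cutoff you apply to the ratio is something to calibrate on your own files.

```python
def decode_leniently(raw: bytes, encoding: str = "utf-8") -> str:
    """Decode bytes, replacing anything undecodable with U+FFFD instead of crashing."""
    return raw.decode(encoding, errors="replace")


def gibberish_ratio(text: str) -> float:
    """Fraction of characters that are replacement marks or non-printable controls."""
    if not text:
        return 0.0
    bad = sum(
        1 for ch in text
        if ch == "\ufffd" or (not ch.isprintable() and not ch.isspace())
    )
    return bad / len(text)


# Latin-1 bytes wrongly treated as UTF-8: the accented letter cannot be decoded
raw = "café".encode("latin-1")
text = decode_leniently(raw)
print(repr(text), f"gibberish ratio: {gibberish_ratio(text):.2f}")
```

The same ratio check works on text that came straight out of a PDF library: a page that scores high is a good candidate for a second pass with a different extractor.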
